
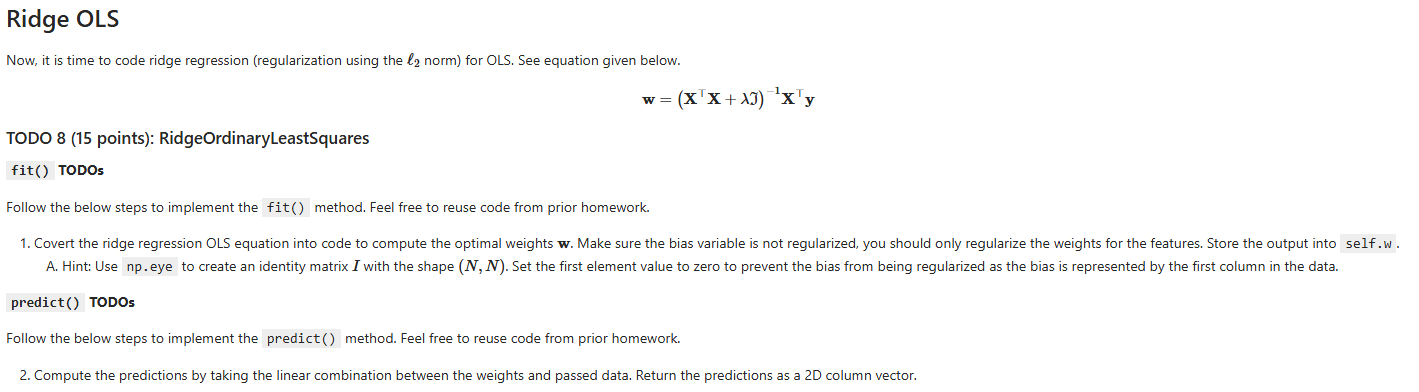

Ridge OLS

Now, it is time to code ridge regression (regularization using the L2 norm) for OLS. See the equation given below.

$$w = (X^T X + \lambda I)^{-1} X^T y$$
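As a sanity check on the equation above, here is a minimal sketch, assuming random illustrative data and a hypothetical helper named `ridge_closed_form` (not part of the assignment). The closed-form w should make the gradient of the ridge objective ||y - Xw||² + λ||w||², which is -2Xᵀ(y - Xw) + 2λw, equal to zero. This version regularizes every weight, exactly as the equation is written; the bias exception is handled in the fit step.

```python
import numpy as np


def ridge_closed_form(X: np.ndarray, y: np.ndarray, lamb: float) -> np.ndarray:
    """Hypothetical helper: w = (X^T X + lamb*I)^{-1} X^T y, regularizing all weights."""
    I = np.eye(X.shape[1])
    # Solving the linear system is numerically preferable to forming the inverse explicitly.
    return np.linalg.solve(X.T @ X + lamb * I, X.T @ y)


# Illustrative random data (assumption, not from the assignment).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = rng.normal(size=(50, 1))
lamb = 2.0

w = ridge_closed_form(X, y, lamb)

# Gradient of ||y - Xw||^2 + lamb * ||w||^2 evaluated at the closed-form w.
grad = -2 * X.T @ (y - X @ w) + 2 * lamb * w
print(np.allclose(grad, 0))  # True
```

Expanding the gradient gives 2[(XᵀX + λI)w - Xᵀy], which vanishes exactly when w satisfies the equation above.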

TODO points: RidgeOrdinaryLeastSquares

fit TODOs

Follow the below steps to implement the fit method. Feel free to reuse code from prior homework.

Convert the ridge regression OLS equation into code to compute the optimal weights w. Make sure the bias is not regularized: only the weights for the features should be regularized. Store the output in self.w.

Hint: Use np.eye() to create an identity matrix I with shape N×N, where N is the number of columns in X (the features plus the bias). Set the first diagonal element, I[0, 0], to zero so that the bias is not regularized, since the bias is represented by the first column in the data.
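A minimal sketch of the fit computation, written as a standalone function so it can be tested on its own. The name `ridge_fit` and the synthetic data are hypothetical; inside the class the returned array would be stored in `self.w`. It assumes, as the hint says, that the bias is the first column of X, a column of ones.

```python
import numpy as np


def ridge_fit(X: np.ndarray, y: np.ndarray, lamb: float) -> np.ndarray:
    """Hypothetical helper: ridge weights with the bias (column 0 of X) left unregularized."""
    n_cols = X.shape[1]
    I = np.eye(n_cols)
    I[0, 0] = 0  # do not penalize the bias weight
    # Equivalent to (X^T X + lamb*I)^{-1} X^T y, but avoids an explicit inverse.
    return np.linalg.solve(X.T @ X + lamb * I, X.T @ y)


# Synthetic, noiseless data (assumption): y = 5 + 1.5*x1 - 2*x2.
rng = np.random.default_rng(1)
features = rng.normal(size=(100, 2))
X = np.hstack([np.ones((100, 1)), features])  # bias column first
y = 5.0 + features @ np.array([[1.5], [-2.0]])

w = ridge_fit(X, y, lamb=1e6)
print(w.ravel())
```

With a very large lamb the two feature weights are pushed toward zero while the bias stays close to the mean of y, which is the point of zeroing I[0, 0]; with the full identity the bias would be dragged toward zero as well. With lamb = 0 the same function reduces to plain OLS.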

predict TODOs

Follow the below steps to implement the predict method. Feel free to reuse code from prior homework.

Compute the predictions by taking the linear combination of the weights and the passed data. Return the predictions as a 2D column vector.
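A minimal sketch of the predict step as a standalone function (the name `ridge_predict` is hypothetical). It assumes w is the column of weights returned by fit and that X has the same column layout as the training data, with the bias column first.

```python
import numpy as np


def ridge_predict(X: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical helper: linear combination of X's columns with the weights w."""
    # Reshape so the output is always a 2D column vector, even if w was stored as 1D.
    return (X @ w).reshape(-1, 1)


X = np.array([[1.0, 2.0, 3.0],
              [1.0, 0.0, -1.0]])  # first column is the bias term
w = np.array([[0.5], [1.0], [2.0]])

preds = ridge_predict(X, w)
print(preds)  # column vector with values 8.5 and -1.5
```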

```python
import numpy as np


class RidgeOrdinaryLeastSquares:
    """Performs ordinary least squares regression.

    Attributes:
        lamb (float): Regularization parameter for controlling
            L2 regularization.
        w: Vector of weights.
    """

    def __init__(self, lamb: float):
        self.lamb = lamb
        self.w = None

    def fit(self, X: np.ndarray, y: np.ndarray) -> object:
        """Train OLS to learn optimal weights.

        Args:
            X: Training data given as a 2D matrix.
            y: Training labels given as a 2D vector.

        Returns:
            The class's own object reference.
        """
        # TODO
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        """Make predictions using learned weights.

        Args:
            X: Testing data given as a 2D matrix.

        Returns:
            A 2D column vector of predictions for each data sample in X.
        """
        # TODO
        return None
```
