Question: Seeking your assistance in solving the second bullet point correctly. I am not clearly understanding if cudaMemcpyHostToDevice will copy over a variable value from CPU

Seeking your assistance in solving the second bullet point correctly. I am not clearly understanding if cudaMemcpyHostToDevice will copy over a variable value from CPU System Shared Memory? to GPU Global Shared Memory on the device? My understanding is that both the matrix A and the vector b needs to be copied over to GPU in this scenario, and the copy destination is the Global Shared Memory, and then, b needs to be copied over to the shared memory on each Streaming Multiprocessor. And the host code may have these actions within:

Allocate memory for matrix A and vector b using cudaMalloc

Copy A b and c into GPU via cudaMalloc

Launch kernel threads by invoking global matrixVectorMultiply which I need to write. dimGrid and dimBlock need to be set before invoking matrixVectorMultiply should the argument to dimGrid be K meaning and dimBlock here?

Please advise

Attached Problem

points

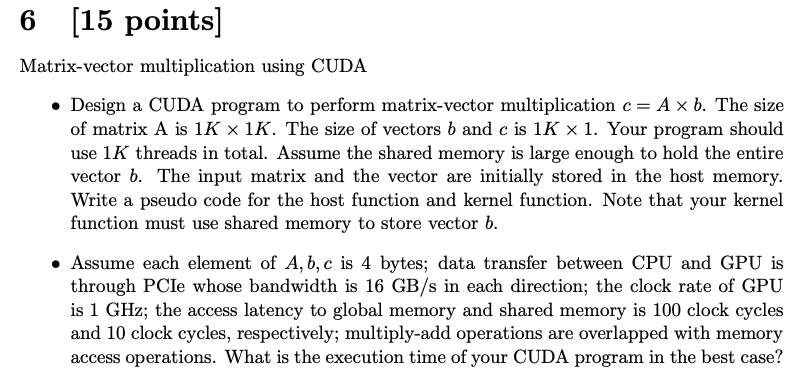

Matrixvector multiplication using CUDA

Design a CUDA program to perform matrixvector multiplication cA xx b The size

of matrix A is K xxK The size of vectors b and c is K xx Your program should

use K threads in total. Assume the shared memory is large enough to hold the entire

vector b The input matrix and the vector are initially stored in the host memory.

Write a pseudo code for the host function and kernel function. Note that your kernel

function must use shared memory to store vector b

Assume each element of Abc is bytes; data transfer between CPU and GPU is

through PCIe whose bandwidth is GBs in each direction; the clock rate of GPU

is GHz ; the access latency to global memory and shared memory is clock cycles

and clock cycles, respectively; multiplyadd operations are overlapped with memory

access operations. What is the execution time of your CUDA program in the best case? points

Matrixvector multiplication using CUDA

Design a CUDA program to perform matrixvector multiplication cA times b The size of matrix A is K times K The size of vectors b and c is K times Your program should use K threads in total. Assume the shared memory is large enough to hold the entire vector b The input matrix and the vector are initially stored in the host memory. Write a pseudo code for the host function and kernel function. Note that your kernel function must use shared memory to store vector b

Assume each element of A b c is bytes; data transfer between CPU and GPU is through PCIe whose bandwidth is mathrm~GBmathrms in each direction; the clock rate of GPU is GHz ; the access latency to global memory and shared memory is clock cycles and clock cycles, respectively; multiplyadd operations are overlapped with memory access operations. What is the execution time of your CUDA program in the best case?

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock