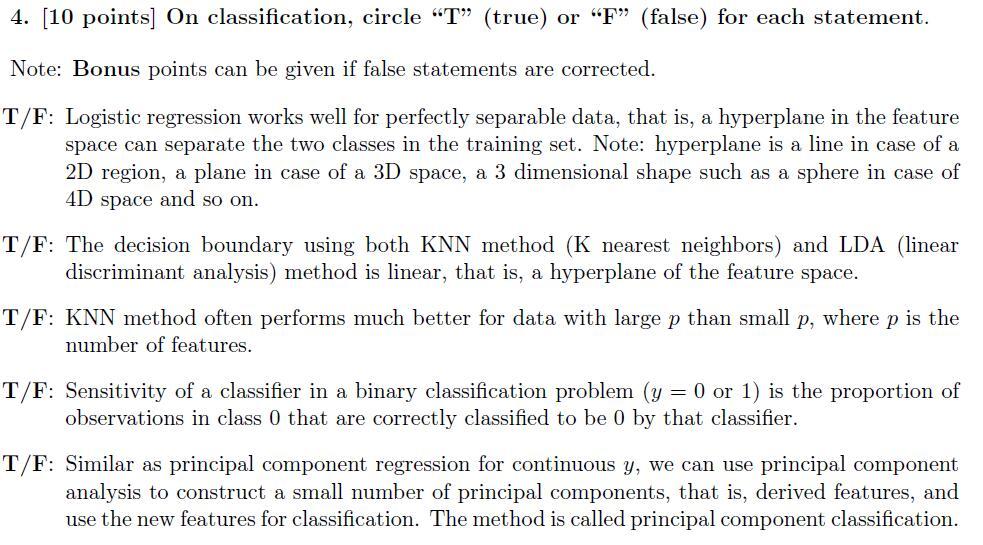

4. [10 points] On classification, circle "T" (true) or "F" (false) for each statement. Note: Bonus points can be given if false statements are corrected.

- T/F: Logistic regression works well for perfectly separable data, that is, when a hyperplane in the feature space can separate the two classes in the training set. Note: a hyperplane is a line in a 2D space, a plane in a 3D space, a flat 3-dimensional subspace in a 4D space, and so on.
- T/F: The decision boundary of both the KNN (K nearest neighbors) method and the LDA (linear discriminant analysis) method is linear, that is, a hyperplane of the feature space.
- T/F: The KNN method often performs much better for data with large p than with small p, where p is the number of features.
- T/F: The sensitivity of a classifier in a binary classification problem (y = 0 or 1) is the proportion of observations in class 0 that are correctly classified as 0 by that classifier.
- T/F: Similar to principal component regression for continuous y, we can use principal component analysis to construct a small number of principal components, that is, derived features, and use the new features for classification. The method is called principal component classification.
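As a reference point for the sensitivity statement, here is a minimal Python sketch of how sensitivity and specificity are usually computed. It assumes the common convention that class 1 is the positive class; the function name `sensitivity_specificity` and the toy labels are illustrative and do not come from the question.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity), treating class 1 as positive.

    Assumes both classes occur in y_true, so neither denominator is zero.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    sensitivity = tp / (tp + fn)  # share of actual 1s predicted as 1
    specificity = tn / (tn + fp)  # share of actual 0s predicted as 0
    return sensitivity, specificity


# Toy data (made up for illustration): 4 observations in class 1, 6 in class 0.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")
```

Under this convention, the proportion of class-0 observations correctly classified as 0 is the specificity, not the sensitivity.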

Step by Step Solution

The solution involves 3 steps.

1. True. Logistic regression has traditionally been used to come up with a hyperplane tha...
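One caveat may be worth checking numerically: on perfectly separable training data, the unregularized logistic-regression likelihood has no finite maximizer, so the fitted coefficient keeps growing as optimization continues. The sketch below is a hypothetical one-feature illustration using plain gradient ascent; the data, the learning rate, and the helper `fit_slope` are assumptions made for this example, not part of the original solution.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_slope(xs, ys, steps, lr=0.1):
    """Gradient ascent on the log-likelihood of a no-intercept logistic model."""
    w = 0.0
    for _ in range(steps):
        # Gradient of the log-likelihood with respect to w.
        grad = sum((y - sigmoid(w * x)) * x for x, y in zip(xs, ys))
        w += lr * grad
    return w


# Perfectly separable toy data: x < 0 is always class 0, x > 0 is always class 1.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

w_100 = fit_slope(xs, ys, steps=100)
w_1000 = fit_slope(xs, ys, steps=1000)
w_10000 = fit_slope(xs, ys, steps=10000)
print(w_100, w_1000, w_10000)  # the slope keeps increasing with more steps
```

The fitted boundary still separates the classes, but the coefficient estimate never settles. In practice this is usually handled with regularization or with a method such as LDA.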
