Question: Step 1 We start in the START state ( in the rotunda ) , and we have four action options that represent the four paths

Step

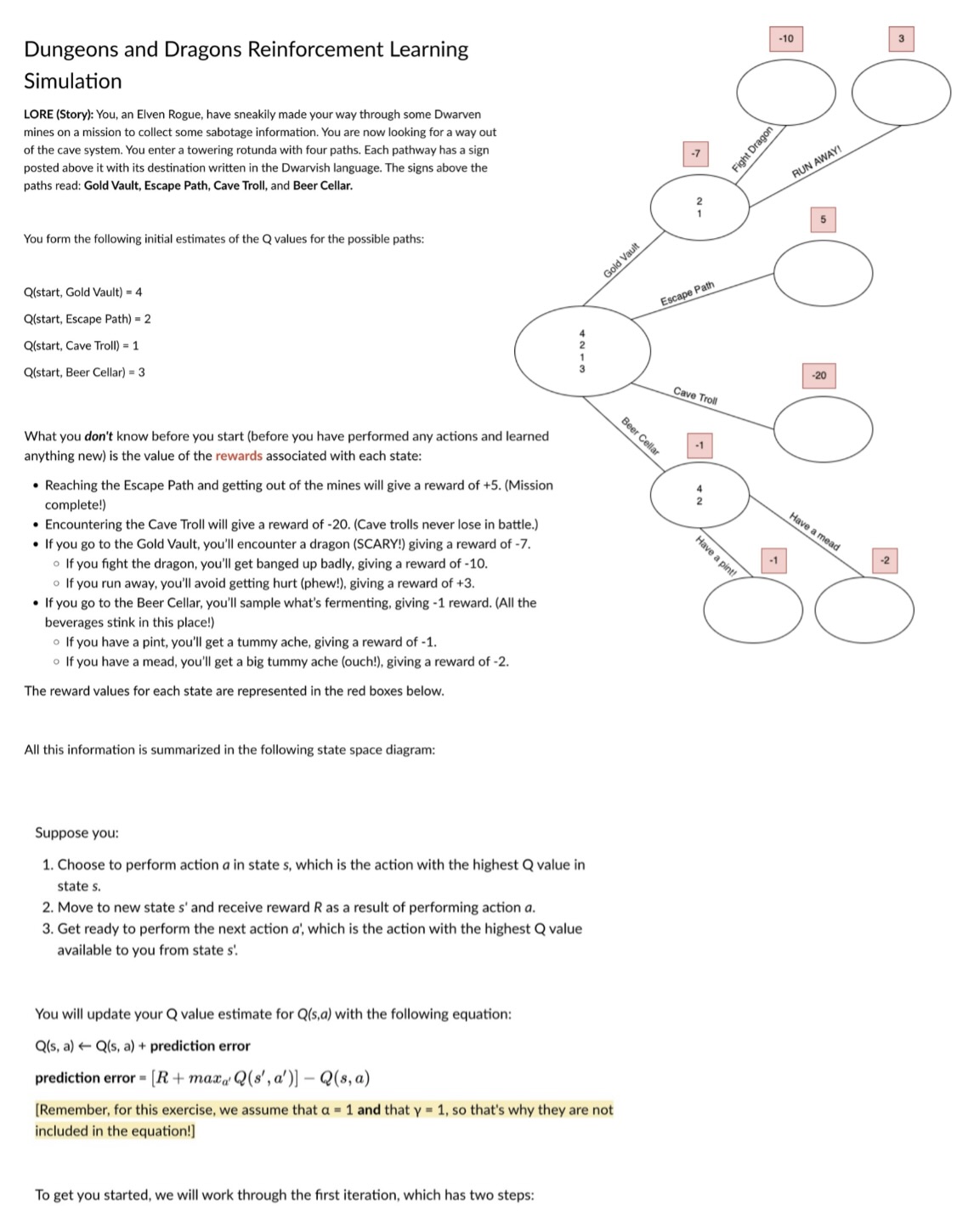

We start in the START state in the rotunda and we have four action options that represent the four paths that we can take through the caves: "Gold VaultEscape Path", Cave Troll and "Beer Cellar". Because our initial value estimate of Qstart Gold Vault is greater than our initial estimates of Qstart Escape Path Qstart Cave Troll and Qstart Beer Cellar we choose the action "Gold Vault We move to state sin vault and upon seeing the dragon in the gold vault SCARY we receive a reward of which was not quite what we expected!

Next, we consider which action to perform from the state in vault. The Qvalue estimates we have for these stateaction pairs are:

QIn Vault, Fight Dragon

QIn Vault, RUN AWAY!

Given that we love a battle, we see that the highest Q value ie maxa'Qs a is given by QIn Vault, Fight Dragon We thus update our initial Q value for choosing to come into the Gold Vault like so:

prediction error

Q start Gold Vault

Step

Now we are in the state in vault", and we have two action options: "fight dragon" and "RUN AWAY!." Because our current estimate of Qin vault, fight dragon is greater than our current estimate of Qin vault, RUN AWAY! we choose to "fight the dragon." This moves us to terminal state a state for which there are no further actions which we can take "end of battle," and gives a reward of That dragon sure messed you up good!

Note: When you are updating Qs a after moving from state s to a terminal state s then Qsa because there are no further possible actions to take in s There are no further actions available once you have chosen to fight the dragon, so the value for Qs a is

We thus update our Q value like so:

prediction error

Qin vault, fight dragon

We define an "iteration" as starting at the START node and reaching a terminal node. After each iteration, you go back to the START state. After this first iteration, here are the new, updated Q values, which reflect what you learned based on the actions you took this time around:

Qstart Gold Vault

Qstart Escape Path

Qstart Cave Troll

Qstart Beer Cellar

Qin vault, Fight Dragon

Qin vault, RUN AWAY!

The context for the questions is in the photos

Using the Q values that you learned after the FIRST iteration, record the updated Q values after the SECOND iteration below:

Qstart Gold Vault

Qstart Escape Path

Qstart Cave Troll

Qstart Beer Cellar

Qin cellar, Have a mead

Qin cellar, Have a pint

Hint: When you transition from sa to sa you'll only update Qsa to reflect what you learned after performing your chosen action and moving to the next state. Not every Q value gets updated every time!

Compare the first iteration with the second iteration, and consider what did and didn't change. Which of the following is true?

Some of the Q values change. True or False

The rewards change. True or False

The actions available from the Start state change. True or False

Using the new Q values from the SECOND iteration, run a THIRD iteration of the simulation and report the latest updated Q values below:

Qstart Gold Vault

Qstart Escape Path

Qstart Cave Troll

Qstart Beer Cellar

Qin cellar, Have a mead

Qin cellar, Have a pint

Using the new Q values from the THIRD iteration, run a FOURTH and final iteration of the simulation.

Now select from the choice below the latest Q values for the following stateaction pairs:

Qstart Gold Vault

Qstart Escape Path

Qstart Cave Troll

Qstart Beer Cellar

a

b

c

d

For this RL simulation, we stipulated that alpha and that gamma

But let's imagine just for this question that when you chose 'Have a mead' during the nd iteration, drinking the mead changed your learning rate, so now alpha while gamma remains the same: gamma What effect would this have on your Qlearning and updating process in later iterations?

a You would learn more slowly and make small changes to your value predictions.

b You would learn quickly and make large changes to your value predictions.

c You would care about future reward much less than present reward.

Which of the following claims isare true about a value function?

A A value function maps from a state to the actual reward received in that state.

B A value function is a prediction about future discounted cumulative reward.

C A value function can be represented as a function Qs a that maps a state and action pair to a predicted future discounted sum of rewards.

D B & C

E A B & C

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock