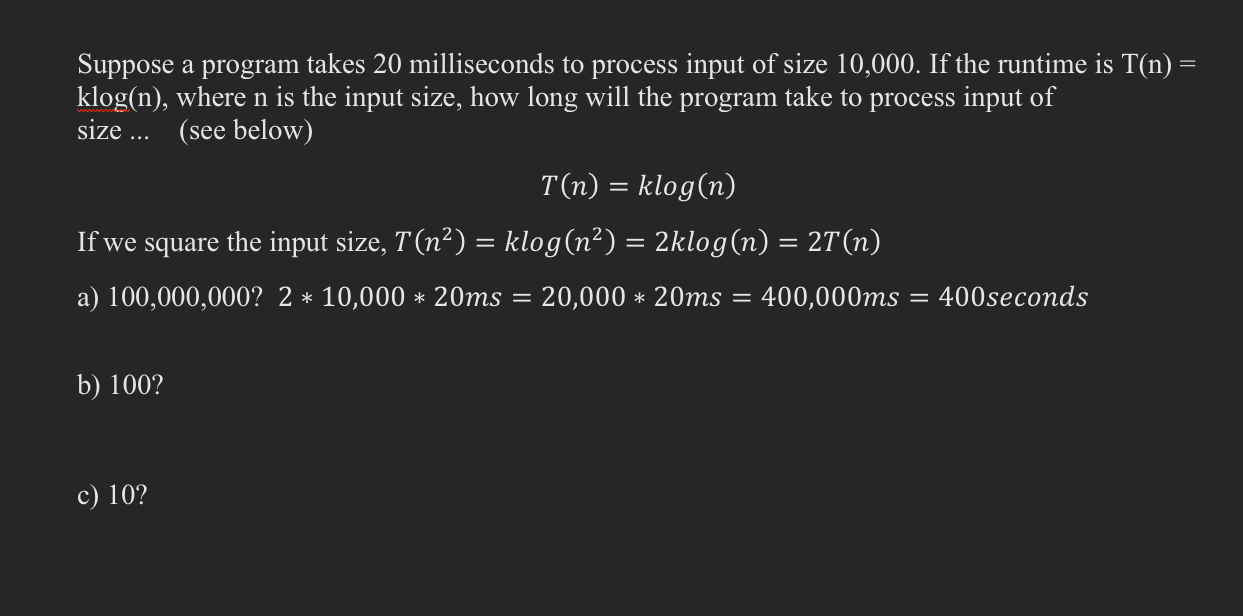

Question: Suppose a program takes 20 milliseconds to process input of size 10,000. If the runtime is $T(n) = k \log n$, where $n$ is the input size, how long will the program take to process input of each size listed below?
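Assuming the model $T(n) = k \log n$ holds exactly, the only unknown is the constant $k$. It can be fixed from the single measurement given, and then any input size $m$ can be evaluated:

$$
k = \frac{20\ \text{ms}}{\log 10{,}000}, \qquad T(m) = k \log m = 20\ \text{ms} \cdot \frac{\log m}{\log 10{,}000}.
$$

The base of the logarithm cancels in the ratio, so using $\log_2$, $\log_{10}$, or $\ln$ gives the same answer.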

With $T(n) = k \log n$, squaring the input size only doubles the runtime: $T(n^2) = k \log(n^2) = 2k \log n = 2\,T(n)$.
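A minimal Python sketch of the same calculation, assuming the runtime follows $T(n) = k \log n$ exactly. The names `BASE_SIZE`, `BASE_TIME_MS`, and `predicted_time_ms` are illustrative, not from the original question. The sketch fits $k$ from the 20 ms / 10,000 data point and evaluates the model at the squared input size, $10{,}000^2 = 100{,}000{,}000$; any other size can be passed in the same way.

```python
import math

# Calibration point from the question: 20 ms for an input of size 10,000.
BASE_SIZE = 10_000
BASE_TIME_MS = 20.0


def predicted_time_ms(n: int) -> float:
    """Predict the runtime in ms for input size n, assuming T(n) = k * log(n).

    k is fitted from the calibration point. The log base cancels out,
    so the natural log (math.log) works as well as log2 or log10.
    """
    k = BASE_TIME_MS / math.log(BASE_SIZE)
    return k * math.log(n)


if __name__ == "__main__":
    squared = BASE_SIZE ** 2  # 100,000,000
    # Prints "T(100,000,000) = 40.0 ms"
    print(f"T({squared:,}) = {predicted_time_ms(squared):.1f} ms")
```

Because $k$ is recomputed from the ratio of logarithms, the result does not depend on which logarithm base the problem intends.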

a seconds

b

c
