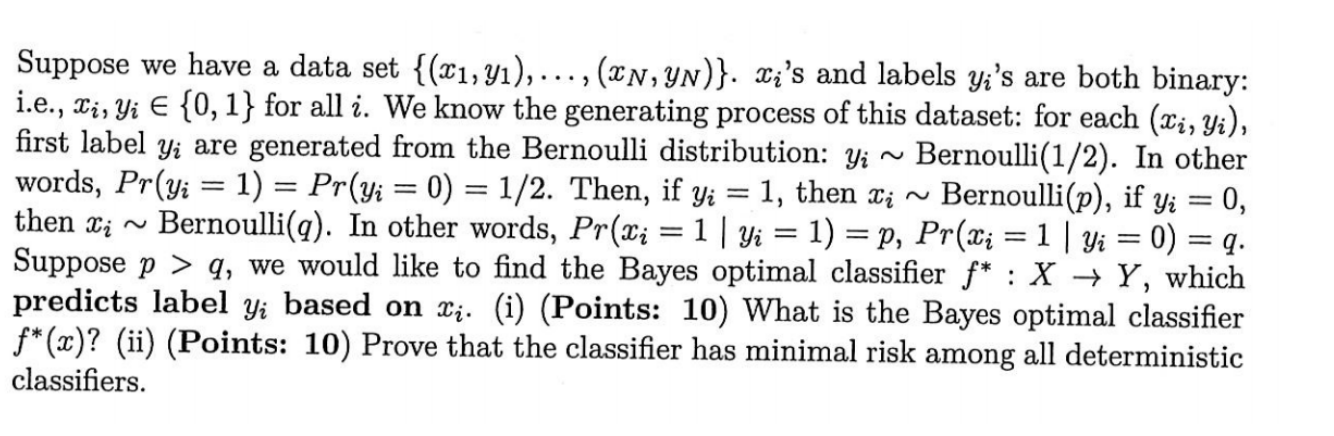

Question: Suppose we have a data set $\{(x_1, y_1), \ldots, (x_N, y_N)\}$. The features $x_i$ and the labels $y_i$ are both binary, i.e., $x_i, y_i \in \{0, 1\}$ for all $i$. We know the generating process of this dataset: for each $(x_i, y_i)$, the label $y_i$ is first drawn from a Bernoulli distribution, $y_i \sim \mathrm{Bernoulli}(1/2)$. In other words, $\Pr(y_i = 1) = \Pr(y_i = 0) = 1/2$. Then, if $y_i = 1$, $x_i \sim \mathrm{Bernoulli}(p)$, and if $y_i = 0$, $x_i \sim \mathrm{Bernoulli}(q)$. In other words, $\Pr(x_i = 1 \mid y_i = 1) = p$ and $\Pr(x_i = 1 \mid y_i = 0) = q$. Suppose $p > q$. We would like to find the Bayes optimal classifier $f^*: \mathcal{X} \to \mathcal{Y}$, which predicts the label $y_i$ based on $x_i$.

(i) (Points: 10) What is the Bayes optimal classifier $f^*(x)$?

(ii) (Points: 10) Prove that this classifier has minimal risk among all deterministic classifiers.
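As a numerical sanity check, here is a minimal Python sketch. By Bayes' rule with equal priors, $\Pr(y = 1 \mid x = 1) = p/(p+q)$ and $\Pr(y = 1 \mid x = 0) = (1-p)/(2-p-q)$; when $p > q$ the first exceeds $1/2$ and the second falls below it, which suggests $f^*(x) = x$ with risk $(1 - p + q)/2$. The sketch computes these posteriors exactly and enumerates all four deterministic maps $\{0,1\} \to \{0,1\}$ to compare their 0-1 risks. The values $p = 7/10$ and $q = 3/10$, and all function names, are hypothetical choices for illustration only; the question just requires $p > q$.

```python
from fractions import Fraction
from itertools import product


def joint(x, y, p, q):
    """Pr(x_i = x, y_i = y) under the stated generating process."""
    prior = Fraction(1, 2)      # y ~ Bernoulli(1/2)
    theta = p if y == 1 else q  # Pr(x = 1 | y)
    return prior * (theta if x == 1 else 1 - theta)


def posterior_y1(x, p, q):
    """Pr(y = 1 | x) via Bayes' rule."""
    num = joint(x, 1, p, q)
    return num / (num + joint(x, 0, p, q))


def bayes_classifier(x, p, q):
    """Predict 1 when Pr(y = 1 | x) > 1/2, else 0 (ties broken toward 0)."""
    return 1 if posterior_y1(x, p, q) > Fraction(1, 2) else 0


def risk(f, p, q):
    """0-1 risk Pr(f(x) != y): total joint mass of the cells where f errs."""
    return sum(joint(x, y, p, q)
               for x, y in product((0, 1), repeat=2) if f(x) != y)


def all_deterministic_classifiers():
    """All 4 maps {0,1} -> {0,1}, keyed by the table (f(0), f(1))."""
    return {table: (lambda x, t=table: t[x])
            for table in product((0, 1), repeat=2)}


if __name__ == "__main__":
    p, q = Fraction(7, 10), Fraction(3, 10)  # hypothetical values with p > q
    for table, f in all_deterministic_classifiers().items():
        print(table, risk(f, p, q))
    print("f*:", tuple(bayes_classifier(x, p, q) for x in (0, 1)))
```

With these values the script prints risks of 1/2, 3/10, 7/10 and 1/2 for the tables (0, 0), (0, 1), (1, 0) and (1, 1), and reports f* as (0, 1), i.e. $f^*(x) = x$. The enumeration only checks one choice of $p$ and $q$; the general argument for (ii) is that any deterministic $f$ has risk $\sum_x \Pr(x) \Pr(y \neq f(x) \mid x)$, and choosing $f(x)$ to maximize the posterior minimizes every term of that sum separately.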
