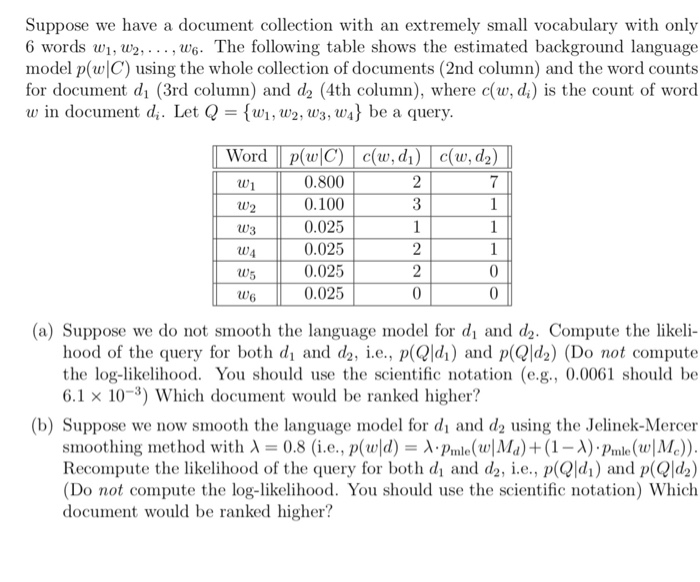

Question: Suppose we have a document collection with an extremely small vocabulary with only 6 words w1, w2,... ,ws. The following table shows the estimated background

Suppose we have a document collection with an extremely small vocabulary with only 6 words w1, w2,... ,ws. The following table shows the estimated background language model p(|C) using the whole collection of documents (2nd column) and the word counts for document di (3rd column) and d2 (4th column), where c(w, di is the count of word w in document di. Let Q -fw1, w2, w3, w) be a query 0.800 0.100 0.025 0.025 0.025 0.025 W3 w) (a) Suppose we do not smooth the language model for di and d2. Compute the likeli- hood of the query for both di and d2, i.e., p(Qldi) and p(Qd2) (Do not compute the log-likelihood. You should use the scientific notation (e.g., 0.0061 should be 6.1 10-3) which document would be ranked higher? (b) Suppose we now smooth the language model for di and d2 using the Jelinek-Mercer smoothing method with 0.8 (i.e. , p(uld-A-Pinle(tul Ma) + (1 A).Mnle(u, Me)) Recompute the likelihood of the query for both di and d2, i.e., p(Qldi) and p(Qd2) (Do not compute the log-likelihood. You should use the scientific notation) Which document would be ranked higher? Suppose we have a document collection with an extremely small vocabulary with only 6 words w1, w2,... ,ws. The following table shows the estimated background language model p(|C) using the whole collection of documents (2nd column) and the word counts for document di (3rd column) and d2 (4th column), where c(w, di is the count of word w in document di. Let Q -fw1, w2, w3, w) be a query 0.800 0.100 0.025 0.025 0.025 0.025 W3 w) (a) Suppose we do not smooth the language model for di and d2. Compute the likeli- hood of the query for both di and d2, i.e., p(Qldi) and p(Qd2) (Do not compute the log-likelihood. You should use the scientific notation (e.g., 0.0061 should be 6.1 10-3) which document would be ranked higher? (b) Suppose we now smooth the language model for di and d2 using the Jelinek-Mercer smoothing method with 0.8 (i.e. , p(uld-A-Pinle(tul Ma) + (1 A).Mnle(u, Me)) Recompute the likelihood of the query for both di and d2, i.e., p(Qldi) and p(Qd2) (Do not compute the log-likelihood. You should use the scientific notation) Which document would be ranked higher

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts