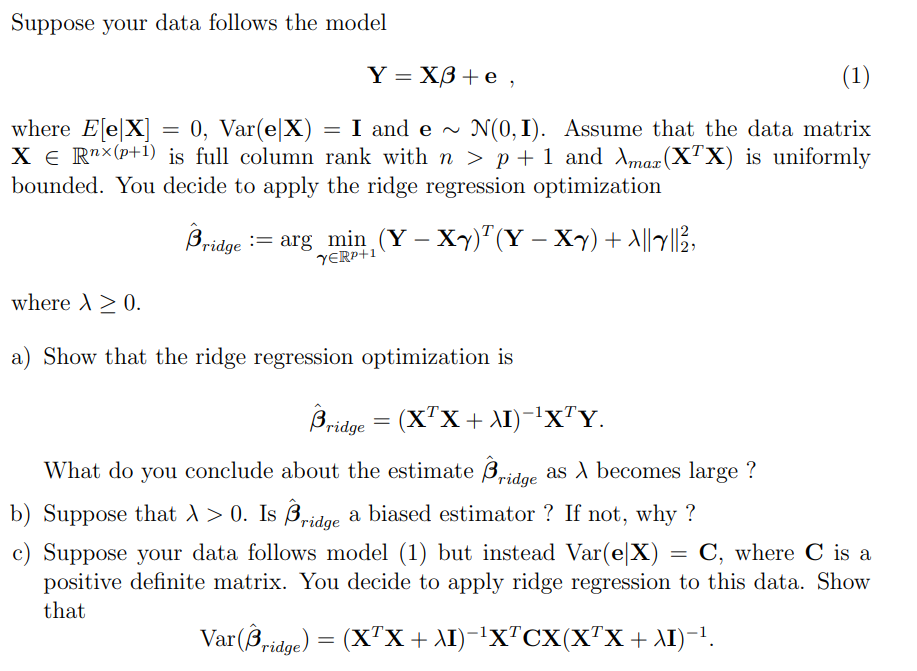

Question: Suppose your data follows the model

$$Y = X\beta + \varepsilon, \tag{1}$$

where $E[\varepsilon \mid X] = 0$, $\operatorname{Var}(\varepsilon \mid X) = I$ and $\varepsilon \sim N(0, I)$. Assume that the data matrix $X \in \mathbb{R}^{n \times (p+1)}$ is full column rank with $n > p + 1$ and $\lambda_{\max}(X^T X)$ is uniformly bounded. You decide to apply the ridge regression optimization

$$\hat\beta_{\text{ridge}} = \arg\min_{\gamma \in \mathbb{R}^{p+1}} \; (Y - X\gamma)^T (Y - X\gamma) + \lambda \|\gamma\|_2^2,$$

where $\lambda > 0$.

a) Show that the solution of the ridge regression optimization is $\hat\beta_{\text{ridge}} = (X^T X + \lambda I)^{-1} X^T Y$. What do you conclude about the estimate $\hat\beta_{\text{ridge}}$ as $\lambda$ becomes large?

b) Suppose that $\lambda > 0$. Is $\hat\beta_{\text{ridge}}$ a biased estimator? If not, why?

c) Suppose your data follows model (1) but instead $\operatorname{Var}(\varepsilon \mid X) = C$, where $C$ is a positive definite matrix. You decide to apply ridge regression to this data. Show that $\operatorname{Var}(\hat\beta_{\text{ridge}}) = (X^T X + \lambda I)^{-1} X^T C X (X^T X + \lambda I)^{-1}$.
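The three parts can be approached with the worked sketch below. It is an outline under the stated assumptions, not the official solution. It writes $\hat\beta_{\text{ridge}}$ for the ridge estimator, $\lambda$ for the penalty weight, and $\beta$ for the true coefficient vector in model (1). The objective $f$ and the shorthand $A$ are notation introduced here for convenience.

```latex
% (a) First-order condition. The Hessian 2(X^T X + \lambda I) is positive
%     definite for \lambda > 0, so the stationary point is the unique minimizer.
\begin{align*}
f(\gamma) &= (Y - X\gamma)^T (Y - X\gamma) + \lambda\, \gamma^T \gamma, \\
\nabla f(\gamma) &= -2 X^T (Y - X\gamma) + 2\lambda \gamma = 0
  \;\Longrightarrow\; (X^T X + \lambda I)\,\gamma = X^T Y, \\
\hat\beta_{\text{ridge}} &= (X^T X + \lambda I)^{-1} X^T Y .
\end{align*}

% Large \lambda: every eigenvalue of (X^T X + \lambda I)^{-1} is at most 1/\lambda, so
\[
\|\hat\beta_{\text{ridge}}\|_2 \le \frac{\|X^T Y\|_2}{\lambda} \longrightarrow 0
\quad \text{as } \lambda \to \infty .
\]

% (b) Conditional mean, using E[Y \mid X] = X\beta:
\begin{align*}
E[\hat\beta_{\text{ridge}} \mid X]
  &= (X^T X + \lambda I)^{-1} X^T X \beta
   = \beta - \lambda (X^T X + \lambda I)^{-1} \beta ,
\end{align*}
% so the bias is -\lambda (X^T X + \lambda I)^{-1} \beta, which is nonzero
% whenever \lambda > 0 and \beta \neq 0.

% (c) Let A = (X^T X + \lambda I)^{-1}, which is symmetric.
%     With Var(Y \mid X) = Var(\varepsilon \mid X) = C:
\begin{align*}
\operatorname{Var}(\hat\beta_{\text{ridge}} \mid X)
  &= A X^T \operatorname{Var}(Y \mid X)\, X A^T
   = (X^T X + \lambda I)^{-1} X^T C X (X^T X + \lambda I)^{-1} .
\end{align*}
```

Setting $C = I$ in part (c) recovers the variance under the original model, $(X^T X + \lambda I)^{-1} X^T X (X^T X + \lambda I)^{-1}$.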
