Question: Task 1 . We have learned that a bagged model has a 2 m error guarantee based on an assumption that each base model suffers

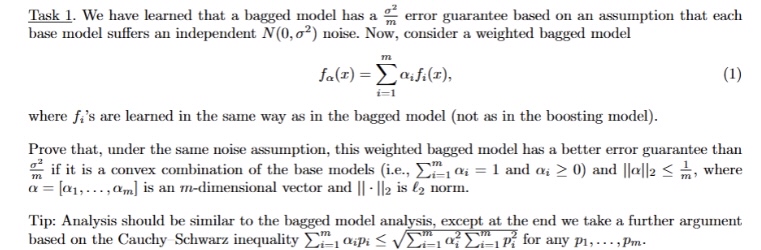

Task We have learned that a bagged model has a error guarantee based on an assumption that each base model suffers an independent noise. Now, consider a weighted bagged model

where s are learned in the same way as in the bagged model not as in the boosting model

Prove that, under the same noise assumption, this weighted bagged model has a better error guarantee than if it is a convex combination of the base models ie and and where dots, is an dimensional vector and is norm.

Tip: Analysis should be similar to the bagged model analysis, except at the end we take a further argument based on the Cauchy Schwarz inequality for any dots,

Please show by hand

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock