Question: Let us consider a binary classification problem with a training data set $\{(x_i, y_i)\}_{i \in [n]}$, where $x_i \in \mathbb{R}^d$

thank you for the help

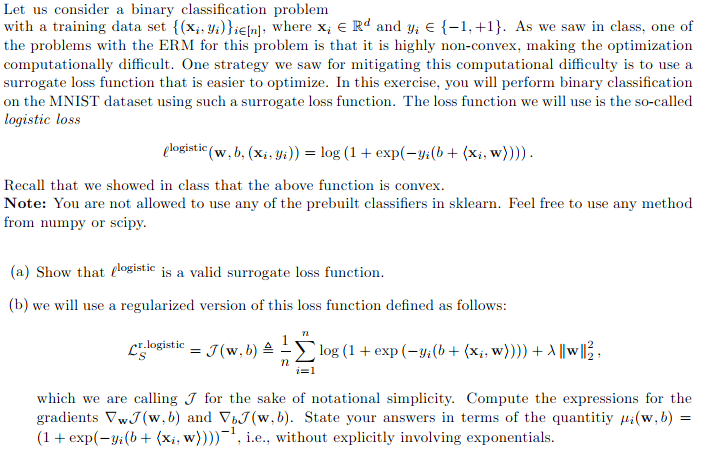

Let us consider a binary classification problem with a training data set $\{(x_i, y_i)\}_{i \in [n]}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \{-1, +1\}$. As we saw in class, one of the problems with the ERM for this problem is that it is highly non-convex, making the optimization computationally difficult. One strategy we saw for mitigating this computational difficulty is to use a surrogate loss function that is easier to optimize. In this exercise, you will perform binary classification on the MNIST dataset using such a surrogate loss function. The loss function we will use is the so-called logistic loss

$$\ell_{\text{logistic}}(w, b, (x_i, y_i)) = \log\left(1 + \exp\left(-y_i (b + \langle x_i, w \rangle)\right)\right).$$

Recall that we showed in class that the above function is convex.

Note: You are not allowed to use any of the prebuilt classifiers in sklearn. Feel free to use any method from numpy or scipy.

(a) Show that $\ell_{\text{logistic}}$ is a valid surrogate loss function.

(b) We will use a regularized version of this loss function, defined as follows:

$$\ell_{\text{r-logistic}} = J(w, b) = \frac{1}{n} \sum_{i=1}^{n} \log\left(1 + \exp\left(-y_i (b + \langle x_i, w \rangle)\right)\right) + \lambda \|w\|_2^2,$$

which we are calling $J$ for the sake of notational simplicity. Compute the expressions for the gradients $\nabla_w J(w, b)$ and $\nabla_b J(w, b)$. State your answers in terms of the quantity $p_i(w, b) = \left(1 + \exp\left(-y_i (b + \langle x_i, w \rangle)\right)\right)^{-1}$, i.e., without explicitly involving exponentials.
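For part (b), here is a minimal NumPy sketch that may help for checking a hand derivation. It assumes the regularized objective is the average logistic loss over $n$ samples plus $\lambda \|w\|_2^2$. Under that assumption, differentiating $\log(1 + e^{-z})$ with respect to $z$ gives $-(1 - p)$ with $p = (1 + e^{-z})^{-1}$, so the candidate gradients are $\nabla_w J = -\frac{1}{n} \sum_i (1 - p_i)\, y_i x_i + 2\lambda w$ and $\nabla_b J = -\frac{1}{n} \sum_i (1 - p_i)\, y_i$. The function names (`p_i`, `J`, `grad_J`, `finite_difference_grad`) and the synthetic data are illustrative choices, not part of the exercise; the code compares the closed-form gradients against central finite differences.

```python
import numpy as np

def p_i(w, b, X, y):
    """p_i(w, b) = 1 / (1 + exp(-y_i (b + <x_i, w>))) for every sample."""
    z = y * (X @ w + b)
    # 0.5 * (1 + tanh(z / 2)) equals the sigmoid of z and does not overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def J(w, b, X, y, lam):
    """Average logistic loss plus lam * ||w||_2^2 (assumed form of the objective)."""
    z = y * (X @ w + b)
    # logaddexp(0, -z) computes log(1 + exp(-z)) in a numerically stable way.
    return np.mean(np.logaddexp(0.0, -z)) + lam * np.dot(w, w)

def grad_J(w, b, X, y, lam):
    """Closed-form gradients written only in terms of p_i, with no explicit exponentials."""
    r = (1.0 - p_i(w, b, X, y)) * y          # shape (n,)
    grad_w = -(X.T @ r) / len(y) + 2.0 * lam * w
    grad_b = -np.mean(r)
    return grad_w, grad_b

def finite_difference_grad(w, b, X, y, lam, eps=1e-6):
    """Central-difference approximation of the gradients, used only as a check."""
    g_w = np.zeros_like(w)
    for j in range(w.size):
        e = np.zeros_like(w)
        e[j] = eps
        g_w[j] = (J(w + e, b, X, y, lam) - J(w - e, b, X, y, lam)) / (2.0 * eps)
    g_b = (J(w, b + eps, X, y, lam) - J(w, b - eps, X, y, lam)) / (2.0 * eps)
    return g_w, g_b

# Synthetic stand-in data (hypothetical; the exercise itself uses MNIST).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 4))
y = rng.choice([-1.0, 1.0], size=50)
w = rng.normal(size=4)
b = 0.3
lam = 0.1

gw, gb = grad_J(w, b, X, y, lam)
gw_fd, gb_fd = finite_difference_grad(w, b, X, y, lam)
print("max |grad_w difference|:", np.max(np.abs(gw - gw_fd)))
print("|grad_b difference|:", abs(gb - gb_fd))
```

If the two printed differences are tiny, the closed-form expressions agree with the numerical gradient on this sample. The same `J` and `grad_J` could then be passed to a plain gradient-descent loop or to a `scipy.optimize` routine, which the exercise permits.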
