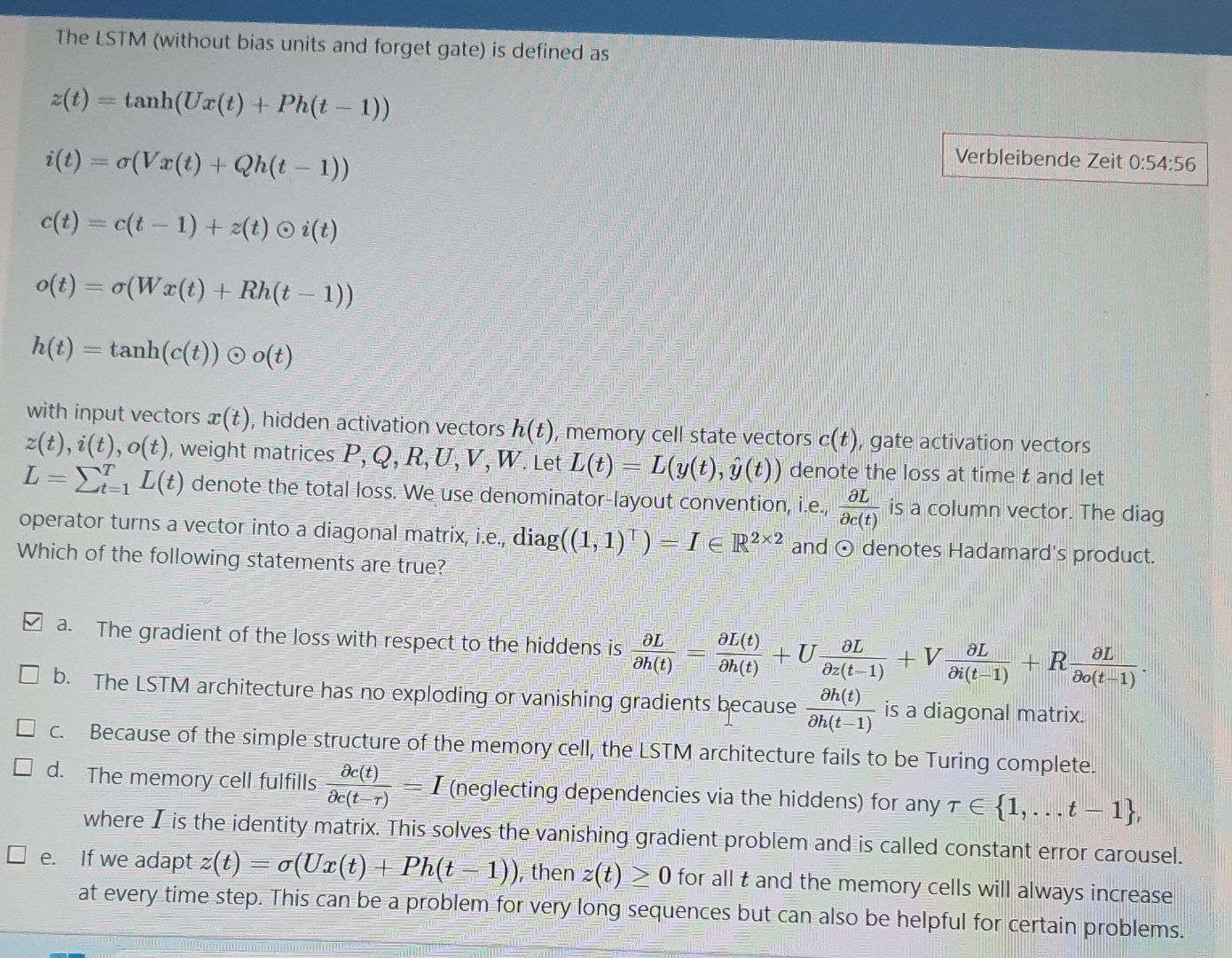

Question: The LSTM (without bias units and forget gate) is defined as z(t) = tanh(U x

The LSTM without bias units and forget gate is defined as


with input vectors, hidden activation vectors, memory cell state vectors, gate activation vectors, and weight matrices. A loss is defined at each time step $t$, together with a total loss. We use the denominator-layout convention, i.e. the gradient of a scalar with respect to a vector is a column vector. The diag operator turns a vector into a diagonal matrix, and $\odot$ denotes the Hadamard (element-wise) product.

Which of the following statements are true?

a) The gradient of the loss with respect to the hiddens is

b) The LSTM architecture has no exploding or vanishing gradients because is a diagonal matrix.

c) Because of the simple structure of the memory cell, the LSTM architecture fails to be Turing complete.

d) Neglecting dependencies via the hiddens, the Jacobian of the memory cell state at any time step $t$ with respect to the previous memory cell state is $I$, where $I$ is the identity matrix. This solves the vanishing gradient problem and is called the constant error carousel.

e) If we adapt then for all and the memory cells will always increase at every time step. This can be a problem for very long sequences but can also be helpful for certain problems.
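
For statement d, a small numerical check may help build intuition. The sketch below assumes the usual cell update of an LSTM without forget gate, c(t) = c(t-1) + i(t) ⊙ z(t); the defining equations are not reproduced above, so this update, the helper names `cell_update` and `jacobian_wrt_c_prev`, and the example values are all assumptions made for illustration. The gate and cell-input values are held fixed, which corresponds to neglecting dependencies via the hiddens.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def cell_update(c_prev, i_gate, z):
    # Assumed update of a forget-gate-free LSTM: c(t) = c(t-1) + i(t) * z(t),
    # applied element-wise (Hadamard product).
    return [c + i * zz for c, i, zz in zip(c_prev, i_gate, z)]


def jacobian_wrt_c_prev(c_prev, i_gate, z, eps=1e-6):
    # Central finite differences of c(t) with respect to c(t-1).
    # i_gate and z are treated as constants, i.e. their dependence on
    # earlier states via the hidden activations is neglected.
    n = len(c_prev)
    jac = [[0.0] * n for _ in range(n)]
    for k in range(n):
        plus = list(c_prev)
        minus = list(c_prev)
        plus[k] += eps
        minus[k] -= eps
        f_plus = cell_update(plus, i_gate, z)
        f_minus = cell_update(minus, i_gate, z)
        for r in range(n):
            jac[r][k] = (f_plus[r] - f_minus[r]) / (2 * eps)
    return jac


# Arbitrary example values (hypothetical, chosen only for the check).
c_prev = [0.3, -1.2, 2.0]
i_gate = [sigmoid(v) for v in (0.5, -0.1, 1.5)]
z = [math.tanh(v) for v in (0.2, -0.7, 1.1)]

J = jacobian_wrt_c_prev(c_prev, i_gate, z)
for row in J:
    print([round(x, 6) for x in row])
```

Under these assumptions the numerical Jacobian comes out as the identity matrix up to floating-point error, regardless of the gate values, because c(t-1) enters the update only through the additive term. Once the gates are allowed to depend on the previous hidden activations, additional terms appear and the Jacobian is no longer exactly the identity.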
