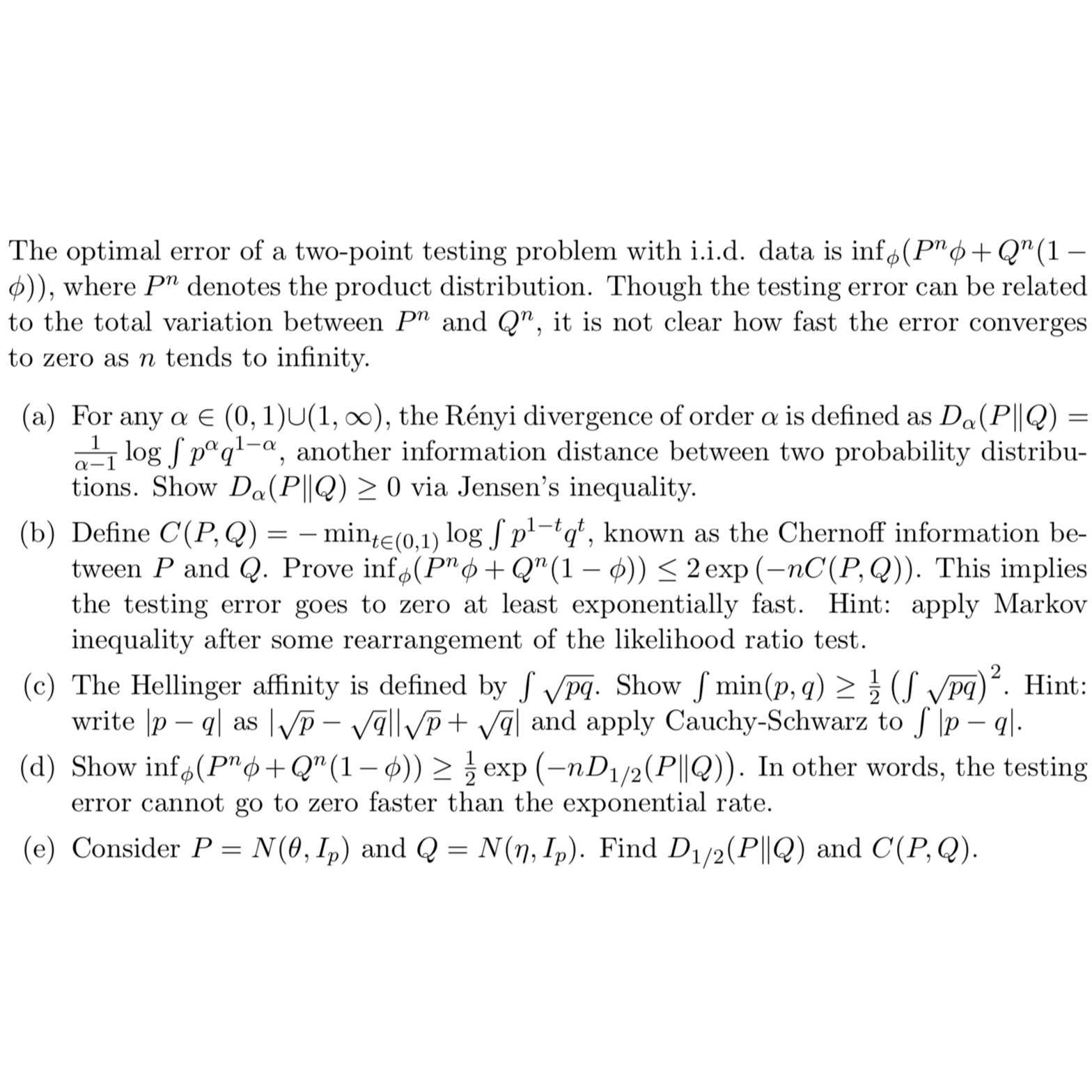

Question: The optimal error of a two-point testing problem with i.i.d. data is $\inf_{\phi}\left(P^{n}\phi + Q^{n}(1-\phi)\right)$, where $P^{n}$ denotes the product distribution. Though the testing error can be related to the total variation between $P^{n}$ and $Q^{n}$, it is not clear how fast the error converges to zero as $n$ tends to infinity.

(a) For any $\alpha \in (0,1)\cup(1,\infty)$, the Rényi divergence of order $\alpha$ is defined as $D_{\alpha}(P\|Q) = \frac{1}{\alpha-1}\log\int p^{\alpha}q^{1-\alpha}$, another information distance between two probability distributions. Show $D_{\alpha}(P\|Q) \ge 0$ via Jensen's inequality.

(b) Define $C(P,Q) = -\min_{t\in(0,1)}\log\int p^{1-t}q^{t}$, known as the Chernoff information between $P$ and $Q$. Prove $\inf_{\phi}\left(P^{n}\phi + Q^{n}(1-\phi)\right) \le 2\exp\left(-nC(P,Q)\right)$. This implies the testing error goes to zero at least exponentially fast. Hint: apply Markov's inequality after some rearrangement of the likelihood ratio test.

(c) The Hellinger affinity is defined by $\int\sqrt{pq}$. Show $\int\min(p,q) \ge \frac{1}{2}\left(\int\sqrt{pq}\right)^{2}$. Hint: write $|p-q|$ as $|\sqrt{p}-\sqrt{q}|\,|\sqrt{p}+\sqrt{q}|$ and apply Cauchy-Schwarz to $\int|p-q|$.

(d) Show $\inf_{\phi}\left(P^{n}\phi + Q^{n}(1-\phi)\right) \ge \frac{1}{2}\exp\left(-nD_{1/2}(P\|Q)\right)$. In other words, the testing error cannot go to zero faster than the exponential rate.

(e) Consider $P = N(\theta, I_{p})$ and $Q = N(\eta, I_{p})$. Find $D_{1/2}(P\|Q)$ and $C(P,Q)$.
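As a sanity check for parts (b) through (e), the following minimal Python sketch evaluates the quantities numerically in the one-dimensional case $P = N(\theta, 1)$, $Q = N(\eta, 1)$. It is not a proof and not part of the original problem: the function names, the integration range, the step count, and the grid over $t$ are all illustrative assumptions. For this Gaussian pair the optimal error has the known closed form $2\Phi(-\sqrt{n}\,|\theta-\eta|/2)$, which the sketch uses to compare against the two exponential bounds.

```python
import math


def normal_pdf(x, mean):
    """Density of N(mean, 1) at x (unit variance is an assumption of this sketch)."""
    return math.exp(-0.5 * (x - mean) ** 2) / math.sqrt(2.0 * math.pi)


def integrate(f, lo=-20.0, hi=20.0, steps=4000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))


def log_affinity(t, theta, eta):
    """log of the integral of p^(1-t) * q^t for p = N(theta, 1), q = N(eta, 1)."""
    return math.log(integrate(
        lambda x: normal_pdf(x, theta) ** (1.0 - t) * normal_pdf(x, eta) ** t))


def renyi_half(theta, eta):
    """D_{1/2}(P||Q) = (1 / (1/2 - 1)) * log of the Hellinger affinity."""
    return -2.0 * log_affinity(0.5, theta, eta)


def chernoff(theta, eta, grid=99):
    """C(P,Q) = -min over t in (0,1), approximated on the grid t = k / (grid + 1)."""
    return -min(log_affinity(k / (grid + 1), theta, eta)
                for k in range(1, grid + 1))


def min_overlap(theta, eta):
    """Integral of min(p, q), i.e. the optimal error for a single observation."""
    return integrate(lambda x: min(normal_pdf(x, theta), normal_pdf(x, eta)))


def optimal_error(n, theta, eta):
    """Closed form 2 * Phi(-sqrt(n) * |theta - eta| / 2) for n i.i.d. observations."""
    a = math.sqrt(n) * abs(theta - eta) / 2.0
    return math.erfc(a / math.sqrt(2.0))


if __name__ == "__main__":
    theta, eta = 0.0, 1.5  # arbitrary example means
    d_half = renyi_half(theta, eta)
    c_info = chernoff(theta, eta)
    print("D_1/2 (numeric):", d_half, " (theta-eta)^2/4:", (theta - eta) ** 2 / 4)
    print("C     (numeric):", c_info, " (theta-eta)^2/8:", (theta - eta) ** 2 / 8)
    for n in (1, 5, 20):
        lower = 0.5 * math.exp(-n * d_half)   # bound from part (d)
        upper = 2.0 * math.exp(-n * c_info)   # bound from part (b)
        print(n, lower, optimal_error(n, theta, eta), upper)
```

In this unit-variance example the numerical values agree with $(\theta-\eta)^2/4$ for $D_{1/2}$ and $(\theta-\eta)^2/8$ for $C$, and the closed-form error stays between the two bounds for each $n$ tried. Because the coordinates are independent, the $p$-dimensional case should replace $(\theta-\eta)^2$ by $\|\theta-\eta\|^2$, which still needs to be derived by hand for part (e).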
