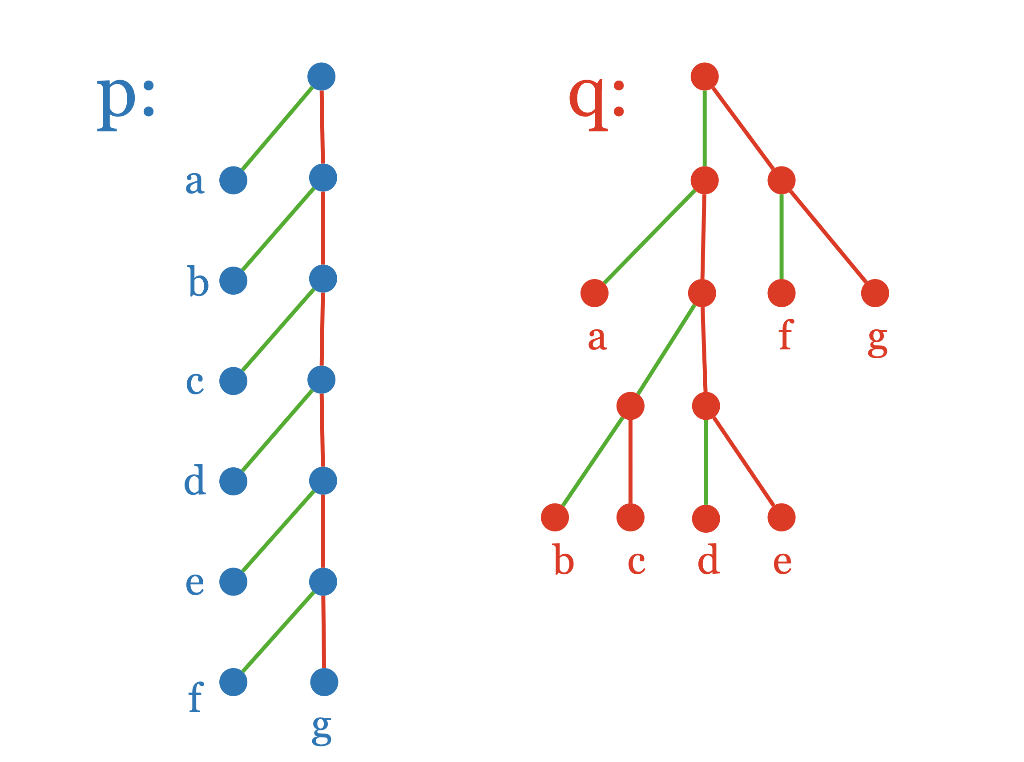

Question: These describe two different probability distributions p and q on the outcomes a through g. Work out the probabilities of the outcomes, and compute the following values. Make sure to use base-2 logarithms.
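For reference, these are the standard definitions of the requested quantities, measured in bits because the logarithms are base 2. Here x ranges over the outcomes a through g, and any term with p(x) = 0 is taken as 0. H(p, q) is the quantity usually called the cross-entropy; "relative entropy" is more commonly another name for the KL divergence. Swapping p and q in each line gives H(q), H(q, p) and KL(q, p).

$$
\begin{aligned}
H(p) &= -\sum_{x} p(x)\,\log_2 p(x) \\
H(p, q) &= -\sum_{x} p(x)\,\log_2 q(x) \\
\mathrm{KL}(p, q) &= \sum_{x} p(x)\,\log_2 \frac{p(x)}{q(x)} = H(p, q) - H(p)
\end{aligned}
$$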

The entropies of p and q are [ Select ] ["p: -1.97, q: -1.5", "p: 1.97, q: 1.5", "p: 1.97, q: 2.5", "p: -1.97, q: -2.5"].

The cross-entropy H(p, q) is [ Select ] ["-2.94", "4.12", "2.94", "-4.12"].

The cross-entropy H(q, p) is [ Select ] ["-0.969", "0.969", "4.12", "-4.12"].

The Kullback-Leibler divergence KL(p, q) is [ Select ] ["0.969", "-0.969", "1.62", "-1.62"].

The Kullback-Leibler divergence KL(q, p) is [ Select ] ["0.969", "2.5", "1.97", "1.62"].
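The answer choices can also be checked against each other. Entropy, cross-entropy and KL divergence are never negative, and each cross-entropy must equal the matching entropy plus KL divergence (for example H(p, q) = H(p) + KL(p, q)). The sketch below is a hypothetical helper, not part of the original question: it enumerates every combination of the listed choices and keeps those satisfying both rules. The tolerance `TOL = 0.02` is an assumption meant to absorb rounding in the choices.

```python
from itertools import product

# Answer choices copied from the question.
entropy_opts = [(-1.97, -1.5), (1.97, 1.5), (1.97, 2.5), (-1.97, -2.5)]  # (H(p), H(q))
h_pq_opts = [-2.94, 4.12, 2.94, -4.12]   # H(p, q)
h_qp_opts = [-0.969, 0.969, 4.12, -4.12]  # H(q, p)
kl_pq_opts = [0.969, -0.969, 1.62, -1.62]  # KL(p, q)
kl_qp_opts = [0.969, 2.5, 1.97, 1.62]      # KL(q, p)

TOL = 0.02  # assumed allowance for choices rounded to 2-3 decimals


def consistent(hp, hq, h_pq, h_qp, kl_pq, kl_qp):
    # None of these information quantities can be negative.
    if min(hp, hq, h_pq, h_qp, kl_pq, kl_qp) < 0:
        return False
    # Cross-entropy decomposes as entropy plus KL divergence.
    return (abs(h_pq - (hp + kl_pq)) <= TOL
            and abs(h_qp - (hq + kl_qp)) <= TOL)


survivors = [
    (hp, hq, h_pq, h_qp, kl_pq, kl_qp)
    for (hp, hq), h_pq, h_qp, kl_pq, kl_qp in product(
        entropy_opts, h_pq_opts, h_qp_opts, kl_pq_opts, kl_qp_opts)
    if consistent(hp, hq, h_pq, h_qp, kl_pq, kl_qp)
]
print(survivors)
```

Exactly one combination survives: H(p) = 1.97, H(q) = 2.5, H(p, q) = 2.94, H(q, p) = 4.12, KL(p, q) = 0.969 and KL(q, p) = 1.62. This only shows the choices are mutually consistent; the probabilities still have to be read from the figure to confirm them.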

And can you explain how it was done?
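The computation itself is a direct application of the definitions: read each outcome's probability off the figure, then sum the per-outcome terms. The lists `p` and `q` below are hypothetical placeholders chosen so the arithmetic is exact in binary; they are not the figure's values. The function names `entropy`, `cross_entropy` and `kl_divergence` are illustrative and do not come from any library.

```python
import math


def entropy(p):
    """Shannon entropy H(p) in bits; outcomes with p_i = 0 contribute nothing."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """Cross-entropy H(p, q) = -sum_i p_i log2 q_i, in bits."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf  # p puts mass where q has none
            total -= pi * math.log2(qi)
    return total


def kl_divergence(p, q):
    """KL(p, q) = sum_i p_i log2(p_i / q_i), in bits."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf  # divergence is infinite in this case
            total += pi * math.log2(pi / qi)
    return total


# Hypothetical distributions over outcomes a..g (NOT the figure's values).
p = [1/2, 1/8, 1/8, 1/16, 1/16, 1/16, 1/16]
q = [1/8, 1/8, 1/8, 1/8, 1/4, 1/8, 1/8]

for name, value in [
    ("H(p)", entropy(p)),
    ("H(q)", entropy(q)),
    ("H(p, q)", cross_entropy(p, q)),
    ("H(q, p)", cross_entropy(q, p)),
    ("KL(p, q)", kl_divergence(p, q)),
    ("KL(q, p)", kl_divergence(q, p)),
]:
    print(f"{name} = {value:.4f}")
```

With these placeholder lists the script prints H(p) = 2.2500, H(q) = 2.7500, H(p, q) = 2.9375, H(q, p) = 3.3750, KL(p, q) = 0.6875 and KL(q, p) = 0.6250. The two KL values differ, which illustrates that the divergence is not symmetric, and each one equals the matching cross-entropy minus the entropy.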

