Question:

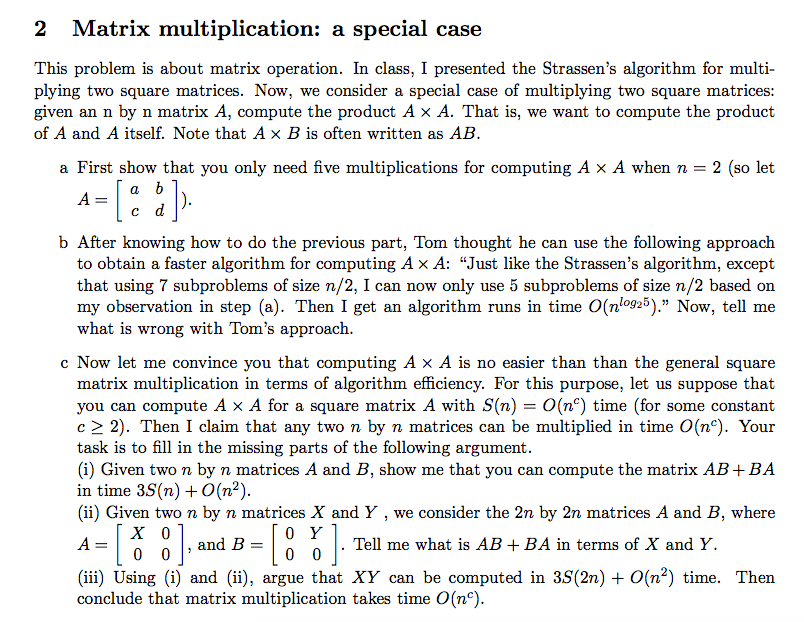

This problem is about matrix operations. In class, I presented Strassen's algorithm for multiplying two square matrices. Now we consider a special case of multiplying two square matrices: given an $n \times n$ matrix $A$, compute the product $A \times A$, that is, the product of $A$ with itself. (Note that $A \times B$ is often written as $AB$.)

(a) First, show that you need only five multiplications to compute $A \times A$ when $n = 2$ (so let $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$).

(b) After learning how to do part (a), Tom thought he could use the following approach to obtain a faster algorithm for computing $A \times A$: "Just like Strassen's algorithm, except that instead of using 7 subproblems of size $n/2$, I can now use only 5 subproblems of size $n/2$, based on my observation in part (a). Then I get an algorithm that runs in time $O(n^{\log_2 5})$." Now, tell me what is wrong with Tom's approach.

(c) Now let me convince you that computing $A \times A$ is no easier than general square matrix multiplication in terms of algorithmic efficiency. For this purpose, suppose that you can compute $A \times A$ for an $n \times n$ matrix $A$ in $S(n) = O(n^c)$ time (for some constant $c \geq 2$). Then I claim that any two $n \times n$ matrices can be multiplied in time $O(n^c)$. Your task is to fill in the missing parts of the following argument.

(i) Given two $n \times n$ matrices $A$ and $B$, show that you can compute the matrix $AB + BA$ in time $3S(n) + O(n^2)$.

(ii) Given two $n \times n$ matrices $X$ and $Y$, consider the $2n \times 2n$ matrices $A$ and $B$, where $A = \begin{pmatrix} X & 0 \\ 0 & 0 \end{pmatrix}$ and $B = \begin{pmatrix} 0 & Y \\ 0 & 0 \end{pmatrix}$. Tell me what $AB + BA$ is in terms of $X$ and $Y$.

(iii) Using (i) and (ii), argue that $XY$ can be computed in $3S(2n) + O(n^2)$ time. Then conclude that matrix multiplication takes time $O(n^c)$.
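The ideas behind parts (a) and (c) can be checked numerically. The Python sketch below is one possible illustration, not a reference solution. The function names `square_2x2`, `naive_square`, and `multiply_via_squaring` are hypothetical, and `naive_square` only stands in for whatever $S(n)$-time squaring routine is assumed to exist. It relies on two standard identities: $(A + B)^2 - A^2 - B^2 = AB + BA$, and, for the block matrices in part (ii), $AB + BA = \begin{pmatrix} 0 & XY \\ 0 & 0 \end{pmatrix}$.

```python
from typing import Callable, List

Matrix = List[List[int]]


def square_2x2(a: int, b: int, c: int, d: int) -> Matrix:
    """Square [[a, b], [c, d]] using five scalar multiplications."""
    m1 = a * a
    m2 = d * d
    m3 = b * c        # reused in both diagonal entries; assumes b*c == c*b
    m4 = b * (a + d)  # equals a*b + b*d only when entries commute
    m5 = c * (a + d)  # equals c*a + d*c only when entries commute
    return [[m1 + m3, m4], [m5, m3 + m2]]


def naive_square(m: Matrix) -> Matrix:
    """Placeholder for the assumed S(n)-time squaring routine."""
    n = len(m)
    return [[sum(m[i][k] * m[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def sub(p: Matrix, q: Matrix) -> Matrix:
    """Entrywise difference, O(n^2) time."""
    return [[x - y for x, y in zip(rp, rq)] for rp, rq in zip(p, q)]


def add(p: Matrix, q: Matrix) -> Matrix:
    """Entrywise sum, O(n^2) time."""
    return [[x + y for x, y in zip(rp, rq)] for rp, rq in zip(p, q)]


def multiply_via_squaring(x: Matrix, y: Matrix,
                          square: Callable[[Matrix], Matrix] = naive_square
                          ) -> Matrix:
    """Compute XY with three squarings of 2n-by-2n matrices."""
    n = len(x)
    zeros = [0] * n
    # A = [[X, 0], [0, 0]] and B = [[0, Y], [0, 0]] as 2n-by-2n matrices.
    a = [row + zeros for row in x] + [[0] * (2 * n) for _ in range(n)]
    b = [zeros + row for row in y] + [[0] * (2 * n) for _ in range(n)]
    # AB + BA = (A + B)^2 - A^2 - B^2: three squarings plus O(n^2) work.
    anti = sub(sub(square(add(a, b)), square(a)), square(b))
    # The top-right n-by-n block of AB + BA is XY.
    return [row[n:] for row in anti[:n]]
```

Note that `square_2x2` reuses `b * c` and factors out `a + d`, which is valid for scalars but not for matrix blocks, since block products generally do not commute. That observation is relevant to part (b).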
