Question: This question is related to machine learning. 3. Computational graph (no code involved)

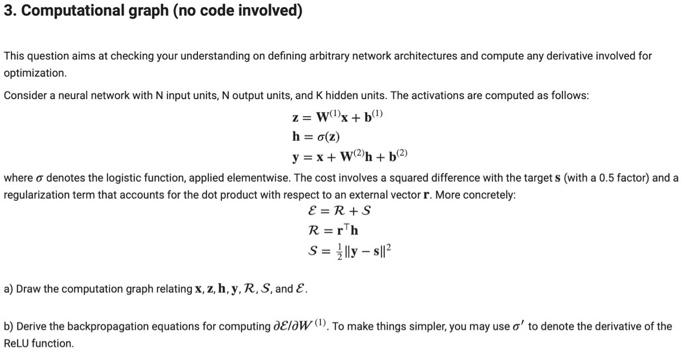

3. Computational graph (no code involved)

This question checks your understanding of defining arbitrary network architectures and computing the derivatives needed for optimization.

Consider a neural network with $N$ input units, $N$ output units, and $K$ hidden units. The activations are computed as follows:

$$z = W^{(1)} x + b^{(1)}$$

$$h = \sigma(z)$$

$$y = x + W^{(2)} h + b^{(2)}$$

where $\sigma$ denotes the logistic function, applied elementwise. The cost involves a squared difference with the target $s$ (with a 0.5 factor) and a regularization term given by the dot product of $h$ with an external vector $r$. More concretely:

$$E = R + S$$

$$R = r^\top h$$

$$S = \frac{1}{2} \lVert y - s \rVert^2$$

a) Draw the computation graph relating $x$, $z$, $h$, $y$, $R$, $S$, and $E$.

b) Derive the backpropagation equations for computing $\partial E / \partial W^{(1)}$. To make things simpler, you may use $\sigma'$ to denote the derivative of the activation function $\sigma$.
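The question itself asks for no code, but a small numerical check can help confirm a hand derivation. The sketch below assumes the gradient asked for in part (b) is $\partial E / \partial W^{(1)}$ and that $\sigma'$ is the logistic derivative, $\sigma'(z) = \sigma(z)(1 - \sigma(z))$. For part (a), the graph has edges $x \to z \to h \to y \to S \to E$, a skip edge $x \to y$, and a branch $h \to R \to E$. Writing $\bar{v}$ for $\partial E / \partial v$, one way to write the backward pass is:

$$\bar{y} = y - s, \qquad \bar{h} = r + W^{(2)\top} \bar{y}, \qquad \bar{z} = \bar{h} \circ \sigma'(z), \qquad \overline{W^{(1)}} = \bar{z}\, x^\top$$

The helper names (`forward`, `grad_W1`, `numeric_grad_W1`, `random_problem`, `max_abs_diff`) are illustrative only and are not part of the original question. Only the standard library is used.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(M, v):
    """Multiply a matrix (sequence of rows) by a vector."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def forward(x, W1, b1, W2, b2, r, s):
    """Forward pass; W1 stands for W^(1) (K x N), W2 for W^(2) (N x K)."""
    z = [a + b for a, b in zip(matvec(W1, x), b1)]
    h = [sigmoid(v) for v in z]
    y = [xi + a + b for xi, a, b in zip(x, matvec(W2, h), b2)]
    R = sum(ri * hi for ri, hi in zip(r, h))
    S = 0.5 * sum((yi - si) ** 2 for yi, si in zip(y, s))
    return z, h, y, R + S


def grad_W1(x, W1, b1, W2, b2, r, s):
    """Backward pass for dE/dW^(1), following the equations in the lead-in."""
    _, h, y, _ = forward(x, W1, b1, W2, b2, r, s)
    y_bar = [yi - si for yi, si in zip(y, s)]                 # from S
    W2T = list(zip(*W2))                                      # K x N transpose
    h_bar = [ri + a for ri, a in zip(r, matvec(W2T, y_bar))]  # from R and y
    # Assumption: sigma is the logistic function, so sigma'(z) = h * (1 - h).
    z_bar = [hb * hi * (1.0 - hi) for hb, hi in zip(h_bar, h)]
    return [[zb * xi for xi in x] for zb in z_bar]            # outer product


def numeric_grad_W1(x, W1, b1, W2, b2, r, s, eps=1e-6):
    """Central finite differences on each entry of W1, for comparison."""
    G = []
    for i in range(len(W1)):
        row = []
        for j in range(len(W1[0])):
            orig = W1[i][j]
            W1[i][j] = orig + eps
            e_plus = forward(x, W1, b1, W2, b2, r, s)[3]
            W1[i][j] = orig - eps
            e_minus = forward(x, W1, b1, W2, b2, r, s)[3]
            W1[i][j] = orig  # restore the perturbed entry
            row.append((e_plus - e_minus) / (2.0 * eps))
        G.append(row)
    return G


def random_problem(N=3, K=4, seed=0):
    """Hypothetical small instance with random parameters."""
    rng = random.Random(seed)

    def vec(n):
        return [rng.uniform(-1.0, 1.0) for _ in range(n)]

    def mat(m, n):
        return [vec(n) for _ in range(m)]

    return dict(x=vec(N), W1=mat(K, N), b1=vec(K),
                W2=mat(N, K), b2=vec(N), r=vec(K), s=vec(N))


def max_abs_diff(A, B):
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))


if __name__ == "__main__":
    p = random_problem()
    print("max |analytic - numeric| =",
          max_abs_diff(grad_W1(**p), numeric_grad_W1(**p)))
```

If the derivation is right, the printed difference should be very small (dominated by finite-difference error). Dropping the `r` term in `h_bar` or the skip connection in `forward` is a quick way to see the check fail.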
