Question:

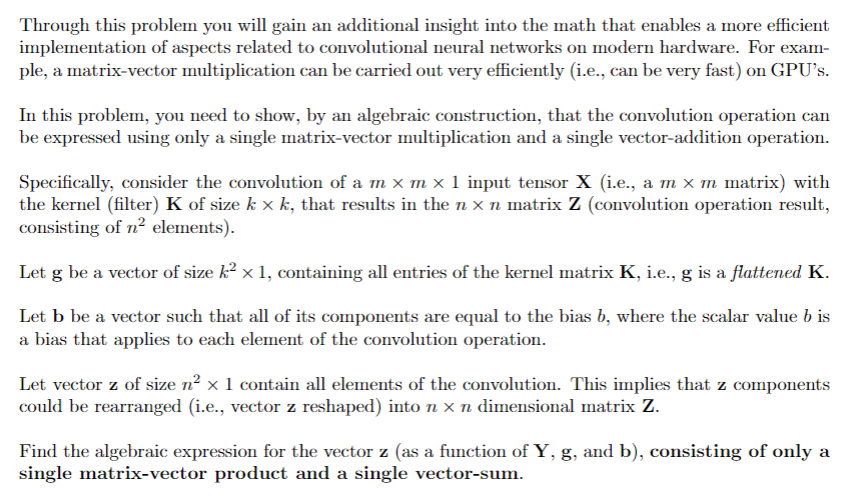

Through this problem you will gain additional insight into the math that enables a more efficient implementation of aspects of convolutional neural networks on modern hardware. For example, a matrix-vector multiplication can be carried out very efficiently, i.e., very fast, on GPUs.

In this problem, you need to show, by an algebraic construction, that the convolution operation can be expressed using only a single matrix-vector multiplication and a single vector-addition operation.
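The sketch below shows why this is possible. The notation is an assumption and does not come from the problem: an $H \times W$ input $X$ with entries $x_{rc}$, a $k_h \times k_w$ kernel $K$, stride 1, no padding, and the usual CNN convention of not flipping the kernel (strictly a cross-correlation). Each output element is the dot product of one input window with the kernel, plus the bias $b$. Stacking one flattened window per output position as a row of a patch matrix $P$ therefore turns the whole convolution into one matrix-vector product and one vector sum. Here $\mathbf{1}$ is the all-ones vector:

```latex
\begin{aligned}
y_{ij} &= b + \sum_{m=0}^{k_h-1}\sum_{n=0}^{k_w-1} x_{i+m,\,j+n}\,K_{mn},
  \qquad 0 \le i \le H-k_h,\ \ 0 \le j \le W-k_w, \\
P_{p,q} &= x_{i+m,\,j+n}, \qquad p = i\,(W-k_w+1) + j,\quad q = m\,k_w + n, \\
\mathbf{k}_q &= K_{mn}, \qquad \mathbf{b} = b\,\mathbf{1}, \\
\mathbf{y} &= P\,\mathbf{k} + \mathbf{b},
  \qquad P \in \mathbb{R}^{(H-k_h+1)(W-k_w+1) \times k_h k_w}.
\end{aligned}
```

If the kernel is meant to be flipped (the textbook definition of convolution), the same construction applies with $K$ rotated by 180° before flattening.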

Specifically, consider the convolution of an input tensor, i.e., a matrix, with a kernel (filter) of a given size. The result of this convolution operation is again a matrix, consisting of the individual output elements.
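As a reference point, here is a minimal direct (nested-loop) sketch of the convolution described above. It assumes stride 1, no padding, and no kernel flip, as is common in CNN libraries. The function name `conv2d_valid` and the example values of `X`, `K`, and the bias are hypothetical, chosen only for illustration.

```python
# Assumes stride 1, no padding, and no kernel flip (CNN-style cross-correlation).
def conv2d_valid(X, K, bias):
    """Direct 'valid' 2D convolution of matrix X with kernel K, plus a scalar bias."""
    H, W = len(X), len(X[0])
    kh, kw = len(K), len(K[0])
    out_h, out_w = H - kh + 1, W - kw + 1
    Y = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum over the kh x kw window whose top-left corner is (i, j)
            acc = bias
            for m in range(kh):
                for n in range(kw):
                    acc += X[i + m][j + n] * K[m][n]
            Y[i][j] = acc
    return Y


# Hypothetical example: 4x4 input, 3x3 kernel -> 2x2 output
X = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]]
K = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
print(conv2d_valid(X, K, bias=0.5))  # [[348.5, 393.5], [528.5, 573.5]]
```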

Let the kernel vector be a vector containing all entries of the kernel matrix (one component per entry), i.e., the kernel vector is the flattened kernel matrix.
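Below is a minimal sketch of the flattening, assuming row-major order. The helper `flatten_row_major` is hypothetical. Any fixed ordering works, as long as the matrix that later multiplies this vector orders its columns the same way.

```python
def flatten_row_major(M):
    """Stack the rows of a 2D list into one vector: M[m][n] lands at index m * cols + n."""
    return [value for row in M for value in row]


K = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]  # hypothetical 3x3 kernel
k = flatten_row_major(K)
print(k)  # [1, 2, 3, 4, 5, 6, 7, 8, 9]
```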

Let the bias vector be a vector whose components are all equal to the bias, where the bias is a scalar value added to each element of the convolution result.

Let the output vector, with one component per element of the convolution result, contain all elements of the convolution. This implies that its components can be rearranged, i.e., the vector can be reshaped into the two-dimensional output matrix.
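Reshaping reverses the flattening. Here is a minimal sketch, again assuming row-major order and using a hypothetical helper `reshape_row_major`. Component `i * cols + j` of the vector becomes entry `(i, j)` of the matrix:

```python
def reshape_row_major(v, rows, cols):
    """Inverse of row-major flattening: component i * cols + j becomes entry [i][j]."""
    if len(v) != rows * cols:
        raise ValueError(f"cannot reshape {len(v)} components into {rows}x{cols}")
    return [v[i * cols:(i + 1) * cols] for i in range(rows)]


y = [348.5, 393.5, 528.5, 573.5]  # hypothetical output vector with 4 components
Y = reshape_row_major(y, 2, 2)
print(Y)  # [[348.5, 393.5], [528.5, 573.5]]
```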

Find the algebraic expression for the output vector as a function of the kernel vector and the bias vector, consisting of only a single matrix-vector product and a single vector sum.
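Below is a minimal sketch of one such construction, commonly called im2col. Each row of a patch matrix `P` holds the input window for one output position, so the output vector is `P k + b`. The sketch assumes stride 1, no padding, no kernel flip, and row-major ordering throughout. All function names and example values are hypothetical. The direct nested-loop convolution is included only to check the result.

```python
# Assumes stride 1, no padding, no kernel flip, row-major ordering throughout.
def conv2d_valid(X, K, bias):
    """Reference: direct 'valid' convolution, used only to check the result."""
    kh, kw = len(K), len(K[0])
    out_h, out_w = len(X) - kh + 1, len(X[0]) - kw + 1
    return [[bias + sum(X[i + m][j + n] * K[m][n]
                        for m in range(kh) for n in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def im2col(X, kh, kw):
    """Patch matrix P: one row per output position (row-major order), each row
    holding the kh x kw input window at that position, flattened row-major."""
    out_h, out_w = len(X) - kh + 1, len(X[0]) - kw + 1
    P = [[X[i + m][j + n] for m in range(kh) for n in range(kw)]
         for i in range(out_h) for j in range(out_w)]
    return P, out_h, out_w


def conv_as_matvec(X, K, bias):
    """Output vector y = P k + b: one matrix-vector product plus one vector sum."""
    P, out_h, out_w = im2col(X, len(K), len(K[0]))
    k = [value for row in K for value in row]                # flattened kernel
    b = [bias] * len(P)                                       # every component equals the bias
    Pk = [sum(p * w for p, w in zip(row, k)) for row in P]    # the matrix-vector product
    y = [u + v for u, v in zip(Pk, b)]                        # the vector sum
    return y, out_h, out_w


X = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]]
K = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
y, out_h, out_w = conv_as_matvec(X, K, bias=0.5)
print(y)  # [348.5, 393.5, 528.5, 573.5]

# Reshaping y row-major recovers the output matrix of the direct computation
Y = [y[i * out_w:(i + 1) * out_w] for i in range(out_h)]
assert Y == conv2d_valid(X, K, bias=0.5)
```

In this example `P` has one row per output element and one column per kernel entry (4 × 9). An equivalent alternative swaps the roles: build a sparse matrix from the kernel entries, with one row per output element and one column per input element, and multiply it by the flattened input. Which form is expected depends on which vector the problem treats as the variable.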
