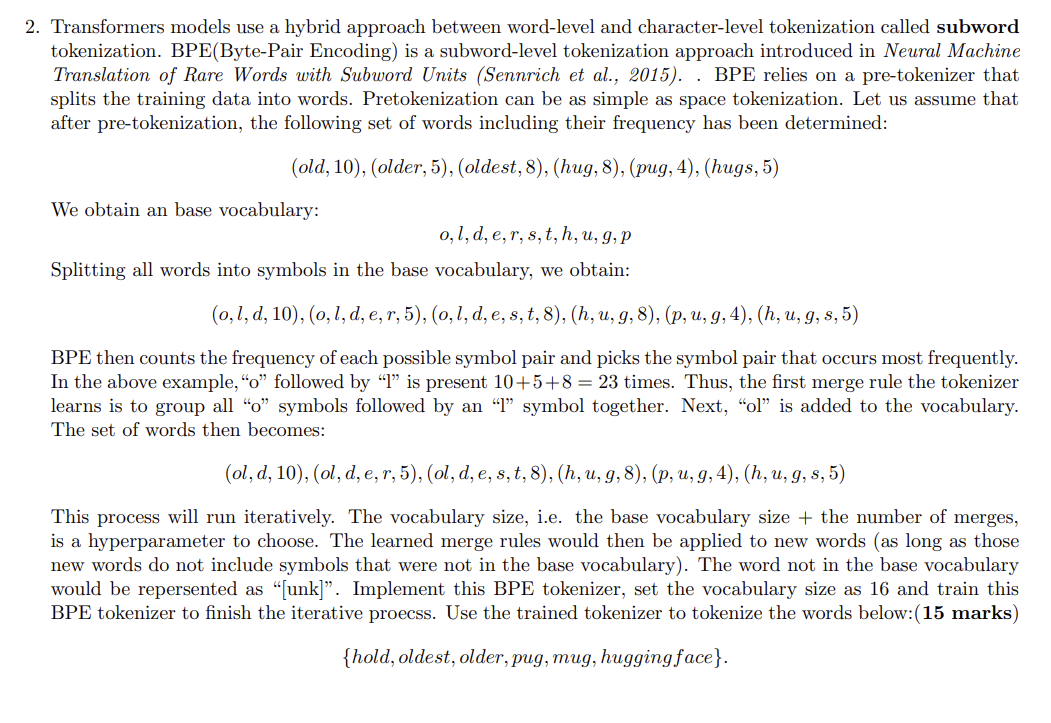

Question: Transformers models use a hybrid approach between word - level and character - level tokenization called subword tokenization. BPE ( Byte - Pair Encoding )

Transformers models use a hybrid approach between wordlevel and characterlevel tokenization called subword

tokenization. BPEBytePair Encoding is a subwordlevel tokenization approach introduced in Neural Machine

Translation of Rare Words with Subword Units Sennrich et al BPE relies on a pretokenizer that

splits the training data into words. Pretokenization can be as simple as space tokenization. Let us assume that

after pretokenization, the following set of words including their frequency has been determined:

oldolderoldesthugpughugs

We obtain an base vocabulary:

Splitting all words into symbols in the base vocabulary, we obtain:

BPE then counts the frequency of each possible symbol pair and picks the symbol pair that occurs most frequently.

In the above example, o followed by l is present times. Thus, the first merge rule the tokenizer

learns is to group all o symbols followed by an l symbol together. Next, ol is added to the vocabulary.

The set of words then becomes:

This process will run iteratively. The vocabulary size, ie the base vocabulary size the number of merges,

is a hyperparameter to choose. The learned merge rules would then be applied to new words as long as those

new words do not include symbols that were not in the base vocabulary The word not in the base vocabulary

would be repersented as unk Implement this BPE tokenizer, set the vocabulary size as and train this

BPE tokenizer to finish the iterative proecss. Use the trained tokenizer to tokenize the words below: marks

holdoldest,older,pug, mug, huggingface

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock