
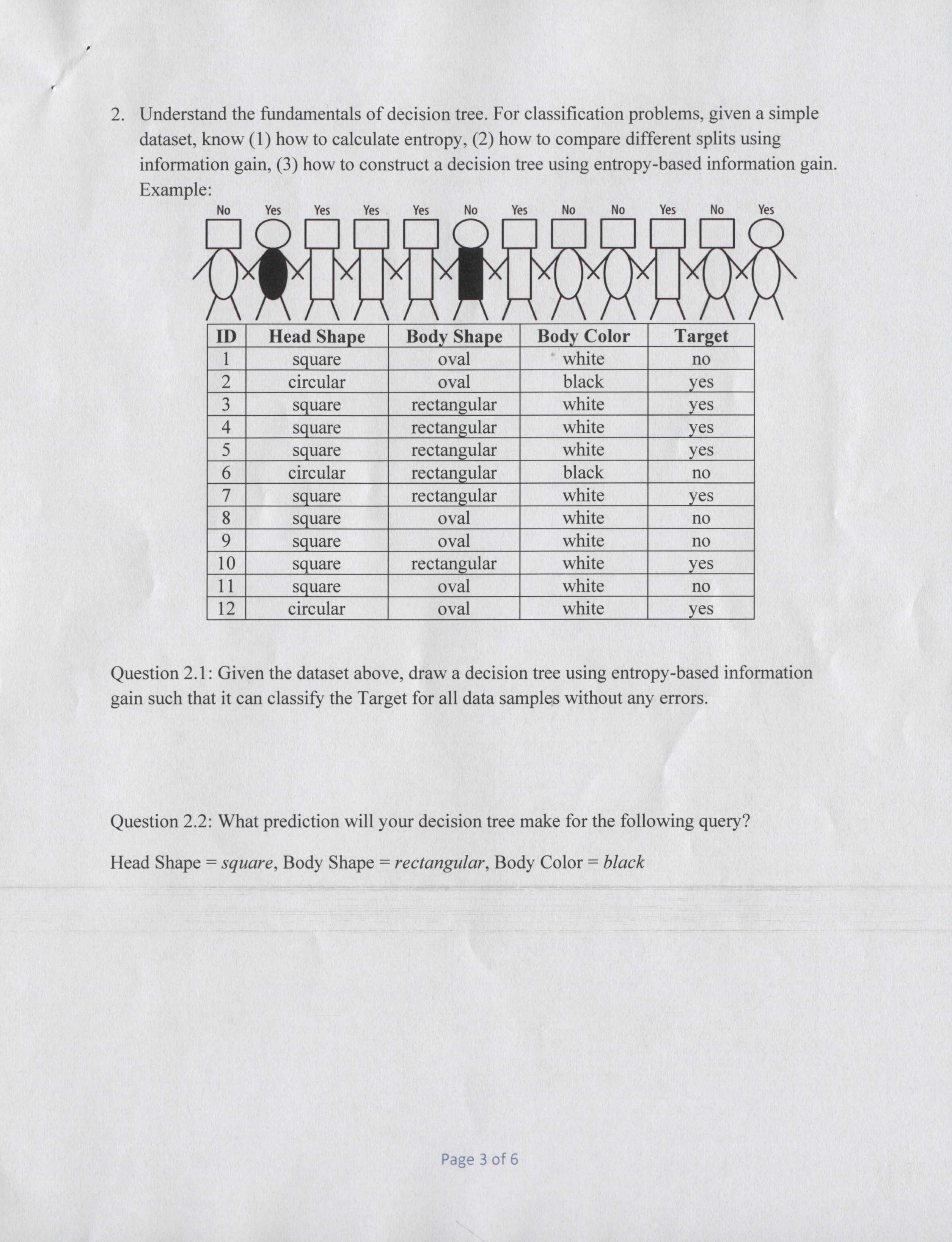

Understand the fundamentals of decision trees. For classification problems, given a simple dataset, know (1) how to calculate entropy, (2) how to compare different splits using information gain, and (3) how to construct a decision tree using entropy-based information gain.
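A minimal Python sketch of the two quantities follows. It assumes each data sample is a dict mapping feature names (and the target name) to values; the function names `entropy` and `information_gain` are illustrative. The entropy of a set of labels is H(t) = -Σ P(t = l) · log2 P(t = l), and the information gain of a feature is the entropy before the split minus the size-weighted entropy of the partitions that the feature creates.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return sum(-(c / total) * log2(c / total) for c in Counter(labels).values())


def information_gain(rows, feature, target):
    """Entropy of the target minus the weighted entropy after splitting on `feature`.

    `rows` is a list of dicts mapping feature names (and the target name) to values.
    """
    parent = entropy([r[target] for r in rows])
    # Group the target labels by the value each row takes for `feature`.
    partitions = {}
    for r in rows:
        partitions.setdefault(r[feature], []).append(r[target])
    # Weight each partition's entropy by the fraction of rows it holds.
    remainder = sum(len(p) / len(rows) * entropy(p) for p in partitions.values())
    return parent - remainder
```

For example, `entropy(["a", "a", "b", "b"])` returns `1.0`, and a feature whose values separate those two labels perfectly has an information gain of 1 bit. Comparing splits then amounts to computing `information_gain` for every candidate feature and keeping the largest.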

Example:

Question: Given the dataset above, draw a decision tree using entropy-based information gain that classifies the Target of every data sample without error.
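Building such a tree is usually done ID3-style: at each node, pick the feature with the highest information gain, split on its values, and recurse until every node is pure. The sketch below assumes rows are dicts and stores a tree as either a leaf label or a `(feature, {value: subtree})` tuple; `id3` and `predict` are hypothetical helper names. Ties in information gain go to the feature listed first, and `predict` raises `KeyError` for a feature value never seen in training. The tree classifies every training sample correctly only if no two samples share all feature values yet differ in Target.

```python
from collections import Counter
from math import log2


def entropy(labels):
    total = len(labels)
    return sum(-(c / total) * log2(c / total) for c in Counter(labels).values())


def information_gain(rows, feature, target):
    partitions = {}
    for r in rows:
        partitions.setdefault(r[feature], []).append(r[target])
    remainder = sum(len(p) / len(rows) * entropy(p) for p in partitions.values())
    return entropy([r[target] for r in rows]) - remainder


def id3(rows, features, target):
    """Build a tree: a leaf is a label; an internal node is (feature, {value: subtree})."""
    labels = [r[target] for r in rows]
    # Stop when the node is pure or no features remain (fall back to the majority label).
    if len(set(labels)) == 1 or not features:
        return Counter(labels).most_common(1)[0][0]
    best = max(features, key=lambda f: information_gain(rows, f, target))
    remaining = [f for f in features if f != best]
    children = {}
    for value in sorted({r[best] for r in rows}):
        subset = [r for r in rows if r[best] == value]
        children[value] = id3(subset, remaining, target)
    return (best, children)


def predict(tree, query):
    """Follow the branches matching `query` until a leaf label is reached."""
    while isinstance(tree, tuple):
        feature, children = tree
        tree = children[query[feature]]
    return tree
```

A query such as the Head Shape / Body Shape / Body Color one is answered by passing a dict of those three feature values to `predict` on the tree built from the dataset.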

Question: What prediction will your decision tree make for the following query?

Head Shape = square, Body Shape = rectangular, Body Color = black

Understand the concept of bootstrapping/bagging and how ensemble learning works. Given a simple dataset, know how to construct a random forest model.
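Bootstrapping draws, from a dataset of n rows, a new sample of n rows chosen uniformly with replacement, so some rows appear more than once and others are left out; bagging then trains one model per sample. A minimal sketch, where the helper name `bootstrap_samples` and its `seed` parameter are illustrative choices:

```python
import random


def bootstrap_samples(rows, n_samples, seed=0):
    """Draw `n_samples` bootstrap samples, each the same size as `rows`,
    by sampling rows uniformly *with replacement*."""
    rng = random.Random(seed)  # seeded so the draws are reproducible
    return [rng.choices(rows, k=len(rows)) for _ in range(n_samples)]
```

Calling `bootstrap_samples(rows, 3)` produces three samples analogous to the bootstrap tables in the practice question, although with different random draws.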

Practice question: Consider the task of predicting whether a postoperative patient should be sent to an intensive care unit (ICU) or to a general ward for recovery. The dataset below contains the details of six patients in a study. CORE describes the core temperature of the patient, which can be low or high, and STABLE describes whether the patient's current temperature is stable (true or false). The target feature in this domain, DECISION, records whether the patient is sent to the ICU (icu) or to a general ward (gen) for recovery. The goal is to build a random forest model for this postoperative patient routing task.
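Once the trees exist, a random forest classifies a query by letting every tree vote and returning the majority label. The sketch assumes each tree is stored as either a leaf label or a `(feature, {value: subtree})` tuple; `predict` and `forest_predict` are hypothetical names. Ties go to the label that received its first vote earliest, which is how `Counter.most_common` orders equal counts.

```python
from collections import Counter


def predict(tree, query):
    """Walk a tree stored as (feature, {value: subtree}) down to a leaf label."""
    while isinstance(tree, tuple):
        feature, children = tree
        tree = children[query[feature]]
    return tree


def forest_predict(trees, query):
    """Bagged-ensemble prediction: each tree votes and the majority label wins."""
    votes = Counter(predict(tree, query) for tree in trees)
    return votes.most_common(1)[0][0]
```

For instance, with three hypothetical trees (one splitting on CORE, one on STABLE, and one that is a single `icu` leaf), a query is routed to whichever of `icu` or `gen` at least two of the trees agree on.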

Question: The three tables below list three bootstrap samples generated from the dataset above. Using these bootstrap samples, create the decision trees that will make up the random forest model, using entropy-based information gain as the feature selection criterion.
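For each bootstrap sample, the first step is to compute the information gain of CORE and STABLE on that sample alone and place the higher-scoring feature at the root; the same computation is then repeated inside any branch that is still impure. A minimal sketch of the root-level step, assuming the sample rows are dicts keyed by `CORE`, `STABLE` and `DECISION` (the helper name `root_split` is hypothetical, and the bootstrap tables themselves are not reproduced here):

```python
from collections import Counter
from math import log2


def entropy(labels):
    total = len(labels)
    return sum(-(c / total) * log2(c / total) for c in Counter(labels).values())


def root_split(sample, features, target):
    """Return (best_feature, {feature: information_gain}) for one bootstrap sample."""
    parent = entropy([r[target] for r in sample])
    gains = {}
    for f in features:
        # Partition the sample's target labels by the value of feature f.
        groups = {}
        for r in sample:
            groups.setdefault(r[f], []).append(r[target])
        remainder = sum(len(g) / len(sample) * entropy(g) for g in groups.values())
        gains[f] = parent - remainder
    # On a tie, max() keeps the feature listed first.
    return max(gains, key=gains.get), gains
```

Calling `root_split(sample, ["CORE", "STABLE"], "DECISION")` once per bootstrap table gives the root of each tree. Standard random forests also restrict each split to a random subset of the features; the question as stated asks only for entropy-based information gain on each bootstrap sample.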

Bootstrap Sample A:

Bootstrap Sample B:
