Question: Using the feedforward neural network calculation method, evaluate a Fully Connected Neural (FCN) Network with Sigmoid and ReLU activations

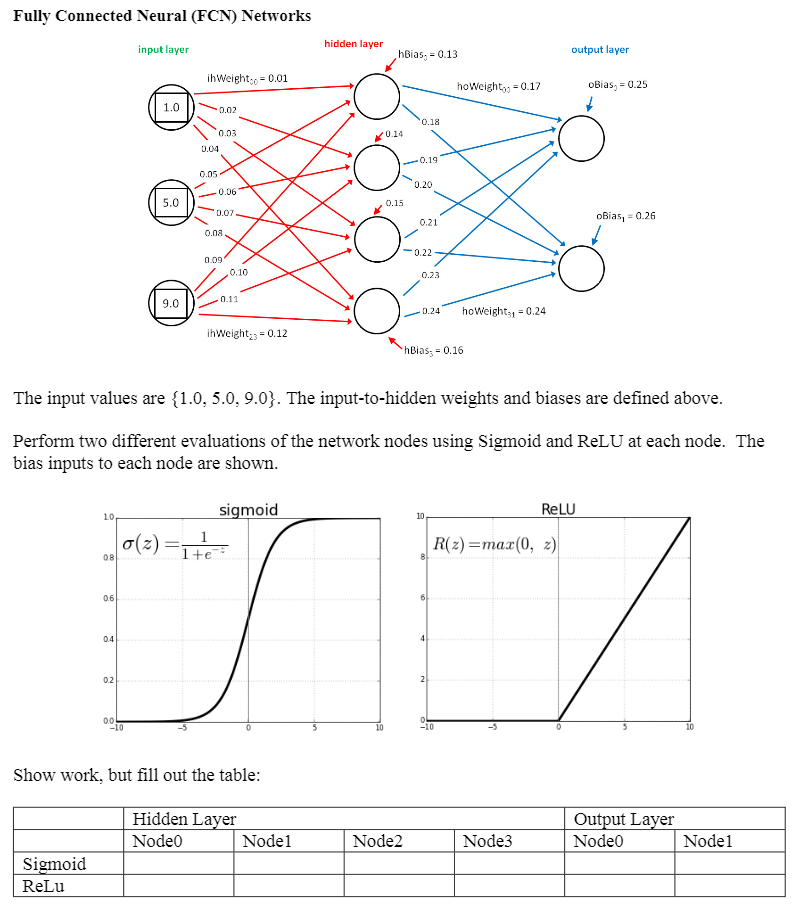

Fully Connected Neural (FCN) Network with an input layer (3 nodes), a hidden layer (4 nodes), and an output layer (2 nodes).

The input values are {1.0, 5.0, 9.0}. The input-to-hidden weights and biases are defined above. Perform two different evaluations of the network nodes using Sigmoid and ReLU at each node. The bias inputs to each node are shown.

Values labeled in the figure:

- Input values: 1.0, 5.0, 9.0
- Input-to-hidden weights: 0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10, 0.11, 0.12
- Hidden biases (hBias): 0.13, 0.14, 0.15, 0.16
- Hidden-to-output weights (ho Weight): 0.17, 0.18, -0.19, 0.20, 0.21, -0.22, 0.24, 0.24
- Output biases (oBias): 0.25, 0.26

Activation functions:

$$\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad R(z) = \max(0, z)$$

Show work, but fill out the table:

| Layer | Node | Sigmoid | ReLU |
|---|---|---|---|
| Hidden Layer | Node0 | | |
| Hidden Layer | Node1 | | |
| Hidden Layer | Node2 | | |
| Hidden Layer | Node3 | | |
| Output Layer | Node0 | | |
| Output Layer | Node1 | | |
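The forward pass the question asks for can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions, not a verified solution. The text of the question lists the weight values but not which edge each one sits on, so the code assumes a row-major layout (the first four input-to-hidden weights leave the first input node, and so on), and it copies the hidden-to-output values exactly as they appear in the text, including 0.24 twice. The names `IH_WEIGHTS`, `HO_WEIGHTS`, `layer`, and `forward` are illustrative only. Check each entry against the figure before relying on the numbers.

```python
import math

# Values as listed in the question. ASSUMPTION: weights[i][j] is the weight
# from node i of the previous layer to node j of the next layer (row-major);
# the figure itself is the authority on which edge carries which weight.
INPUTS = [1.0, 5.0, 9.0]
IH_WEIGHTS = [
    [0.01, 0.02, 0.03, 0.04],   # from input node 0
    [0.05, 0.06, 0.07, 0.08],   # from input node 1
    [0.09, 0.10, 0.11, 0.12],   # from input node 2
]
H_BIASES = [0.13, 0.14, 0.15, 0.16]
HO_WEIGHTS = [
    [0.17, 0.18],    # from hidden node 0
    [-0.19, 0.20],   # from hidden node 1
    [0.21, -0.22],   # from hidden node 2
    [0.24, 0.24],    # from hidden node 3 (0.24 appears twice in the text)
]
O_BIASES = [0.25, 0.26]


def sigmoid(z):
    """Logistic function 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit max(0, z)."""
    return max(0.0, z)


def layer(inputs, weights, biases, activation):
    """Apply activation(bias_j + sum_i inputs[i] * weights[i][j]) per node j."""
    outputs = []
    for j, bias in enumerate(biases):
        z = bias + sum(x * weights[i][j] for i, x in enumerate(inputs))
        outputs.append(activation(z))
    return outputs


def forward(x, activation):
    """Return (hidden activations, output activations) for one input vector."""
    hidden = layer(x, IH_WEIGHTS, H_BIASES, activation)
    output = layer(hidden, HO_WEIGHTS, O_BIASES, activation)
    return hidden, output


if __name__ == "__main__":
    for name, fn in (("Sigmoid", sigmoid), ("ReLU", relu)):
        hidden, output = forward(INPUTS, fn)
        print(name)
        for j, value in enumerate(hidden):
            print(f"  Hidden Node{j}: {value:.4f}")
        for k, value in enumerate(output):
            print(f"  Output Node{k}: {value:.4f}")
```

Running the script prints one value per table cell for each activation. Because every pre-activation sum in the hidden layer is positive under this layout, the ReLU column for the hidden layer is just the weighted sum plus bias, which makes it a convenient hand check before computing the sigmoid column.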
