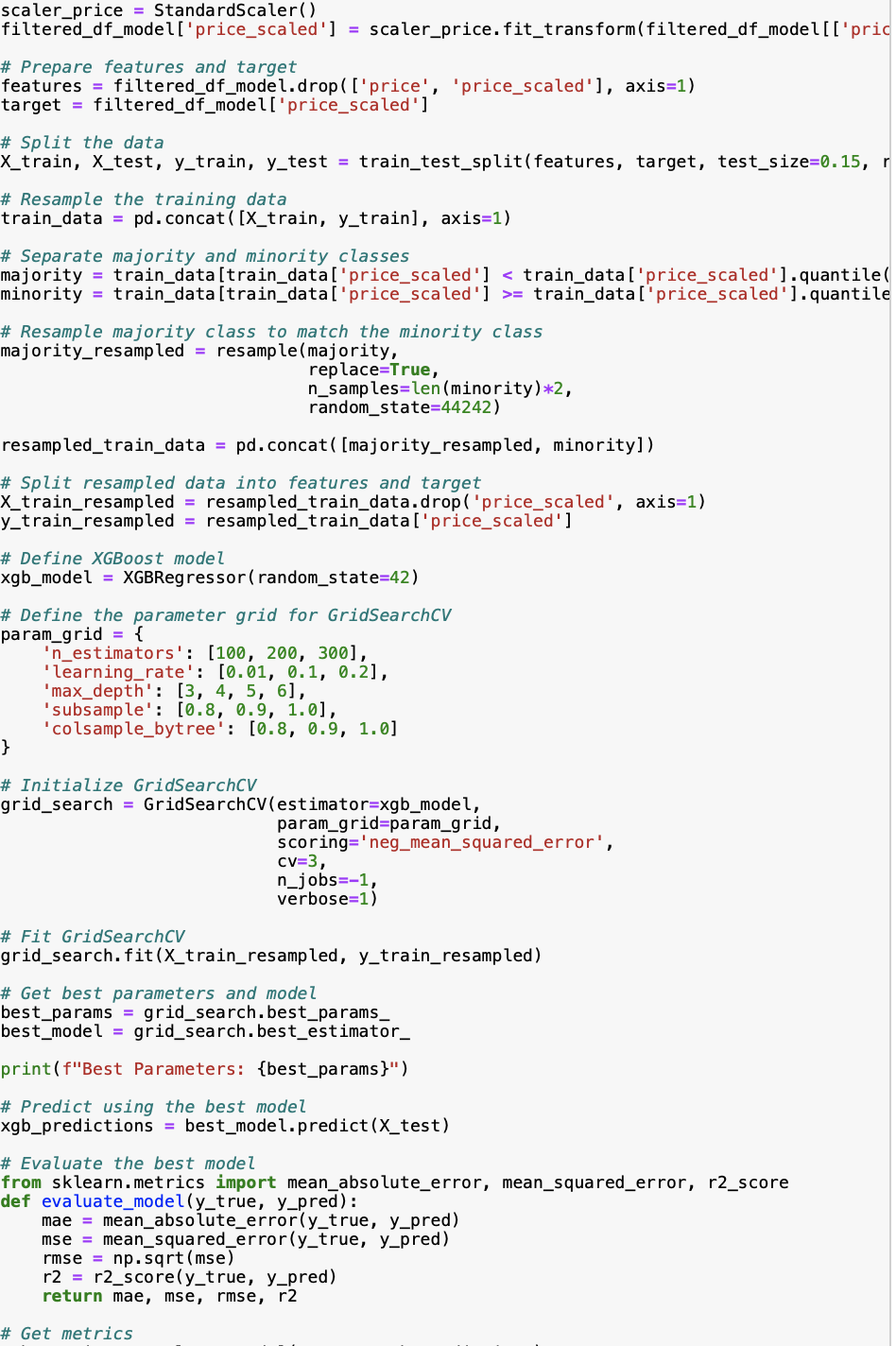

Question: Using hyperparameter tuning, we want to optimize model performance. Please provide a summary of the code.

```python
# Scale the target and build the feature matrix.
# filtered_df_model and scaler_price are created earlier in the notebook (not shown).
filtered_df_model['price_scaled'] = scaler_price.fit_transform(filtered_df_model[['price']])
features = filtered_df_model.drop(['price', 'price_scaled'], axis=1)
```
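The question does not show how `scaler_price` is built. The sketch below assumes it behaves like a min-max scaler, which is an assumption. A standard scaler would use the standard deviation as the conversion factor instead. The sketch shows in plain Python what `fit_transform` does to the price column, and why the final metrics are in scaled units. With min-max scaling, an MAE or RMSE on `price_scaled` converts back to price units by multiplying by `(max - min)`, and an MSE by `(max - min) ** 2`. The helper names are hypothetical.

```python
# Hypothetical helpers: assume scaler_price behaves like a min-max scaler.
def fit_min_max(values):
    """Return the (min, max) pair a min-max scaler learns during fit."""
    return min(values), max(values)

def scale(value, lo, hi):
    """Map a value into [0, 1] using the fitted range."""
    return (value - lo) / (hi - lo)

def unscale(scaled, lo, hi):
    """Invert the scaling back to the original price units."""
    return scaled * (hi - lo) + lo

prices = [100.0, 150.0, 300.0]
lo, hi = fit_min_max(prices)
scaled = [scale(p, lo, hi) for p in prices]
print(scaled)  # [0.0, 0.25, 1.0]

scaled_mae = 0.125  # hypothetical MAE measured on the scaled target
print(scaled_mae * (hi - lo))  # same MAE in price units: 25.0
```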

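```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.utils import resample

target = filtered_df_model['price_scaled']
X_train, X_test, y_train, y_test = train_test_split(
    features, target, test_size=..., random_state=...  # values not preserved
)

# Rebalance the training set around a quantile of the scaled price
train_data = pd.concat([X_train, y_train], axis=1)
# Quantile value not preserved; the comparison operators are assumed
majority = train_data[train_data['price_scaled'] < train_data['price_scaled'].quantile(...)]
minority = train_data[train_data['price_scaled'] >= train_data['price_scaled'].quantile(...)]

# Downsample the majority group to the size of the minority group
majority_resampled = resample(majority, n_samples=len(minority))
resampled_train_data = pd.concat([majority_resampled, minority])

# Features of the rebalanced training set
X_train_resampled = resampled_train_data.drop('price_scaled', axis=1)
```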
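The rebalancing step splits the training rows at a quantile of `price_scaled`. It then shrinks the larger group to the size of the smaller one, so the model sees the high-price tail as often as the rest. The question does not preserve the quantile value or the comparison direction. The sketch below assumes the usual reading: the majority is the set of rows below an upper quantile. It reproduces the mechanism in plain Python with hypothetical helper names and toy data. The quantile uses linear interpolation, which is pandas' default for `Series.quantile`.

```python
import math
import random

def linear_quantile(values, q):
    """q-quantile with linear interpolation (pandas' default for Series.quantile)."""
    v = sorted(values)
    pos = (len(v) - 1) * q
    lo, hi = math.floor(pos), math.ceil(pos)
    return v[lo] + (v[hi] - v[lo]) * (pos - lo)

def balance_by_quantile(rows, key, q, seed=0):
    """Downsample rows below the q-quantile of `key` to the count of rows at or above it.

    Assumption: "majority" means below the cutoff, "minority" means at or above it.
    """
    cutoff = linear_quantile([r[key] for r in rows], q)
    majority = [r for r in rows if r[key] < cutoff]
    minority = [r for r in rows if r[key] >= cutoff]
    rng = random.Random(seed)
    # sklearn.utils.resample draws WITH replacement by default (replace=True);
    # random.choices mirrors that, so some majority rows may repeat
    majority_resampled = rng.choices(majority, k=len(minority))
    return majority_resampled + minority

# Toy data: 20 scaled prices spread evenly over [0, 1]
rows = [{"price_scaled": i / 19} for i in range(20)]
balanced = balance_by_quantile(rows, "price_scaled", 0.75)
print(len(balanced))  # 10: 5 rows at/above the cutoff + 5 resampled rows below it
```

This has two consequences. First, `resample` samples with replacement unless `replace=False` is passed, so the downsampled majority can contain duplicates. Second, only the training set is rebalanced, so the test metrics are measured on the original price distribution.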

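```python
from xgboost import XGBRegressor
from sklearn.model_selection import GridSearchCV

# Target of the rebalanced training set
y_train_resampled = resampled_train_data['price_scaled']

xgb_model = XGBRegressor(random_state=...)  # seed not preserved

# Grid values not preserved; the keys match the printed best parameters
param_grid = {
    'colsample_bytree': [...],
    'learning_rate': [...],
    'max_depth': [...],
    'n_estimators': [...],
    'subsample': [...],
}

grid_search = GridSearchCV(
    estimator=xgb_model,
    param_grid=param_grid,
    scoring='neg_mean_squared_error',
    cv=...,       # fold count not preserved
    n_jobs=...,   # value not preserved
    verbose=...,  # a value > 0 produces the "Fitting ..." log line
)

# Fit GridSearchCV on the rebalanced training set
grid_search.fit(X_train_resampled, y_train_resampled)
best_params = grid_search.best_params_
best_model = grid_search.best_estimator_

print(f"Best Parameters: {best_params}")
xgb_predictions = best_model.predict(X_test)

# Metrics used by evaluate_model below
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
```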
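`scoring='neg_mean_squared_error'` follows scikit-learn's greater-is-better convention for scorers. `GridSearchCV` ranks candidates by their mean cross-validated score, so the MSE is negated and the candidate with the highest (least negative) value wins. With the default `refit=True`, `best_estimator_` is then refit on the whole rebalanced training set. Below is a minimal sketch of that selection rule; the candidate names and per-fold MSEs are hypothetical.

```python
def select_best(fold_mses_by_candidate):
    """Return (best_candidate, best_score) using the mean negated MSE across folds."""
    # Negate so that "higher is better", as scikit-learn's scorers expect
    scores = {
        name: -sum(mses) / len(mses)
        for name, mses in fold_mses_by_candidate.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]

# Hypothetical per-fold MSEs for two candidate settings
fold_mses = {
    "learning_rate=0.3": [0.5, 0.75],
    "learning_rate=0.1": [0.25, 0.5],
}
best, score = select_best(fold_mses)
print(best, score)  # learning_rate=0.1 -0.375
```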

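```python
def evaluate_model(y_true, y_pred):
    mae = mean_absolute_error(y_true, y_pred)
    mse = mean_squared_error(y_true, y_pred)
    rmse = mse ** 0.5
    r2 = r2_score(y_true, y_pred)
    return mae, mse, rmse, r2

# Get metrics
xgb_metrics = evaluate_model(y_test, xgb_predictions)

print(f"XGBoost Regressor: MAE={xgb_metrics[0]:f} MSE={xgb_metrics[1]:f} "
      f"RMSE={xgb_metrics[2]:f} R2={xgb_metrics[3]:f}")
```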
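`evaluate_model` returns MAE, MSE, RMSE, and R² in that order, which is why the print statement indexes `xgb_metrics[0]` through `xgb_metrics[3]`. To cross-check what those scikit-learn functions compute, here is a dependency-free version of the same four formulas. It is a hypothetical helper, not part of the original code, and it assumes `y_true` is not constant (otherwise R² is undefined).

```python
import math

def evaluate_model_manual(y_true, y_pred):
    """Return (MAE, MSE, RMSE, R2) computed from their textbook definitions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    r2 = 1 - ss_res / ss_tot
    return mae, mse, rmse, r2

mae, mse, rmse, r2 = evaluate_model_manual([1, 2, 3, 4], [1, 2, 3, 5])
print([round(m, 4) for m in (mae, mse, rmse, r2)])  # [0.25, 0.25, 0.5, 0.8]
```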

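Result printed (the numeric values were not preserved):

```
Fitting ... folds for each of ... candidates, totalling ... fits
Best Parameters: {'colsample_bytree': ..., 'learning_rate': ..., 'max_depth': ..., 'n_estimators': ..., 'subsample': ...}
XGBoost Regressor: MAE=... MSE=... RMSE=... R2=...
```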
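The first printed line is `GridSearchCV`'s progress log, shown when `verbose > 0`. The candidate count is the product of the list lengths in `param_grid`, and the total is folds × candidates. With the default `refit=True`, one more fit on the full training set follows, and it is not included in that total. Below is a small sketch with hypothetical grid values and fold count, since the question's actual values were not preserved.

```python
from itertools import product

def expand_grid(param_grid):
    """List every parameter combination in a dict-of-lists grid (keys sorted)."""
    keys = sorted(param_grid)
    return [dict(zip(keys, values)) for values in product(*(param_grid[k] for k in keys))]

# Hypothetical grid values; only the key names come from the printed best parameters
param_grid = {
    "colsample_bytree": [0.8, 1.0],
    "learning_rate": [0.05, 0.1],
    "max_depth": [3, 5],
    "n_estimators": [100, 200],
    "subsample": [0.8, 1.0],
}
cv_folds = 5  # hypothetical fold count

candidates = expand_grid(param_grid)
total_fits = cv_folds * len(candidates)
print(f"Fitting {cv_folds} folds for each of {len(candidates)} candidates, totalling {total_fits} fits")
# Fitting 5 folds for each of 32 candidates, totalling 160 fits
```

Because the cost grows multiplicatively with every key added, `RandomizedSearchCV` is the usual alternative when an exhaustive grid becomes too expensive.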
