Question: Using the equations discussed in the lecture and slides, prove the backpropagation learning rule.

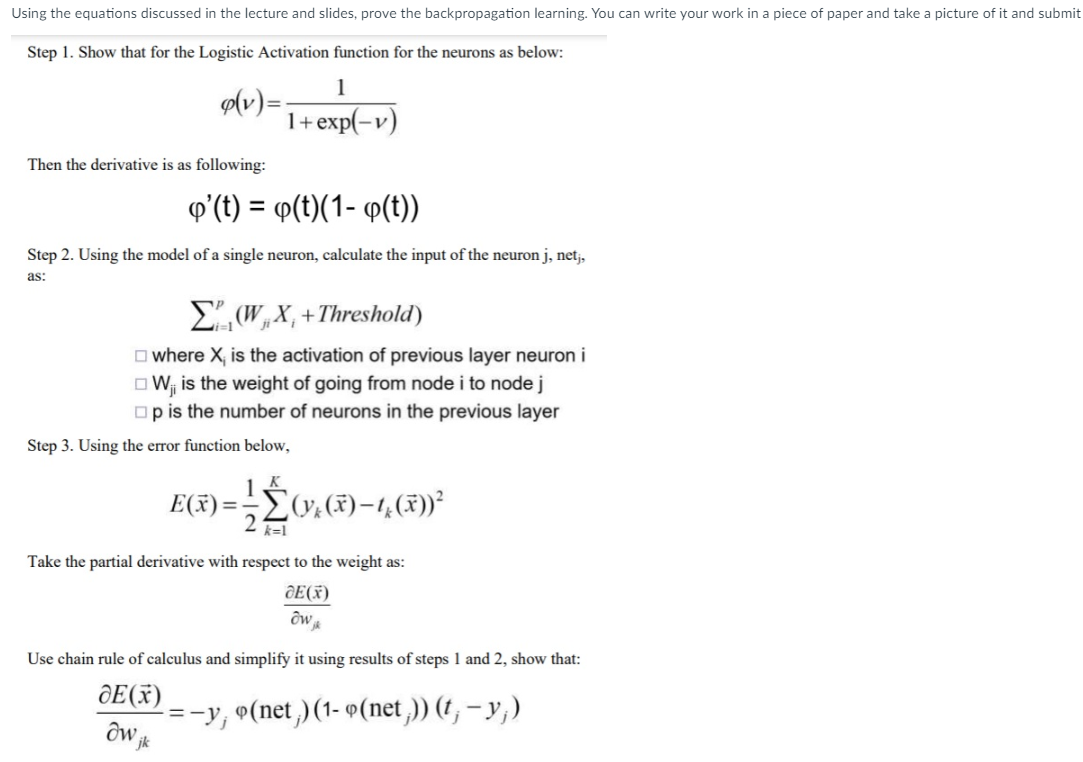

Using the equations discussed in the lecture and slides, prove the backpropagation learning rule. You can write your work on a piece of paper, take a picture of it, and submit it.

Step 1. Show that for the logistic activation function of the neurons,

$$\varphi(v) = \frac{1}{1 + \exp(-v)}$$

the derivative is

$$\varphi'(t) = \varphi(t)\,\bigl(1 - \varphi(t)\bigr)$$

Step 2. Using the model of a single neuron, calculate the input of neuron $j$, $\mathrm{net}_j$, as

$$\mathrm{net}_j = \sum_{i=1}^{p} \left( W_{ji} X_i + \text{Threshold} \right)$$

where $X_i$ is the activation of neuron $i$ in the previous layer, $W_{ji}$ is the weight going from node $i$ to node $j$, and $p$ is the number of neurons in the previous layer.

Step 3. Using the error function

$$E(x) = \frac{1}{2} \sum_{k=1}^{K} \bigl( y_k(x) - t_k(x) \bigr)^2$$

take the partial derivative with respect to the weight,

$$\frac{\partial E(x)}{\partial w_{jk}}$$

Use the chain rule of calculus and simplify using the results of Steps 1 and 2 to show that

$$\frac{\partial E(x)}{\partial w_{jk}} = -\,y_j\,\varphi(\mathrm{net}_j)\,\bigl(1 - \varphi(\mathrm{net}_j)\bigr)\,(t_j - y_j)$$
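The result in Step 3 can be sanity-checked numerically before writing out the proof. The sketch below is only an illustration, not the lecture's method: it compares the chain-rule gradient for a single output neuron against a central finite-difference estimate. All function names and the sample numbers are made up for the example. It assumes that the leading factor in the Step 3 formula is the activation feeding the weight (called `inputs` here), that `y` is the neuron's output, and that the threshold is added to the net input once as a bias term. The gradient with respect to the weights does not depend on how the threshold enters.

```python
import math


def logistic(v):
    """Logistic activation from Step 1: 1 / (1 + exp(-v))."""
    return 1.0 / (1.0 + math.exp(-v))


def logistic_derivative(v):
    """Derivative claimed in Step 1: phi(v) * (1 - phi(v))."""
    s = logistic(v)
    return s * (1.0 - s)


def net_input(weights, inputs, threshold):
    # Assumption: the threshold is added once, as a bias term.
    return sum(w * x for w, x in zip(weights, inputs)) + threshold


def error(weights, inputs, threshold, target):
    """Squared error from Step 3 for a single output neuron."""
    y = logistic(net_input(weights, inputs, threshold))
    return 0.5 * (y - target) ** 2


def analytic_gradient(weights, inputs, threshold, target):
    """Chain rule: dE/dw_i = -x_i * phi'(net) * (t - y)."""
    net = net_input(weights, inputs, threshold)
    y = logistic(net)
    return [-x * logistic_derivative(net) * (target - y) for x in inputs]


def numeric_gradient(weights, inputs, threshold, target, eps=1e-6):
    """Central finite-difference estimate of dE/dw_i."""
    grads = []
    for i in range(len(weights)):
        up = list(weights)
        down = list(weights)
        up[i] += eps
        down[i] -= eps
        diff = error(up, inputs, threshold, target) - error(down, inputs, threshold, target)
        grads.append(diff / (2.0 * eps))
    return grads


# Hypothetical sample values, chosen only for illustration.
weights = [0.5, -0.3, 0.8]
inputs = [1.0, 2.0, -1.5]
threshold = 0.1
target = 1.0

analytic = analytic_gradient(weights, inputs, threshold, target)
numeric = numeric_gradient(weights, inputs, threshold, target)
max_diff = max(abs(a - n) for a, n in zip(analytic, numeric))
print("largest |analytic - numeric| difference:", max_diff)
```

If the two gradients agree to within finite-difference error, the signs and factors in the derivation are consistent with the error function as written. This is a check on the algebra, not a substitute for the proof.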
