Question:

![Value iteration update for V_{k+1}(s)](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f515648a27c_82866f5156429c3f.jpg)

![Bellman optimality equation for V*(s) and problem statement](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f51565121d0_82866f51564b1f06.jpg)

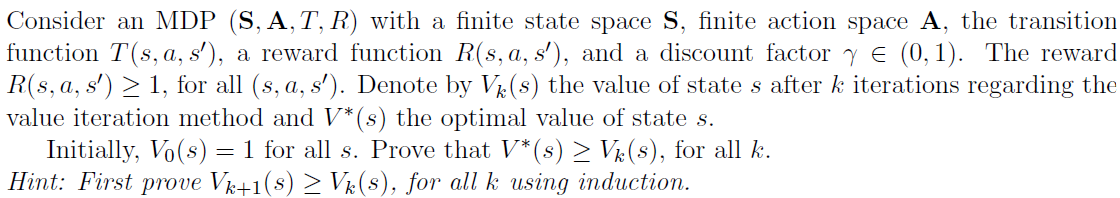

Consider an MDP $(S, A, T, R)$ with a finite state space $S$, a finite action space $A$, a transition function $T(s, a, s')$, a reward function $R(s, a, s')$, and a discount factor $\gamma \in (0, 1)$. The reward satisfies $R(s, a, s') > 1$ for all $(s, a, s')$. Denote by $V_k(s)$ the value of state $s$ after $k$ iterations of the value iteration method, and by $V^*(s)$ the optimal value of state $s$:

$$V_{k+1}(s) \leftarrow \max_{a} \sum_{s'} T(s, a, s') \left[ R(s, a, s') + \gamma V_k(s') \right]$$

$$V^*(s) = \max_{a} \sum_{s'} T(s, a, s') \left[ R(s, a, s') + \gamma V^*(s') \right]$$

Initially, $V_0(s) = 1$ for all $s$. Prove that $V^*(s) > V_k(s)$ for all $k$.

Hint: First prove $V_{k+1}(s) > V_k(s)$ for all $k$ using induction.
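The claim can be sanity-checked numerically before attempting the proof. The sketch below is only an illustration, not a proof: it builds a small random MDP whose rewards are all greater than 1, starts value iteration from an initial value of 1 in every state, and checks that each iterate is strictly larger than the previous one and strictly below a long-run approximation of the optimal values. The helper names (`random_mdp`, `value_iteration_step`, `run_value_iteration`), the MDP size, the seed, and the choice of a discount factor of 0.9 are all assumptions made for this example.

```python
import random


def value_iteration_step(V, T, R, gamma):
    """One Bellman backup:
    V_{k+1}(s) = max_a sum_{s'} T[s][a][s'] * (R[s][a][s'] + gamma * V[s'])."""
    n_states = len(T)
    n_actions = len(T[0])
    return [
        max(
            sum(T[s][a][s2] * (R[s][a][s2] + gamma * V[s2]) for s2 in range(n_states))
            for a in range(n_actions)
        )
        for s in range(n_states)
    ]


def random_mdp(n_states, n_actions, seed=0):
    """Hypothetical helper: random transition probabilities and rewards > 1."""
    rng = random.Random(seed)
    T, R = [], []
    for _ in range(n_states):
        T_s, R_s = [], []
        for _ in range(n_actions):
            weights = [rng.random() + 1e-3 for _ in range(n_states)]
            total = sum(weights)
            T_s.append([w / total for w in weights])  # each row sums to 1
            R_s.append([1.0 + rng.uniform(0.1, 2.0) for _ in range(n_states)])
        T.append(T_s)
        R.append(R_s)
    return T, R


def run_value_iteration(T, R, gamma, n_iters):
    """Return the list [V_0, V_1, ..., V_{n_iters}] with V_0(s) = 1 for all s."""
    V = [1.0] * len(T)
    history = [V]
    for _ in range(n_iters):
        V = value_iteration_step(V, T, R, gamma)
        history.append(V)
    return history


T, R = random_mdp(n_states=4, n_actions=3, seed=0)
gamma = 0.9  # assumed discount factor in (0, 1)

history = run_value_iteration(T, R, gamma, n_iters=50)
# Many more iterations serve as a numerical stand-in for V*.
V_star_approx = run_value_iteration(T, R, gamma, n_iters=2000)[-1]

# Check V_{k+1}(s) > V_k(s) for every state and every consecutive pair.
monotone = all(
    v_next > v
    for Vk, Vk1 in zip(history, history[1:])
    for v, v_next in zip(Vk, Vk1)
)
# Check V*(s) > V_k(s) for every state and every k up to 50.
below_optimum = all(
    v_star > v for Vk in history for v_star, v in zip(V_star_approx, Vk)
)

print("V_{k+1} > V_k for all checked k:", monotone)
print("V* > V_k for all checked k:", below_optimum)
```

The check mirrors the structure suggested by the hint: strict monotonicity of the iterates first, then comparison with the limit. It only covers one sampled MDP and finitely many iterations, so the induction argument is still needed for the general statement.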
