Question: Value Function 1 point possible ( graded ) As above, we are working with the 3 3 grid example with + 1 reward at the

Value Function

point possible graded

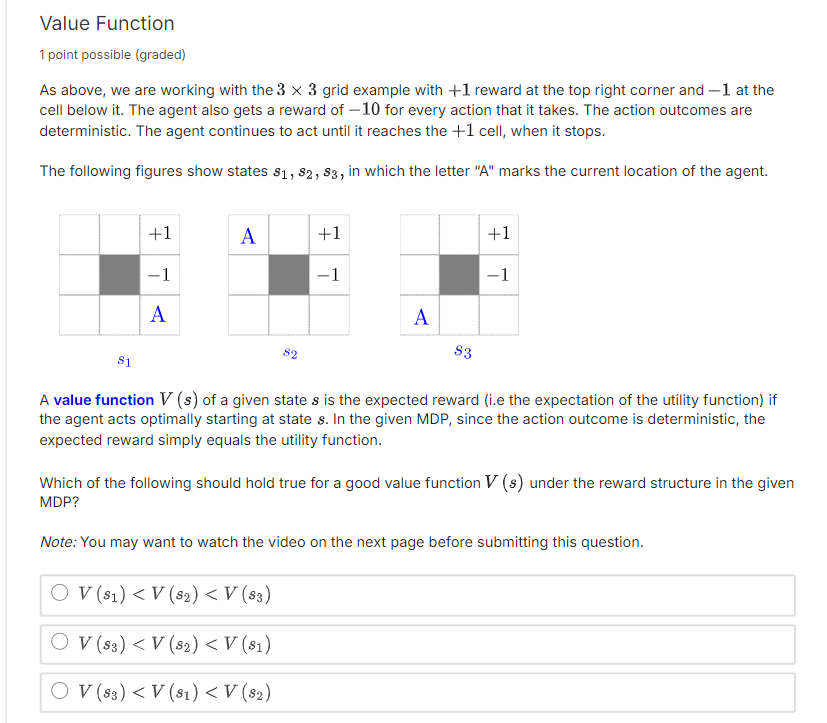

As above, we are working with the grid example with reward at the top right corner and at the

cell below it The agent also gets a reward of for every action that it takes. The action outcomes are

deterministic. The agent continues to act until it reaches the cell, when it stops.

The following figures show states in which the letter A marks the current location of the agent.

A value function of a given state is the expected reward ie the expectation of the utility function if

the agent acts optimally starting at state In the given MDP since the action outcome is deterministic, the

expected reward simply equals the utility function.

Which of the following should hold true for a good value function under the reward structure in the given

MDP

Note: You may want to watch the video on the next page before submitting this question.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock