Question: We are familiar with binary Huffman codes. Input symbols are converted into codewords comprising a sequence of O's and 1's, or bits. In a ternary

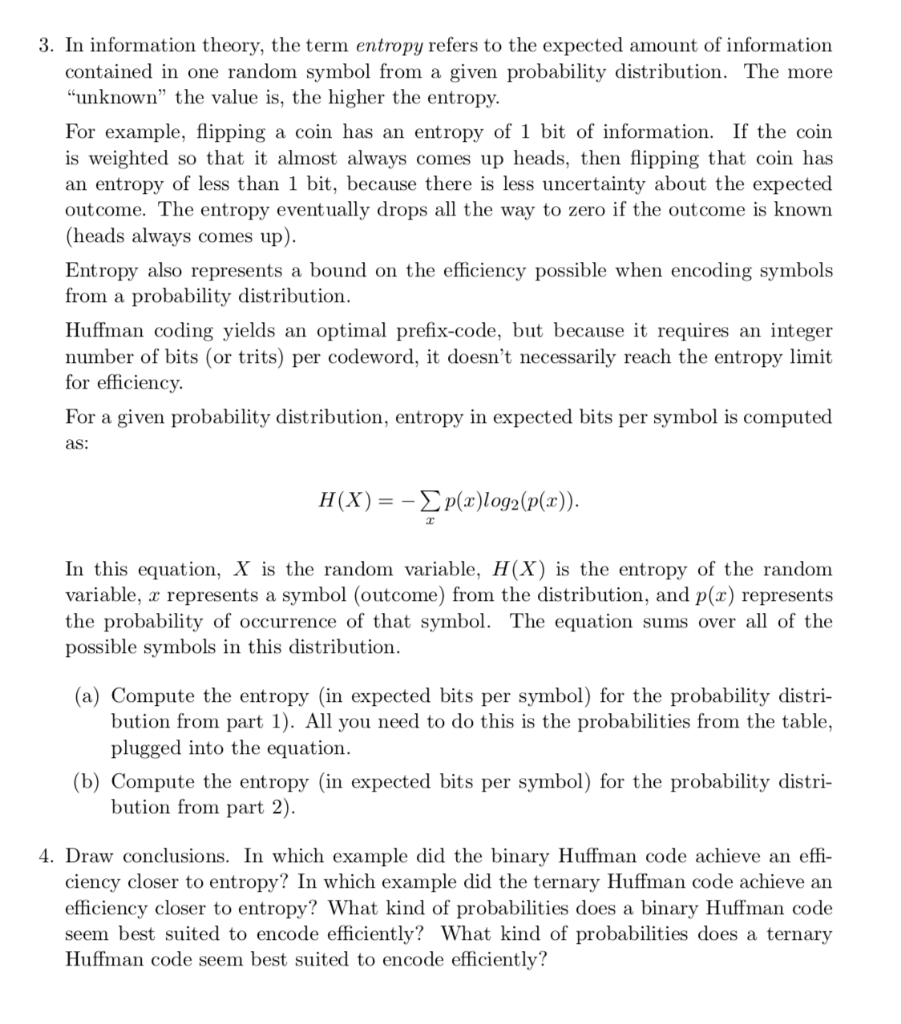

We are familiar with binary Huffman codes. Input symbols are converted into codewords comprising a sequence of O's and 1's, or bits. In a ternary Huffman code, codewords comprise a sequence of trits, each of which can have one of three values: 0,1 or 2. The Huffman coding algorithm to form ternary codewords is the same as for binary codewords except three symbols are combined into a new metasymbol at each step. (To make the combining work out, sometimes an additional O-frequency placeholder symbol is used, but in the examples below that won't be necessary.) 1. Given the following input alphabet and corresponding frequencies (expressed as prob- abilities): Symbol Frequency 0.50 B 0.26 0.12 0.06 E 0.06 D (a) Build the corresponding binary Huffman code. (b) Compute the expected bits per symbol for this binary Huffman code. Remember that this is weighted by the probability of occurrence of each symbol. (c) Build the corresponding ternary Huffman code. (d) Compute the expected trits per symbol for this ternary Huffman code. (e) Convert the expected trits to expected bits per symbol by multiplying your previous answer by 1.585. 2. Repeat the same 5 steps from part 1) with the following input alphabet and corre- sponding frequencies (expressed as probabilities): Symbol Frequency 0.35 0.35 0.10 0.10 0.10 3. In information theory, the term entropy refers to the expected amount of information contained in one random symbol from a given probability distribution. The more "unknown the value is, the higher the entropy. For example, flipping a coin has an entropy of 1 bit of information. If the coin is weighted so that it almost always comes up heads, then flipping that coin has an entropy of less than 1 bit, because there is less uncertainty about the expected outcome. The entropy eventually drops all the way to zero if the outcome is known (heads always comes up). Entropy also represents a bound on the efficiency possible when encoding symbols from a probability distribution. Huffman coding yields an optimal prefix-code, but because it requires an integer number of bits (or trits) per codeword, it doesn't necessarily reach the entropy limit for efficiency. For a given probability distribution, entropy in expected bits per symbol is computed as: H(X) = - p(x)log2(p(2)). In this equation, X is the random variable, H(X) is the entropy of the random variable, x represents a symbol (outcome) from the distribution, and p(x) represents the probability of occurrence of that symbol. The equation sums over all of the possible symbols in this distribution. (a) Compute the entropy (in expected bits per symbol) for the probability distri- bution from part 1). All you need to do this is the probabilities from the table, plugged into the equation. (b) Compute the entropy (in expected bits per symbol) for the probability distri- bution from part 2). 4. Draw conclusions. In which example did the binary Huffman code achieve an effi- ciency closer to entropy? In which example did the ternary Huffman code achieve an efficiency closer to entropy? What kind of probabilities does a binary Huffman code seem best suited to encode efficiently? What kind of probabilities does a ternary Huffman code seem best suited to encode efficiently? We are familiar with binary Huffman codes. Input symbols are converted into codewords comprising a sequence of O's and 1's, or bits. In a ternary Huffman code, codewords comprise a sequence of trits, each of which can have one of three values: 0,1 or 2. The Huffman coding algorithm to form ternary codewords is the same as for binary codewords except three symbols are combined into a new metasymbol at each step. (To make the combining work out, sometimes an additional O-frequency placeholder symbol is used, but in the examples below that won't be necessary.) 1. Given the following input alphabet and corresponding frequencies (expressed as prob- abilities): Symbol Frequency 0.50 B 0.26 0.12 0.06 E 0.06 D (a) Build the corresponding binary Huffman code. (b) Compute the expected bits per symbol for this binary Huffman code. Remember that this is weighted by the probability of occurrence of each symbol. (c) Build the corresponding ternary Huffman code. (d) Compute the expected trits per symbol for this ternary Huffman code. (e) Convert the expected trits to expected bits per symbol by multiplying your previous answer by 1.585. 2. Repeat the same 5 steps from part 1) with the following input alphabet and corre- sponding frequencies (expressed as probabilities): Symbol Frequency 0.35 0.35 0.10 0.10 0.10 3. In information theory, the term entropy refers to the expected amount of information contained in one random symbol from a given probability distribution. The more "unknown the value is, the higher the entropy. For example, flipping a coin has an entropy of 1 bit of information. If the coin is weighted so that it almost always comes up heads, then flipping that coin has an entropy of less than 1 bit, because there is less uncertainty about the expected outcome. The entropy eventually drops all the way to zero if the outcome is known (heads always comes up). Entropy also represents a bound on the efficiency possible when encoding symbols from a probability distribution. Huffman coding yields an optimal prefix-code, but because it requires an integer number of bits (or trits) per codeword, it doesn't necessarily reach the entropy limit for efficiency. For a given probability distribution, entropy in expected bits per symbol is computed as: H(X) = - p(x)log2(p(2)). In this equation, X is the random variable, H(X) is the entropy of the random variable, x represents a symbol (outcome) from the distribution, and p(x) represents the probability of occurrence of that symbol. The equation sums over all of the possible symbols in this distribution. (a) Compute the entropy (in expected bits per symbol) for the probability distri- bution from part 1). All you need to do this is the probabilities from the table, plugged into the equation. (b) Compute the entropy (in expected bits per symbol) for the probability distri- bution from part 2). 4. Draw conclusions. In which example did the binary Huffman code achieve an effi- ciency closer to entropy? In which example did the ternary Huffman code achieve an efficiency closer to entropy? What kind of probabilities does a binary Huffman code seem best suited to encode efficiently? What kind of probabilities does a ternary Huffman code seem best suited to encode efficiently

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts