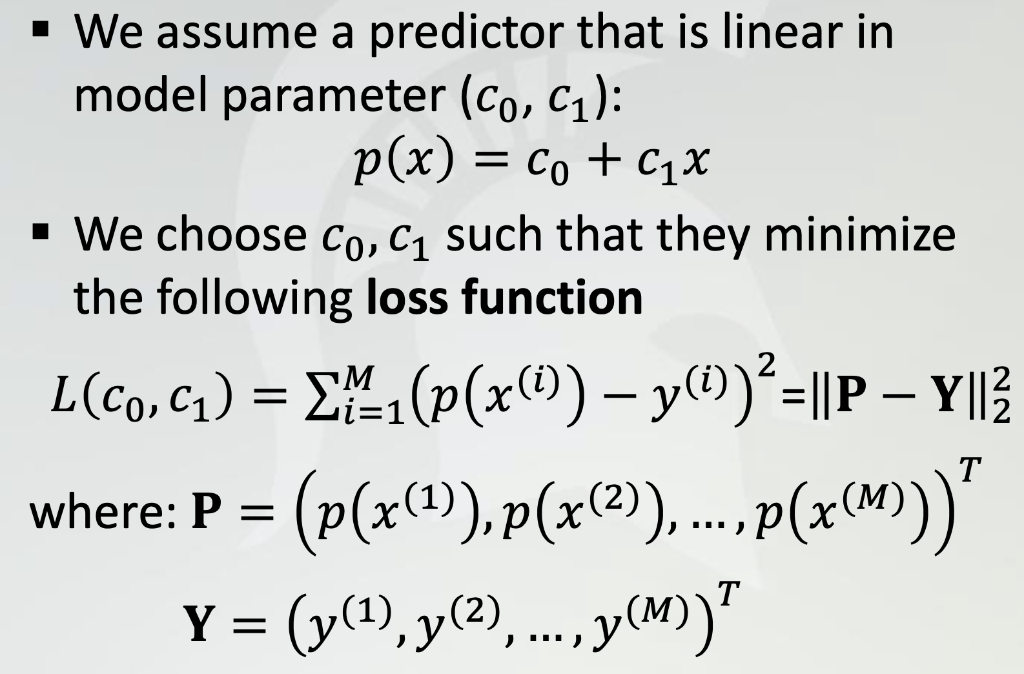

Question:

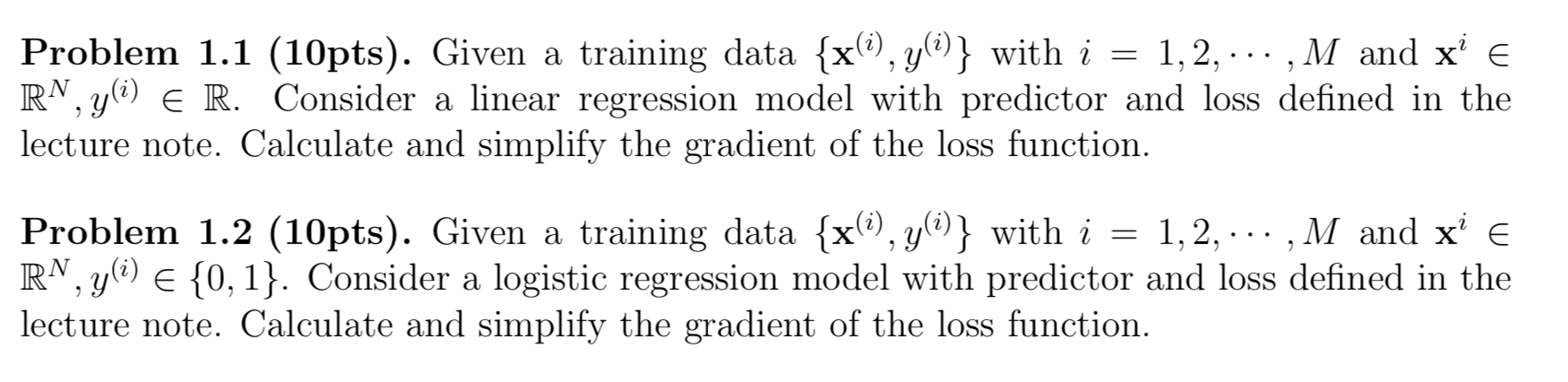

We assume a predictor that is linear in the model parameters $(c_0, c_1)$:

$$p(x) = c_0 + c_1 x$$

We choose $c_0, c_1$ such that they minimize the following loss function:

$$L(c_0, c_1) = \sum_{i=1}^{M} \left(p(x^{(i)}) - y^{(i)}\right)^2 = \|P - Y\|_2^2$$

where:

$$P = \left(p(x^{(1)}), p(x^{(2)}), \ldots, p(x^{(M)})\right)^T, \qquad Y = \left(y^{(1)}, y^{(2)}, \ldots, y^{(M)}\right)^T$$

Problem 1.1 (10pts). Given training data $\{(x^{(i)}, y^{(i)})\}$ with $i = 1, 2, \ldots, M$ and $x^{(i)} \in \mathbb{R}^N$, $y^{(i)} \in \mathbb{R}$. Consider a linear regression model with predictor and loss defined in the lecture note. Calculate and simplify the gradient of the loss function.

Problem 1.2 (10pts). Given training data $\{(x^{(i)}, y^{(i)})\}$ with $i = 1, 2, \ldots, M$ and $x^{(i)} \in \mathbb{R}^N$, $y^{(i)} \in \{0, 1\}$. Consider a logistic regression model with predictor and loss defined in the lecture note. Calculate and simplify the gradient of the loss function.
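One way to sanity-check a hand-derived gradient for either problem is to compare it against a finite-difference estimate. The sketch below is not taken from the lecture note and rests on several assumptions. For Problem 1.1 it extends the predictor to $x \in \mathbb{R}^N$ as $p(x) = c_0 + c^T x$ and uses the sum-of-squares loss stated in the question, which gives the candidate gradient $\nabla L = 2 \sum_i (p(x^{(i)}) - y^{(i)}) \, (1, x^{(i)})$. For Problem 1.2 the lecture note's definitions are not reproduced in the question, so the code assumes the common choice of a sigmoid predictor with cross-entropy loss, for which the candidate gradient is $\sum_i (p(x^{(i)}) - y^{(i)}) \, (1, x^{(i)})$. All function names and the random test data are hypothetical.

```python
import math
import random


def linear_predictor(theta, x):
    """p(x) = c_0 + c . x, with theta = [c_0, c_1, ..., c_N] (assumed N-dim form)."""
    return theta[0] + sum(c * xj for c, xj in zip(theta[1:], x))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear_loss(theta, xs, ys):
    """Sum of squared residuals, ||P - Y||^2, as stated in the question."""
    return sum((linear_predictor(theta, x) - y) ** 2 for x, y in zip(xs, ys))


def linear_grad(theta, xs, ys):
    """Candidate gradient: 2 * sum_i residual_i * (1, x_i)."""
    grad = [0.0] * len(theta)
    for x, y in zip(xs, ys):
        r = linear_predictor(theta, x) - y
        grad[0] += 2.0 * r
        for j, xj in enumerate(x):
            grad[j + 1] += 2.0 * r * xj
    return grad


def logistic_loss(theta, xs, ys):
    """ASSUMPTION: cross-entropy loss with a sigmoid predictor."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(linear_predictor(theta, x))
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total


def logistic_grad(theta, xs, ys):
    """Candidate gradient under that assumption: sum_i (p_i - y_i) * (1, x_i)."""
    grad = [0.0] * len(theta)
    for x, y in zip(xs, ys):
        r = sigmoid(linear_predictor(theta, x)) - y
        grad[0] += r
        for j, xj in enumerate(x):
            grad[j + 1] += r * xj
    return grad


def numerical_grad(loss, theta, xs, ys, eps=1e-6):
    """Central finite differences, one coordinate of theta at a time."""
    grad = []
    for k in range(len(theta)):
        up, down = list(theta), list(theta)
        up[k] += eps
        down[k] -= eps
        grad.append((loss(up, xs, ys) - loss(down, xs, ys)) / (2 * eps))
    return grad


def max_abs_diff(a, b):
    return max(abs(u - v) for u, v in zip(a, b))


# Hypothetical random data: M = 20 samples, N = 3 features.
random.seed(0)
M, N = 20, 3
xs = [[random.gauss(0, 1) for _ in range(N)] for _ in range(M)]
theta = [random.gauss(0, 1) for _ in range(N + 1)]
ys_real = [random.gauss(0, 1) for _ in range(M)]
ys_bin = [random.randint(0, 1) for _ in range(M)]

lin_err = max_abs_diff(linear_grad(theta, xs, ys_real),
                       numerical_grad(linear_loss, theta, xs, ys_real))
log_err = max_abs_diff(logistic_grad(theta, xs, ys_bin),
                       numerical_grad(logistic_loss, theta, xs, ys_bin))
print("linear regression gradient error:  ", lin_err)
print("logistic regression gradient error:", log_err)
```

If the lecture note scales the loss (for example by $1/2$ or $1/M$), the analytic gradients scale by the same factor; the finite-difference check still applies once `linear_loss` or `logistic_loss` is adjusted to match.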
