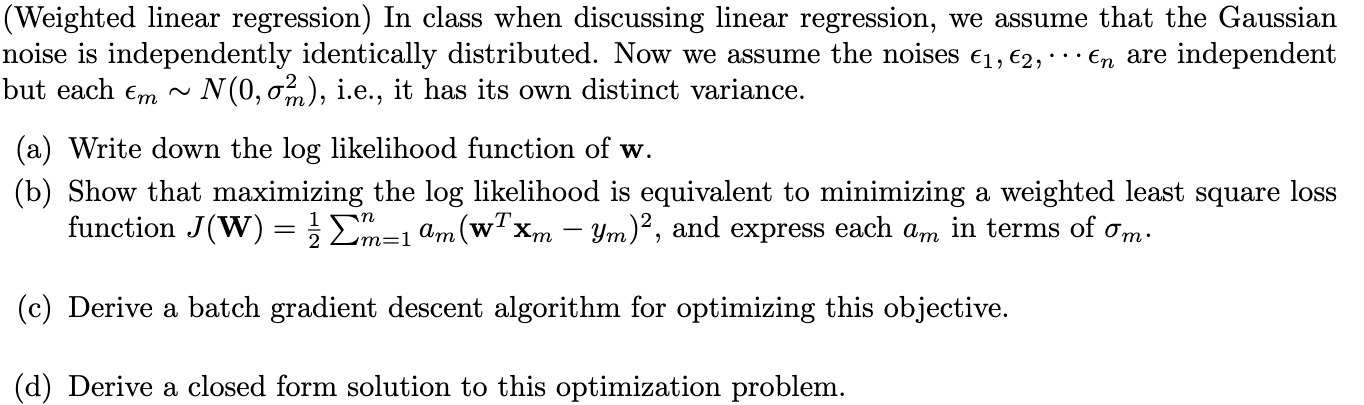

Question: (Weighted linear regression) In class, when discussing linear regression, we assumed that the Gaussian noise is independent and identically distributed. Now we assume the noises $\epsilon_1, \epsilon_2, \dots, \epsilon_M$ are independent but each $\epsilon_m \sim N(0, \sigma_m^2)$, i.e., it has its own distinct variance.

(a) Write down the log likelihood function of $w$.

(b) Show that maximizing the log likelihood is equivalent to minimizing a weighted least squares loss function $J(w) = \frac{1}{2} \sum_{m=1}^{M} a_m (w^T x_m - y_m)^2$, and express each $a_m$ in terms of $\sigma_m$.

(c) Derive a batch gradient descent algorithm for optimizing this objective.

(d) Derive a closed form solution to this optimization problem.
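To make parts (b) through (d) concrete, here is a small pure-Python sketch, not a verified expert solution. It assumes the weights come out as $a_m = 1/\sigma_m^2$, which is what the Gaussian log likelihood gives once the terms that do not depend on $w$ are dropped. The function names, the toy data, the step size, and the iteration count are all made up for illustration, and each input vector is assumed to carry a leading 1 for the bias term. The gradient used is $\nabla J(w) = \sum_m a_m (w^T x_m - y_m) x_m$, and the closed form solves $(X^T A X) w = X^T A y$ with $A = \mathrm{diag}(a_1, \dots, a_M)$.

```python
def predict(w, x):
    """Inner product w^T x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def gradient(w, X, y, a):
    """Gradient of J(w) = 1/2 * sum_m a_m (w^T x_m - y_m)^2."""
    g = [0.0] * len(w)
    for xm, ym, am in zip(X, y, a):
        r = am * (predict(w, xm) - ym)  # weighted residual
        for j, xj in enumerate(xm):
            g[j] += r * xj
    return g


def batch_gradient_descent(X, y, a, lr=0.05, steps=2000):
    """Batch update w <- w - lr * grad J(w); lr and steps are arbitrary choices."""
    w = [0.0] * len(X[0])
    for _ in range(steps):
        g = gradient(w, X, y, a)
        w = [wj - lr * gj for wj, gj in zip(w, g)]
    return w


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def closed_form(X, y, a):
    """Solve the weighted normal equations (X^T A X) w = X^T A y."""
    d = len(X[0])
    XtAX = [[sum(am * xm[i] * xm[j] for xm, am in zip(X, a))
             for j in range(d)] for i in range(d)]
    XtAy = [sum(am * xm[i] * ym for xm, ym, am in zip(X, y, a))
            for i in range(d)]
    return solve(XtAX, XtAy)


# Toy data (hypothetical): each row is [1, x]; sigma holds per-sample noise std.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [1.1, 2.8, 5.9, 7.0]
sigma = [0.5, 1.0, 2.0, 1.0]
a = [1.0 / s ** 2 for s in sigma]  # assumed weights a_m = 1 / sigma_m^2

w_gd = batch_gradient_descent(X, y, a)
w_cf = closed_form(X, y, a)
print("gradient descent:", w_gd)
print("closed form:     ", w_cf)
```

On this toy data the two estimates should agree closely, since the loss is a convex quadratic in $w$. Samples with larger $\sigma_m$ get smaller weights, so noisier observations pull the fit less.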
