
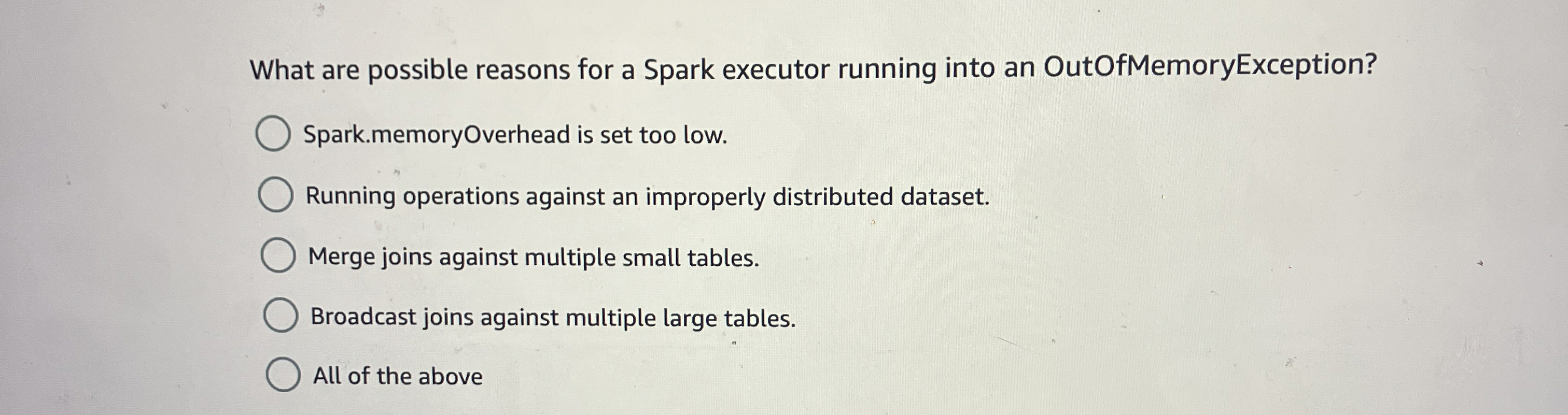

What are possible reasons for a Spark executor running into an OutOfMemoryException?

spark.executor.memoryOverhead is set too low.

Running operations against an improperly distributed dataset.

Merge joins against multiple small tables.

Broadcast joins against multiple large tables.

All of the above
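As a rough illustration of the first two options, the sketch below estimates how much off-heap overhead an executor gets by default and how unevenly a dataset is spread across partitions. It is plain Python and does not call Spark. The helper names (`default_memory_overhead_mib`, `container_request_mib`, `skew_ratio`) are hypothetical, and the constants assume the commonly documented default for JVM executors of 10% of executor memory with a 384 MiB floor, which may differ in your Spark version or cluster manager.

```python
# Assumed defaults for JVM executors; verify against your Spark version.
MIN_OVERHEAD_MIB = 384
DEFAULT_OVERHEAD_FACTOR = 0.10


def default_memory_overhead_mib(executor_memory_mib, factor=DEFAULT_OVERHEAD_FACTOR):
    """Estimate the overhead used when spark.executor.memoryOverhead is unset."""
    return max(MIN_OVERHEAD_MIB, int(executor_memory_mib * factor))


def container_request_mib(executor_memory_mib, overhead_mib=None):
    """Estimate total memory requested per executor: heap plus overhead."""
    if overhead_mib is None:
        overhead_mib = default_memory_overhead_mib(executor_memory_mib)
    return executor_memory_mib + overhead_mib


def skew_ratio(partition_row_counts):
    """Return largest partition size divided by the mean partition size.

    A value near 1.0 suggests an even distribution; a large value means one
    task must hold far more data than the others.
    """
    mean = sum(partition_row_counts) / len(partition_row_counts)
    return max(partition_row_counts) / mean


if __name__ == "__main__":
    print(default_memory_overhead_mib(8192))
    print(container_request_mib(8192))
    print(skew_ratio([100, 100, 100, 700]))
```

With a small heap the 384 MiB floor dominates, so workloads that use a lot of off-heap memory may need the overhead raised explicitly. A high skew ratio points to a dataset that would benefit from repartitioning or key salting before wide operations.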
