Question: What is the total sample count N? What are the maximum likelihood estimates for the probabilities of each outcome? Now, use Laplace smoothing with

What is the total sample count N?

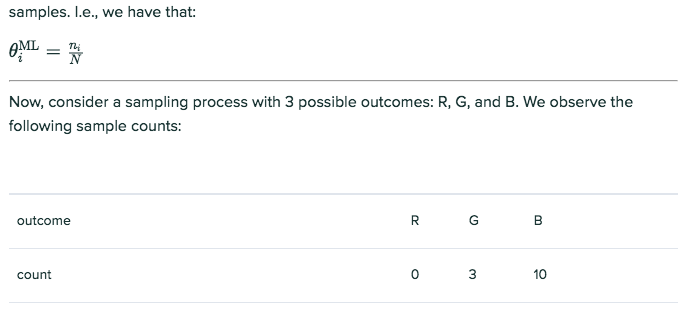

What are the maximum likelihood estimates for the probabilities of each outcome?

Now, use Laplace smoothing with strength $k = 4$ to estimate the probabilities of each outcome.

Now, consider Laplace smoothing in the limit $k \rightarrow \infty$. Fill in the corresponding probability estimates.
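
For reference, here is a sketch of the usual add-$k$ form of Laplace smoothing, assuming that is the definition the question intends. The symbols are notation chosen for illustration: $P_{\text{LAP},k}$ is the smoothed estimate, $n_x$ is the observed count of outcome $x$, $N$ is the total sample count, and $|X|$ is the number of possible outcomes.

$$P_{\text{LAP},k}(x) = \frac{n_x + k}{N + k\,|X|}$$

Under this definition, $k = 0$ recovers the maximum likelihood estimate $n_x / N$, and as $k \rightarrow \infty$ every estimate approaches the uniform value $1 / |X|$, regardless of the observed counts.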

We will begin with a short derivation. Consider a probability distribution with a domain that consists of $|X|$ different values. We get to observe $N$ total samples from this distribution. We use $n_i$ to represent the number of the $N$ samples for which outcome $i$ occurs. Our goal is to estimate the probabilities $\theta_i$ for each of the events $i = 1, 2, \ldots, |X| - 1$. The probability of the last outcome, $|X|$, equals $1 - \theta_1 - \theta_2 - \cdots - \theta_{|X|-1}$.

In maximum likelihood estimation, we choose the $\theta_i$ that maximize the likelihood of the observed samples:

$$L(\text{samples}, \theta) \propto \left(1 - \theta_1 - \theta_2 - \cdots - \theta_{|X|-1}\right)^{n_{|X|}} \prod_{i=1}^{|X|-1} \theta_i^{n_i}$$

For this derivation, it is easiest to work with the log of the likelihood. Maximizing log-likelihood also maximizes likelihood, since the quantities are related by a monotonic transformation. Taking logs, we obtain (up to an additive constant that does not depend on $\theta$):

$$\log L(\text{samples}, \theta) = n_{|X|} \log\left(1 - \theta_1 - \theta_2 - \cdots - \theta_{|X|-1}\right) + \sum_{i=1}^{|X|-1} n_i \log \theta_i$$

Setting derivatives with respect to $\theta_i$ equal to zero, we obtain $|X| - 1$ equations in the $|X| - 1$ unknowns $\theta_1, \ldots, \theta_{|X|-1}$:

$$-\frac{n_{|X|}}{1 - \theta_1 - \theta_2 - \cdots - \theta_{|X|-1}} + \frac{n_i}{\theta_i} = 0, \qquad i = 1, \ldots, |X| - 1$$

Multiplying by $\theta_i \left(1 - \theta_1 - \theta_2 - \cdots - \theta_{|X|-1}\right)$ makes the original $|X| - 1$ nonlinear equations into $|X| - 1$ linear equations:

$$-n_{|X|}\,\theta_i + n_i \left(1 - \theta_1 - \theta_2 - \cdots - \theta_{|X|-1}\right) = 0, \qquad i = 1, \ldots, |X| - 1$$

That is, the maximum likelihood estimate of $\theta$ can be found by solving a linear system of $|X| - 1$ equations in $|X| - 1$ unknowns. Doing so shows that the maximum likelihood estimate corresponds to simply the count for each outcome divided by the total number of samples: $\theta_i^{ML} = n_i / N$.
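
The estimates asked for above can be sketched in a few lines of Python. This is a minimal illustration, not the reference solution: the counts below are hypothetical (the actual data is in the image and is not reproduced here), the function names `mle` and `laplace` are made up for this example, and the smoothing uses the common add-$k$ form $(n_i + k) / (N + k|X|)$, which is assumed to be the definition the question intends.

```python
from fractions import Fraction


def mle(counts):
    """Maximum likelihood estimate: count of each outcome / total samples."""
    total = sum(counts.values())
    return {x: Fraction(n, total) for x, n in counts.items()}


def laplace(counts, k):
    """Add-k smoothing: (count + k) / (total + k * number of outcomes).

    `counts` must list every outcome in the domain, including any
    outcome that was observed zero times.
    """
    total = sum(counts.values())
    domain_size = len(counts)
    return {
        x: Fraction(n + k, total + k * domain_size)
        for x, n in counts.items()
    }


# Hypothetical counts, NOT the data from the question's image.
counts = {"a": 5, "b": 3, "c": 2}

n_total = sum(counts.values())         # total sample count N
ml_estimates = mle(counts)             # n_i / N
smoothed = laplace(counts, 4)          # strength k = 4
near_limit = laplace(counts, 10**9)    # very large k, close to uniform

print("N =", n_total)
print("MLE:", ml_estimates)
print("Laplace, k = 4:", smoothed)
print("Laplace, large k:", {x: float(p) for x, p in near_limit.items()})
```

Exact fractions are used so the results can be compared directly with hand calculations. Raising `k` pulls every estimate toward `1 / len(counts)`, which is the behaviour the limiting case of the question asks about.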
