Question: When approaching these problems, how would we go about parts 1, 2, and 3?

![Problem 1 statement and Table 1](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/10/6703fbcdcaaaa_3176703fbcdaa2d3.jpg)

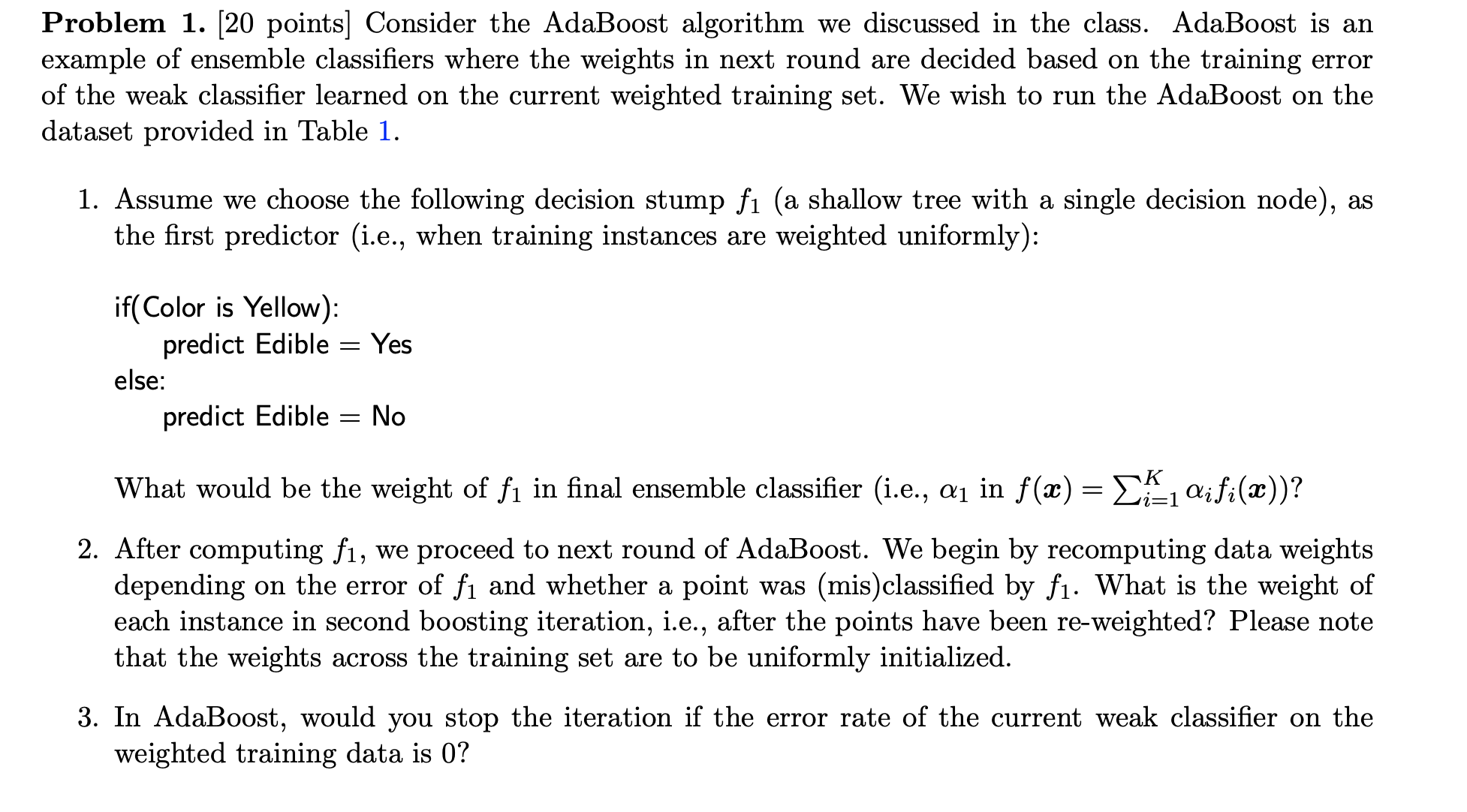

Problem 1. [20 points] Consider the AdaBoost algorithm we discussed in class. AdaBoost is an example of an ensemble classifier in which the instance weights for the next round are determined by the training error of the weak classifier learned on the current weighted training set. We wish to run AdaBoost on the dataset provided in Table 1.

1. Assume we choose the following decision stump $f_1$ (a shallow tree with a single decision node) as the first predictor (i.e., when the training instances are weighted uniformly): `if Color is Yellow: predict Edible = Yes; else: predict Edible = No`. What would be the weight of $f_1$ in the final ensemble classifier (i.e., $\alpha_1$ in $f(x) = \sum_{i=1}^{n} \alpha_i f_i(x)$)?
2. After computing $f_1$, we proceed to the next round of AdaBoost. We begin by recomputing the data weights depending on the error of $f_1$ and on whether each point was (mis)classified by $f_1$. What is the weight of each instance in the second boosting iteration, i.e., after the points have been re-weighted? Note that the weights across the training set are initialized uniformly.
3. In AdaBoost, would you stop iterating if the error rate of the current weak classifier on the weighted training data is 0?

| Instance | Color | Size | Shape | Edible |
|---|---|---|---|---|
| D1 | Yellow | Small | Round | Yes |
| D2 | Yellow | Small | Round | No |
| D3 | Green | Small | Irregular | Yes |
| D4 | Green | Large | Irregular | No |
| D5 | Yellow | Large | Round | Yes |
| D6 | Yellow | Small | Round | Yes |
| D7 | Yellow | Small | Round | Yes |
| D8 | Yellow | Small | Round | Yes |
| D9 | Green | Small | Round | No |
| D10 | Yellow | Large | Round | No |
| D11 | Yellow | Large | Round | Yes |
| D12 | Yellow | Large | Round | No |
| D13 | Yellow | Large | Round | No |
| D14 | Yellow | Large | Round | No |
| D15 | Yellow | Small | Irregular | Yes |
| D16 | Yellow | Large | Irregular | Yes |

Table 1: Mushroom data with 16 instances, three categorical features, and binary labels
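One way to approach all three parts is to compute them directly from Table 1. The sketch below is a minimal Python illustration, not the course's reference solution: it assumes the common convention $\alpha_t = \tfrac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$ and the update $w_i \leftarrow w_i e^{\pm\alpha_t}/Z_t$ (plus for misclassified points, minus for correctly classified ones, with $Z_t$ the normalizer). The names `DATA`, `f1`, and `adaboost_round` are hypothetical helpers introduced for illustration. With uniform weights of 1/16, $f_1$ misclassifies D2, D3, D10, D12, D13, and D14, so $\epsilon_1 = 6/16 = 0.375$ and $\alpha_1 = \tfrac{1}{2}\ln(5/3) \approx 0.2554$.

```python
import math

# Table 1 (hypothetical encoding): (instance, color, size, shape, edible).
DATA = [
    ("D1", "Yellow", "Small", "Round", "Yes"),
    ("D2", "Yellow", "Small", "Round", "No"),
    ("D3", "Green", "Small", "Irregular", "Yes"),
    ("D4", "Green", "Large", "Irregular", "No"),
    ("D5", "Yellow", "Large", "Round", "Yes"),
    ("D6", "Yellow", "Small", "Round", "Yes"),
    ("D7", "Yellow", "Small", "Round", "Yes"),
    ("D8", "Yellow", "Small", "Round", "Yes"),
    ("D9", "Green", "Small", "Round", "No"),
    ("D10", "Yellow", "Large", "Round", "No"),
    ("D11", "Yellow", "Large", "Round", "Yes"),
    ("D12", "Yellow", "Large", "Round", "No"),
    ("D13", "Yellow", "Large", "Round", "No"),
    ("D14", "Yellow", "Large", "Round", "No"),
    ("D15", "Yellow", "Small", "Irregular", "Yes"),
    ("D16", "Yellow", "Large", "Irregular", "Yes"),
]


def f1(color):
    """Decision stump from part 1: Yellow -> Yes, anything else -> No."""
    return "Yes" if color == "Yellow" else "No"


def adaboost_round(weights, predictions, labels):
    """One AdaBoost round (hypothetical helper).

    Assumes alpha = 0.5 * ln((1 - eps) / eps). Returns (eps, alpha, new_weights);
    new_weights is None when eps == 0 because alpha is then infinite.
    """
    missed = [p != y for p, y in zip(predictions, labels)]
    # Weighted error: total weight of the misclassified instances.
    eps = sum(w for w, m in zip(weights, missed) if m)
    if eps == 0:
        # Part 3: a perfect weak learner gives alpha = +inf; nothing left to reweight.
        return eps, math.inf, None
    alpha = 0.5 * math.log((1 - eps) / eps)
    # Misclassified points are scaled by e^{+alpha}, correct ones by e^{-alpha}.
    unnormalized = [w * math.exp(alpha if m else -alpha) for w, m in zip(weights, missed)]
    z = sum(unnormalized)  # normalizer Z_t so the new weights sum to 1
    return eps, alpha, [u / z for u in unnormalized]


n = len(DATA)
weights = [1 / n] * n  # uniform initialization: 1/16 each
preds = [f1(color) for _, color, _, _, _ in DATA]
labels = [edible for *_, edible in DATA]
eps1, alpha1, w2 = adaboost_round(weights, preds, labels)

print(f"eps_1 = {eps1:.4f}, alpha_1 = {alpha1:.4f}")
for (name, *_), p, y, w in zip(DATA, preds, labels, w2):
    print(f"{name:>3}  {'missed' if p != y else 'correct'}  w = {w:.4f}")
```

Running it should report $\epsilon_1 = 0.375$ and $\alpha_1 \approx 0.2554$. After normalization, each of the six misclassified instances gets weight $1/12 \approx 0.0833$ and each of the ten correctly classified instances gets $1/20 = 0.05$, so the misclassified points together hold exactly half of the total weight. If your class drops the ½ in $\alpha_t$, then $\alpha_1$ doubles to $\ln(5/3) \approx 0.5108$, but the normalized weights are unchanged. For part 3, the zero-error branch reflects that $\epsilon_t = 0$ makes $\alpha_t$ infinite and the reweighting undefined; since AdaBoost weights stay strictly positive, a zero weighted error also means that weak classifier already labels every training point correctly, so stopping there is the usual choice.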
