Question: When making an API request to the GPT-3 model, considering that the maximum number of tokens that can be generated is 2 0

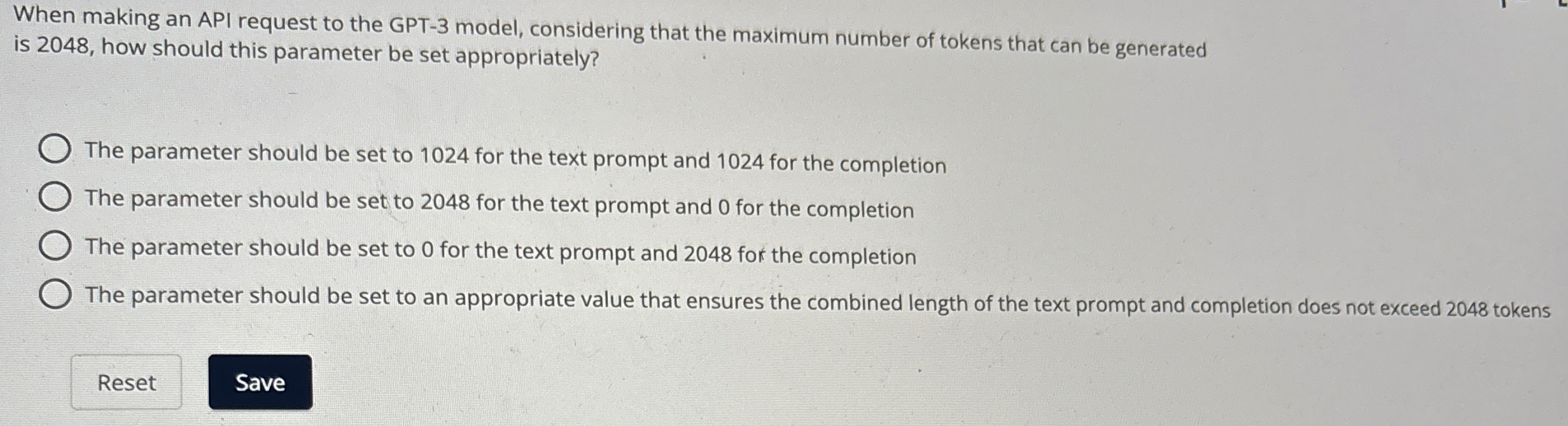

When making an API request to the GPT model, considering that the maximum number of tokens that can be generated is …, how should this parameter be set appropriately?

The parameter should be set to for the text prompt and for the completion

The parameter should be set to for the text prompt and for the completion

The parameter should be set to for the text prompt and for the completion

The parameter should be set to an appropriate value that ensures the combined length of the text prompt and completion does not exceed tokens
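The last option matches how the completion limit works in OpenAI's GPT-3 Completions API. The prompt's tokens plus the `max_tokens` value must fit within the model's context length, so the completion can use at most whatever the context window leaves after the prompt. The minimal sketch below computes such a value. The function name `completion_budget` and the numbers in the usage lines are hypothetical. It also assumes the prompt's token count has already been measured, for example with a tokenizer.

```python
def completion_budget(context_limit: int, prompt_tokens: int, desired: int) -> int:
    """Return a max_tokens value so that prompt + completion stays within context_limit.

    context_limit: total tokens the model accepts for prompt plus completion (assumed value).
    prompt_tokens: token count of the prompt, measured beforehand.
    desired: the completion length you would like before clamping.
    """
    if context_limit <= 0:
        raise ValueError("context_limit must be positive")
    if prompt_tokens < 0 or desired < 0:
        raise ValueError("token counts must be non-negative")
    remaining = context_limit - prompt_tokens
    if remaining <= 0:
        raise ValueError("the prompt alone fills or exceeds the context window")
    # Clamp the requested completion length to the space left in the window.
    return min(desired, remaining)


# Hypothetical numbers for illustration; use the limit that applies to your model.
limit = 1000       # hypothetical context window size, in tokens
prompt_len = 300   # hypothetical prompt length, in tokens
budget = completion_budget(limit, prompt_len, desired=900)
print(budget)  # 700
assert prompt_len + budget <= limit
```

Clamping with `min` keeps a shorter requested completion unchanged while preventing a longer one from pushing the combined length past the limit.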
