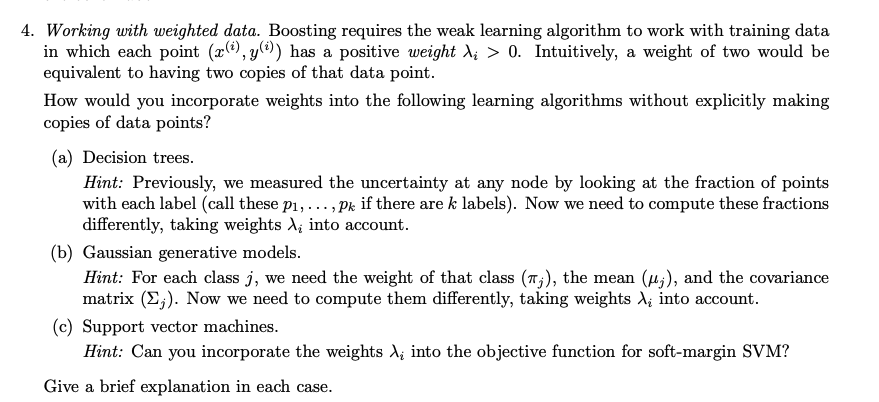

Question: Working with weighted data. Boosting requires the weak learning algorithm to work with training data in which each point $(x^{(i)}, y^{(i)})$ has a positive weight. Intuitively, a weight of two would be equivalent to having two copies of that data point.
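The "weight of two equals two copies" intuition can be checked on any weighted statistic. Below is a minimal Python sketch that assumes integer weights so the copies can actually be built. The helper names `weighted_mean` and `expand_copies` are hypothetical. It shows that the weighted average over the original points equals the plain average over the duplicated points.

```python
def weighted_mean(values, weights):
    """Weighted average: each value counts in proportion to its weight."""
    total = sum(weights)
    return sum(w * v for v, w in zip(values, weights)) / total


def expand_copies(values, weights):
    """Materialize integer weights as explicit copies (what weighting avoids).

    Only meaningful for non-negative integer weights."""
    return [v for v, w in zip(values, weights) for _ in range(w)]


values = [1.0, 4.0, 7.0]
weights = [2, 1, 1]                       # the first point counts twice
copied = expand_copies(values, weights)   # [1.0, 1.0, 4.0, 7.0]
print(weighted_mean(values, weights))     # 3.25
print(sum(copied) / len(copied))          # 3.25
```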

How would you incorporate weights into the following learning algorithms without explicitly making copies of data points?

(a) Decision trees.

Hint: Previously, we measured the uncertainty at any node by looking at the fraction of points with each label (one fraction for each possible label). Now we need to compute these fractions differently, taking weights into account.
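One way to apply the hint is to replace each label's count with the total weight of the points carrying that label. Each child of a split is then weighted by its share of the total weight instead of its share of the points. The sketch below assumes entropy or Gini impurity as the uncertainty measure. All function names are illustrative and do not come from the course material.

```python
import math
from collections import defaultdict


def weighted_label_fractions(labels, weights):
    """Fraction of the node's total weight carried by each label.

    With every weight equal to 1 this reduces to the usual count-based fractions."""
    mass = defaultdict(float)
    for label, w in zip(labels, weights):
        mass[label] += w
    total = sum(mass.values())
    return {label: m / total for label, m in mass.items()}


def entropy(fractions):
    """Entropy in bits of a label distribution given as {label: fraction}."""
    return -sum(p * math.log2(p) for p in fractions.values() if p > 0)


def gini(fractions):
    """Gini impurity of a label distribution given as {label: fraction}."""
    return 1.0 - sum(p * p for p in fractions.values())


def split_uncertainty(left, right, impurity=gini):
    """Uncertainty after a split. Each child's impurity is weighted by its share
    of the total weight, not its share of the points.

    left, right: non-empty lists of (label, weight) pairs."""
    def child(pairs):
        labels = [lab for lab, _ in pairs]
        weights = [w for _, w in pairs]
        return sum(weights), impurity(weighted_label_fractions(labels, weights))

    w_left, h_left = child(left)
    w_right, h_right = child(right)
    return (w_left * h_left + w_right * h_right) / (w_left + w_right)


node = [("+", 0.5), ("+", 0.25), ("-", 0.25)]
fractions = weighted_label_fractions([lab for lab, _ in node], [w for _, w in node])
print(fractions)        # {'+': 0.75, '-': 0.25}
print(gini(fractions))  # 0.375
```

When every weight equals 1, these functions reproduce the unweighted computations. For comparison, scikit-learn exposes the same idea through the `sample_weight` argument of `DecisionTreeClassifier.fit`.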

(b) Gaussian generative models.

Hint: For each class, we need the weight of that class, its mean, and its covariance matrix. Now we need to compute them differently, taking weights into account.
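For Gaussian generative models, every count becomes a sum of weights. The class weight is the fraction of the total weight that falls in the class, and the mean and covariance become weighted averages. Below is a minimal stdlib-only sketch. It assumes the maximum-likelihood covariance, which divides by the class's total weight in the same way the unweighted estimate divides by the class size. `weighted_gaussian_params` is a hypothetical name.

```python
def weighted_gaussian_params(X, y, weights):
    """Per-class (class weight, mean, covariance) using per-point weights.

    X: list of feature vectors (lists of floats); y: class labels;
    weights: positive floats, one per point."""
    total = sum(weights)
    d = len(X[0])
    params = {}
    for c in set(y):
        idx = [i for i, label in enumerate(y) if label == c]
        w_c = sum(weights[i] for i in idx)  # total weight of class c
        # Weighted mean: each point contributes in proportion to its weight.
        mean = [sum(weights[i] * X[i][j] for i in idx) / w_c for j in range(d)]
        # Weighted (maximum-likelihood) covariance around the weighted mean.
        cov = [[sum(weights[i] * (X[i][a] - mean[a]) * (X[i][b] - mean[b]) for i in idx) / w_c
                for b in range(d)]
               for a in range(d)]
        params[c] = (w_c / total, mean, cov)
    return params


X = [[0.0, 0.0], [2.0, 2.0], [5.0, 1.0]]
y = [0, 0, 1]
weights = [3.0, 1.0, 1.0]  # same as three copies of the first point
params = weighted_gaussian_params(X, y, weights)
print(params[0])  # (0.8, [0.5, 0.5], [[0.75, 0.75], [0.75, 0.75]])
```

With these weights, class 0 gets exactly the parameters it would get from the unweighted estimates on the data set `[0,0], [0,0], [0,0], [2,2]`.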

(c) Support vector machines.

Hint: Can you incorporate the weights into the objective function for the soft-margin SVM?

Give a brief explanation in each case.

Original Data: Boosting Round: Boosting Round: Boosting Round:

(a) Training records chosen during boosting. (b) Weights of training records.

Also, we know that the split points for the decision stump are and for Round and respectively. Calculate the AdaBoost training error rate and the importance of base classifiers C C and C.

Hint: See Slide points
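The AdaBoost quantities in the second question follow from a few formulas. Each base classifier's error is the total weight of the records it misclassifies divided by the total weight, and its importance is $\alpha = \frac{1}{2}\ln\frac{1-\varepsilon}{\varepsilon}$. The ensemble then predicts the sign of $\sum_t \alpha_t C_t(x)$. The sketch below assumes this common normalized form; the slides mentioned in the hint may use a slightly different normalization. The example values are hypothetical and are not the question's data. The function names are illustrative, and ties in the ensemble vote are broken toward +1 by assumption.

```python
import math


def weighted_error(weights, y_true, y_pred):
    """Weighted error of one base classifier: misclassified weight / total weight."""
    wrong = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    return wrong / sum(weights)


def importance(eps):
    """AdaBoost importance (vote weight) 0.5 * ln((1 - eps) / eps); requires 0 < eps < 1."""
    return 0.5 * math.log((1.0 - eps) / eps)


def ensemble_predict(alphas, predictions_per_round):
    """Sign of sum_t alpha_t * C_t(x) per record (labels in {-1, +1}; ties go to +1)."""
    n = len(predictions_per_round[0])
    scores = [sum(a * preds[i] for a, preds in zip(alphas, predictions_per_round))
              for i in range(n)]
    return [1 if score >= 0 else -1 for score in scores]


def ensemble_error(alphas, predictions_per_round, y_true):
    """Unweighted training error rate of the combined classifier."""
    combined = ensemble_predict(alphas, predictions_per_round)
    return sum(c != t for c, t in zip(combined, y_true)) / len(y_true)


# Hypothetical round: 8 records with equal weight, 2 of them misclassified.
weights = [0.125] * 8
y_true = [1, 1, 1, 1, -1, -1, -1, -1]
y_pred = [1, 1, 1, -1, -1, -1, -1, 1]
eps = weighted_error(weights, y_true, y_pred)
print(eps, importance(eps))  # 0.25 and 0.5 * ln(3), about 0.549
```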
