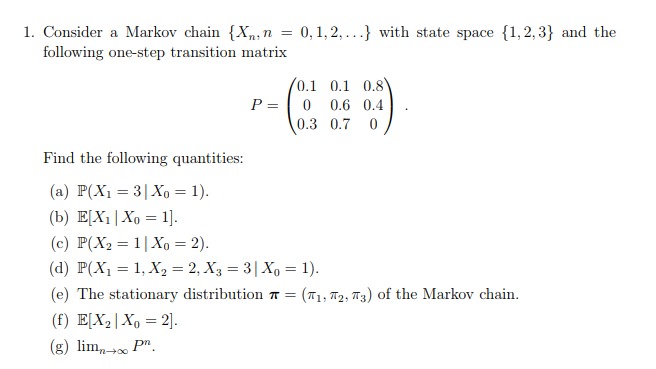

Question: workings needed

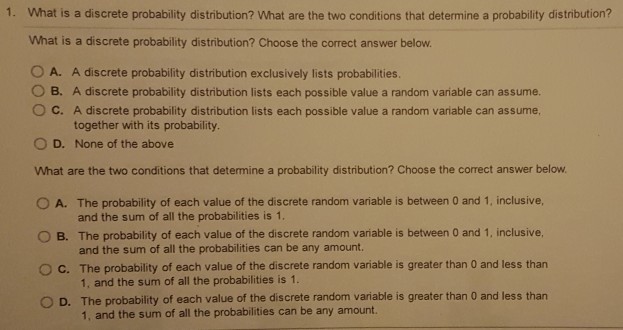

1. Consider a Markov chain $\{X_n, n = 0, 1, 2, \ldots\}$ with state space $\{1, 2, 3\}$ and the following one-step transition matrix:

$$
P = \begin{pmatrix}
0.1 & 0.1 & 0.8 \\
0 & 0.6 & 0.4 \\
0.3 & 0.7 & 0
\end{pmatrix}
$$

Find the following quantities:

(a) $P(X_1 = 3 \mid X_0 = 1)$.
(b) $E[X_1 \mid X_0 = 1]$.
(c) $P(X_2 = 1 \mid X_0 = 2)$.
(d) $P(X_1 = 1, X_2 = 2, X_3 = 3 \mid X_0 = 1)$.
(e) The stationary distribution $\pi = (\pi_1, \pi_2, \pi_3)$ of the Markov chain.
(f) $E[X_2 \mid X_0 = 2]$.
(g) $\lim_{n \to \infty} P^n$.

What is a discrete probability distribution? What are the two conditions that determine a probability distribution?

What is a discrete probability distribution? Choose the correct answer below.

A. A discrete probability distribution exclusively lists probabilities.
B. A discrete probability distribution lists each possible value a random variable can assume.
C. A discrete probability distribution lists each possible value a random variable can assume, together with its probability.
D. None of the above.

What are the two conditions that determine a probability distribution? Choose the correct answer below.

A. The probability of each value of the discrete random variable is between 0 and 1, inclusive, and the sum of all the probabilities is 1.
B. The probability of each value of the discrete random variable is between 0 and 1, inclusive, and the sum of all the probabilities can be any amount.
C. The probability of each value of the discrete random variable is greater than 0 and less than 1, and the sum of all the probabilities is 1.
D. The probability of each value of the discrete random variable is greater than 0 and less than 1, and the sum of all the probabilities can be any amount.
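Since the Markov chain question asks for workings, here is a minimal Python sketch that may help check the arithmetic for parts (a) to (g). It assumes the matrix rows are (0.1, 0.1, 0.8), (0, 0.6, 0.4) and (0.3, 0.7, 0), and it reads part (g) as the limit of the n-step transition matrix $P^n$; both readings are assumptions about the flattened question text. The helper names `matmul` and `expected_state` are made up for this illustration, and exact fractions are used so no rounding enters the results.

```python
from fractions import Fraction as F

# One-step transition matrix (row i = current state i+1), as read from the question.
P = [
    [F(1, 10), F(1, 10), F(8, 10)],
    [F(0),     F(6, 10), F(4, 10)],
    [F(3, 10), F(7, 10), F(0)],
]
states = [1, 2, 3]


def matmul(A, B):
    """Multiply two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def expected_state(row):
    """Expected value of the state when its distribution is `row`."""
    return sum(s * p for s, p in zip(states, row))


P2 = matmul(P, P)  # two-step transition probabilities

a = P[0][2]                       # (a) P(X1=3 | X0=1) = 4/5
b = expected_state(P[0])          # (b) 1(0.1) + 2(0.1) + 3(0.8) = 27/10
c = P2[1][0]                      # (c) entry (2,1) of P^2 = 3/25
d = P[0][0] * P[0][1] * P[1][2]   # (d) path 1 -> 1 -> 2 -> 3 = 1/250
f = expected_state(P2[1])         # (f) 1(0.12) + 2(0.64) + 3(0.24) = 53/25

# (e) Solving pi = pi P by hand: the first column gives pi3 = 3*pi1 and the
# second column gives pi2 = (11/2)*pi1; normalise so the entries sum to 1.
weights = [F(1), F(11, 2), F(3)]
pi = [w / sum(weights) for w in weights]  # (2/19, 11/19, 6/19)

# Check that pi really is stationary: pi P == pi.
pi_P = [sum(pi[i] * P[i][j] for i in range(3)) for j in range(3)]
assert pi_P == pi

# (g) Under the P^n reading: the chain is irreducible and aperiodic (P[0][0] > 0),
# so every row of P^n tends to pi. Square P six times to get P^64 as a check.
Pn = P
for _ in range(6):
    Pn = matmul(Pn, Pn)
max_gap = max(abs(float(Pn[i][j] - pi[j])) for i in range(3) for j in range(3))

print(a, b, c, d, f)
print(pi)
print(max_gap < 1e-9)
```

In decimals these are 0.8, 2.7, 0.12, 0.004 and 2.12 for (a), (b), (c), (d) and (f), with $\pi = (2/19, 11/19, 6/19)$ for (e); under the stated reading of (g), each row of the limiting matrix equals $\pi$.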
