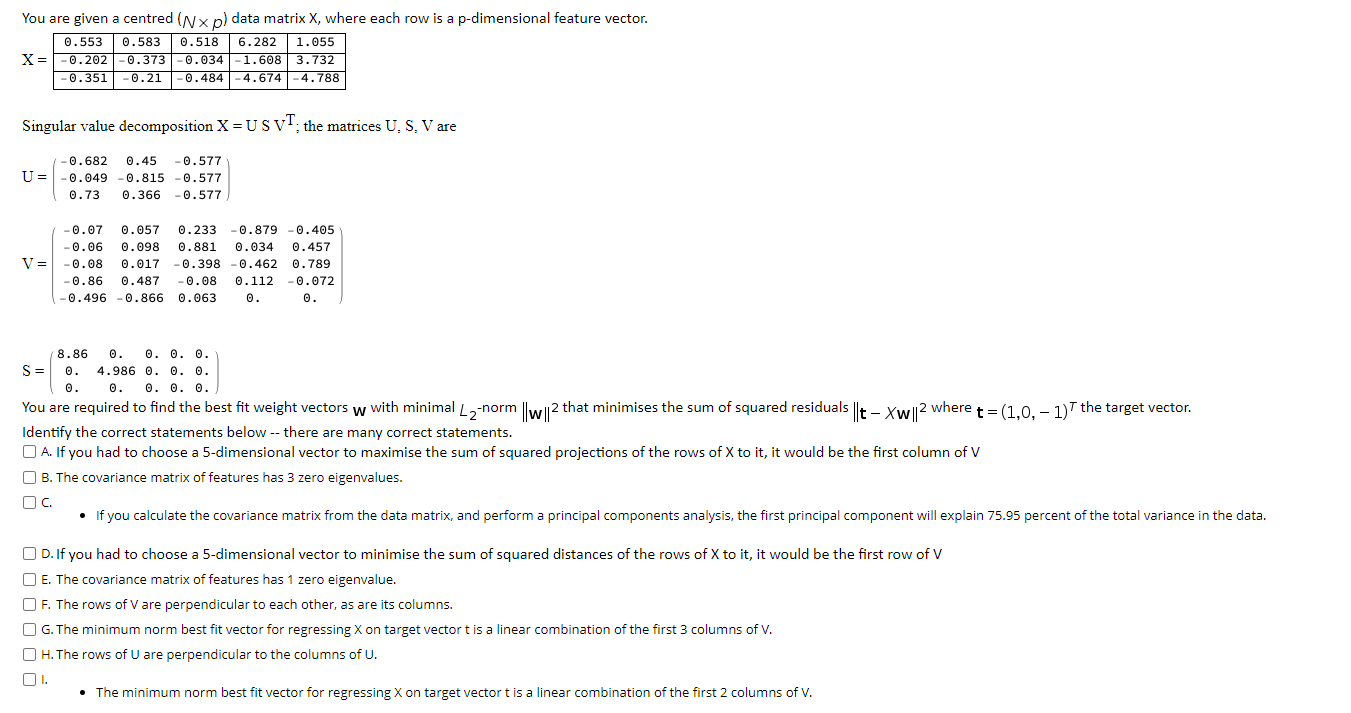

Question:

You are given a centred $N \times p$ data matrix $X$, where each row is a $p$-dimensional feature vector:

$$
X = \begin{pmatrix}
0.553 & 0.583 & 0.518 & 6.282 & 1.055 \\
-0.202 & -0.373 & -0.034 & -1.608 & 3.732 \\
-0.351 & -0.21 & -0.484 & -4.674 & -4.788
\end{pmatrix}
$$

The singular value decomposition $X = U S V^T$ has the factors

$$
U = \begin{pmatrix}
-0.682 & 0.45 & -0.577 \\
-0.049 & -0.815 & -0.577 \\
0.73 & 0.366 & -0.577
\end{pmatrix},
\qquad
S = \begin{pmatrix}
8.86 & 0 & 0 & 0 & 0 \\
0 & 4.986 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0
\end{pmatrix},
$$

$$
V = \begin{pmatrix}
-0.07 & 0.057 & 0.233 & -0.879 & -0.405 \\
-0.06 & 0.098 & 0.881 & 0.034 & 0.457 \\
-0.08 & 0.017 & -0.398 & -0.462 & 0.789 \\
-0.86 & 0.487 & -0.08 & 0.112 & -0.072 \\
-0.496 & -0.866 & 0.063 & 0 & 0
\end{pmatrix}
$$

You are required to find the best-fit weight vector $w$ with minimal $L_2$-norm $\|w\|_2$ that minimises the sum of squared residuals $\|t - Xw\|_2^2$, where $t = (1, 0, -1)^T$ is the target vector.

Identify the correct statements below (there are many correct statements).

- A. If you had to choose a 5-dimensional vector to maximise the sum of squared projections of the rows of $X$ onto it, it would be the first column of $V$.
- B. The covariance matrix of the features has 3 zero eigenvalues.
- C. If you calculate the covariance matrix from the data matrix and perform a principal components analysis, the first principal component will explain 75.95 percent of the total variance in the data.
- D. If you had to choose a 5-dimensional vector to minimise the sum of squared distances of the rows of $X$ to it, it would be the first row of $V$.
- E. The covariance matrix of the features has 1 zero eigenvalue.
- F. The rows of $V$ are perpendicular to each other, as are its columns.
- G. The minimum-norm best-fit vector for regressing $X$ on the target vector $t$ is a linear combination of the first 3 columns of $V$.
- H. The rows of $U$ are perpendicular to the columns of $U$.
- I. The minimum-norm best-fit vector for regressing $X$ on the target vector $t$ is a linear combination of the first 2 columns of $V$.
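A short plain-Python sketch (no NumPy assumed) can check the data against the stated singular values. Three checks apply:

- The columns of $X$ should sum to roughly zero.
- $\|X\|_F^2$ should equal $\sum_i \sigma_i^2$.
- The feature covariance $X^T X / (N-1)$ has eigenvalues $\sigma_i^2 / (N-1)$, padded with zeros up to $p$. The share of variance captured by the $k$-th principal component is therefore $\sigma_k^2 / \sum_i \sigma_i^2$.

The threshold `TOL` for treating a singular value as zero is an assumption.

```python
# Sketch: sanity-check X and its singular values using only the standard language.
# Assumption: values are transcribed from the question (rounded to 3 decimals).

X = [
    [0.553, 0.583, 0.518, 6.282, 1.055],
    [-0.202, -0.373, -0.034, -1.608, 3.732],
    [-0.351, -0.21, -0.484, -4.674, -4.788],
]
sigma = [8.86, 4.986, 0.0]  # diagonal entries of S
TOL = 1e-9  # assumed cutoff for calling a singular value zero

p = len(X[0])  # number of features

# A centred matrix has (numerically) zero column sums.
col_sums = [sum(row[j] for row in X) for j in range(p)]

# Squared Frobenius norm of X should match the sum of squared singular values.
frob_sq = sum(x * x for row in X for x in row)
sv_sq = [s * s for s in sigma]

# Covariance eigenvalues are sigma_i^2 / (N - 1), so the (N - 1) factor cancels
# in the explained-variance ratio sigma_k^2 / sum_i sigma_i^2.
explained = [s2 / sum(sv_sq) for s2 in sv_sq]

# rank = number of nonzero singular values; the p x p covariance matrix
# has p - rank zero eigenvalues.
rank = sum(1 for s in sigma if s > TOL)
zero_eigs = p - rank

print("column sums:", [round(c, 3) for c in col_sums])
print("||X||_F^2 =", round(frob_sq, 3), "  sum of sigma^2 =", round(sum(sv_sq), 3))
print("explained variance ratios:", [round(e, 4) for e in explained])
print("zero eigenvalues of the covariance matrix:", zero_eigs)
```

The small mismatches (of order $10^{-3}$) come from the three-decimal rounding of the printed values.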
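The minimum-norm least-squares solution can be read off the SVD through the pseudoinverse:

$$
w = V S^{+} U^T t = \sum_{\sigma_i > 0} \frac{u_i^T t}{\sigma_i}\, v_i
$$

It therefore lies in the span of the columns of $V$ whose singular values are nonzero. The sketch below computes those coefficients from the factors as given in the question (rounded to three decimals), assembles $w$, and checks that its components along the remaining columns of $V$ vanish up to rounding. It is plain Python; the helpers `column` and `dot` are illustrative names, not from any library.

```python
# Sketch: minimum-norm least-squares weights w = V S^+ U^T t from given SVD factors.
# Assumption: U, sigma, V, t are transcribed from the question (3-decimal rounding).

U = [
    [-0.682, 0.45, -0.577],
    [-0.049, -0.815, -0.577],
    [0.73, 0.366, -0.577],
]
sigma = [8.86, 4.986, 0.0]  # diagonal entries of S
V = [
    [-0.07, 0.057, 0.233, -0.879, -0.405],
    [-0.06, 0.098, 0.881, 0.034, 0.457],
    [-0.08, 0.017, -0.398, -0.462, 0.789],
    [-0.86, 0.487, -0.08, 0.112, -0.072],
    [-0.496, -0.866, 0.063, 0.0, 0.0],
]
t = [1.0, 0.0, -1.0]
TOL = 1e-9  # assumed cutoff: singular values at or below this count as zero

n, p = len(U), len(V)


def column(M, k):
    """Return column k of a list-of-rows matrix."""
    return [row[k] for row in M]


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


# Coordinates of w in the basis formed by the columns of V; the pseudoinverse
# leaves the coordinate at 0 wherever the singular value is zero.
coeffs = [0.0] * p
for k, s in enumerate(sigma):
    if s > TOL:
        coeffs[k] = dot(column(U, k), t) / s

# w = V @ coeffs
w = [dot(V[j], coeffs) for j in range(p)]

# Components of w along columns 3..5 of V (should be ~0 up to rounding).
tail = [dot(column(V, k), w) for k in range(2, p)]

# Fitted values X w = U S coeffs, using X = U S V^T and V^T V = I.
fitted = [sum(U[i][k] * sigma[k] * coeffs[k] for k in range(len(sigma))) for i in range(n)]

print("coefficients on columns of V:", [round(c, 4) for c in coeffs])
print("w =", [round(x, 4) for x in w])
print("components along columns 3-5 of V:", [round(x, 5) for x in tail])
print("fitted X w =", [round(f, 3) for f in fitted])
```

The third column of $U$ is proportional to $(1, 1, 1)^T$, so it is orthogonal to every column of the centred $X$. Because $t$ sums to zero it is also orthogonal to that column. The fitted values therefore come out close to $t$ itself.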
