Question:

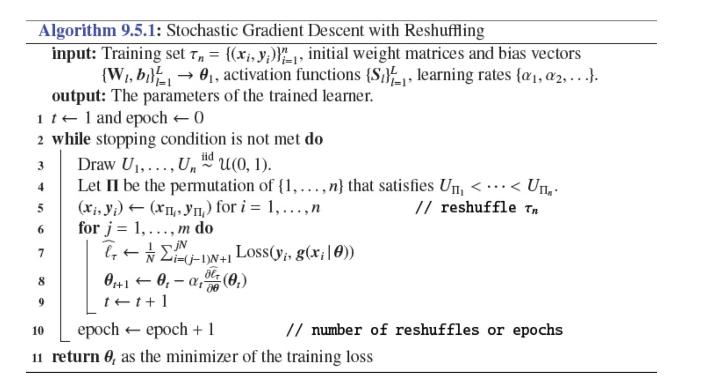

Suppose that in the stochastic gradient descent method we wish to repeatedly draw minibatches of size \(N\) from \(\tau_{n}\), where we assume that \(N \times m = n\) for some large integer \(m\). Instead of repeatedly resampling from \(\tau_{n}\), an alternative is to reshuffle \(\tau_{n}\) via a random permutation \(\Pi\) and then advance sequentially through the reshuffled training set to construct \(m\) non-overlapping minibatches. A single traversal of such a reshuffled training set is called an epoch. The following pseudo-code describes the procedure.
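The reshuffling step described above can be sketched in a few lines of NumPy. This is only an illustration of how one epoch could be split into \(m\) disjoint minibatches; the function name `reshuffled_minibatches` and the toy sizes are assumptions, not taken from the book.

```python
import numpy as np

def reshuffled_minibatches(n, N, rng):
    """Yield m = n // N disjoint index arrays that together cover one epoch."""
    if n % N != 0:
        raise ValueError("this sketch assumes N * m = n exactly")
    perm = rng.permutation(n)            # plays the role of the random permutation Pi
    for start in range(0, n, N):
        yield perm[start:start + N]      # next non-overlapping minibatch of size N

# Toy usage (hypothetical sizes): n = 12 training points, minibatch size N = 4.
rng = np.random.default_rng(0)
batches = list(reshuffled_minibatches(n=12, N=4, rng=rng))
```

Each training index appears in exactly one minibatch per epoch, which is the difference from resampling, where a point may be drawn several times or not at all.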

Write Python code that implements stochastic gradient descent with data reshuffling, and use it to train the neural net in Section 9.5.1.

Step by Step Answer:
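A minimal sketch of the training loop follows. The neural net of Section 9.5.1 is not reproduced on this page, so a linear least-squares model stands in for it here; that substitution, the names `sgd_reshuffle` and `squared_loss_grad`, and the step size and epoch count are all assumptions made for illustration.

```python
import numpy as np

def sgd_reshuffle(grad, theta0, X, y, N, epochs, lr, rng):
    """SGD in which each epoch visits every training point exactly once."""
    n = X.shape[0]
    if n % N != 0:
        raise ValueError("this sketch assumes N * m = n exactly")
    theta = np.array(theta0, dtype=float)         # work on a copy
    for _ in range(epochs):
        perm = rng.permutation(n)                 # reshuffle once per epoch
        for start in range(0, n, N):
            idx = perm[start:start + N]           # next disjoint minibatch
            theta -= lr * grad(theta, X[idx], y[idx])
    return theta

def squared_loss_grad(theta, Xb, yb):
    # Stand-in gradient: mean squared error of a linear model on one minibatch.
    return 2.0 * Xb.T @ (Xb @ theta - yb) / Xb.shape[0]

# Synthetic data for the stand-in model (hypothetical, not the book's data set).
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
theta_true = np.array([1.0, -2.0, 0.5])
y = X @ theta_true + 0.01 * rng.normal(size=200)

theta_hat = sgd_reshuffle(squared_loss_grad, np.zeros(3), X, y,
                          N=20, epochs=50, lr=0.05, rng=rng)
```

To train the network from Section 9.5.1 instead, one would pass a `grad` function that returns the backpropagated gradient of the network's loss on the minibatch, with `theta` holding the flattened weights and biases; the reshuffling loop itself would stay the same.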

Data Science And Machine Learning Mathematical And Statistical Methods

ISBN: 9781118710852

1st Edition

Authors: Dirk P. Kroese, Thomas Taimre, Radislav Vaisman, Zdravko Botev