
Question:

Assume that the model y = Xβ + u satisfies the Gauss-Markov assumptions, let G be a (k + 1) × (k + 1) nonsingular, nonrandom matrix, and define δ = Gβ, so that δ is also a (k + 1) × 1 vector. Let β̂ be the (k + 1) × 1 vector of OLS estimators and define δ̂ = Gβ̂ as the OLS estimator of δ.

(i) Show that E(δ̂|X) = δ.
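A short derivation sketch. It uses only the fact that G is nonrandom, so it passes through the conditional expectation, and the Gauss-Markov result that OLS is unbiased, E(β̂|X) = β:

$$\mathrm{E}(\hat\delta \mid X) = \mathrm{E}(G\hat\beta \mid X) = G\,\mathrm{E}(\hat\beta \mid X) = G\beta = \delta.$$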

(ii) Find Var(δ̂|X) in terms of σ², X, and G.
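One way to get the answer: combine the rule Var(Aw) = A Var(w) A′ for a nonrandom matrix A with the Gauss-Markov result Var(β̂|X) = σ²(X′X)⁻¹:

$$\mathrm{Var}(\hat\delta \mid X) = G\,\mathrm{Var}(\hat\beta \mid X)\,G' = G\left[\sigma^2 (X'X)^{-1}\right]G' = \sigma^2\, G(X'X)^{-1}G'.$$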

(iii) Use Problem E.3 to verify that δ̂ and the appropriate estimate of Var(δ̂|X) are obtained from the regression of y on XG⁻¹.
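The claim can also be checked directly from the OLS formulas. Write Z = XG⁻¹ (Z is notation introduced here) and use (G⁻¹)′ = (G′)⁻¹. The coefficient vector from regressing y on Z is δ̂. The residuals are the same as in the original regression, so σ̂² = SSR/(n − k − 1) is unchanged. The reported covariance matrix is therefore σ̂²G(X′X)⁻¹G′, which is the part (ii) formula with σ² replaced by σ̂²:

$$\begin{aligned}
Z'Z &= (G^{-1})'X'XG^{-1}, \qquad (Z'Z)^{-1} = G(X'X)^{-1}G', \\
(Z'Z)^{-1}Z'y &= G(X'X)^{-1}G'(G')^{-1}X'y = G(X'X)^{-1}X'y = G\hat\beta = \hat\delta, \\
y - Z\hat\delta &= y - XG^{-1}G\hat\beta = y - X\hat\beta = \hat u, \\
\hat\sigma^2 (Z'Z)^{-1} &= \hat\sigma^2\, G(X'X)^{-1}G' = \widehat{\mathrm{Var}}(\hat\delta \mid X).
\end{aligned}$$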

(iv) Now, let c be a (k + 1) × 1 vector with at least one nonzero entry. For concreteness, assume that cₖ ≠ 0. Define θ = c′β, so that θ is a scalar. Define δⱼ = βⱼ, j = 0, 1, …, k − 1, and δₖ = θ.

Show how to define a (k + 1) × (k + 1) nonsingular matrix G so that δ = Gβ.

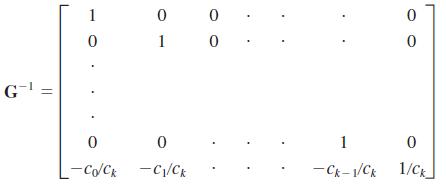
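For δ = Gβ to hold for every β, the first k rows of G must be rows of the identity matrix that pick out β₀, …, βₖ₋₁, and the last row must be c′. G is lower triangular with diagonal entries 1, …, 1, cₖ, so its determinant is cₖ, and it is nonsingular exactly because cₖ ≠ 0:

$$G = \begin{pmatrix}
1 & 0 & \cdots & 0 & 0 \\
0 & 1 & \cdots & 0 & 0 \\
\vdots & & \ddots & & \vdots \\
0 & 0 & \cdots & 1 & 0 \\
c_0 & c_1 & \cdots & c_{k-1} & c_k
\end{pmatrix}, \qquad \det G = c_k \neq 0.$$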

(v) Show that for the choice of G in part (iv),

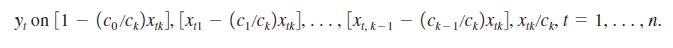
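For reference, the inverse can be written in block form and checked by multiplication. The notation c̃ = (c₀, …, cₖ₋₁)′ is introduced here. Every row of G⁻¹ except the last is a row of the identity matrix, and the last row is (−c₀/cₖ, …, −cₖ₋₁/cₖ, 1/cₖ):

$$G = \begin{pmatrix} I_k & 0 \\ \tilde c' & c_k \end{pmatrix}, \qquad
G^{-1} = \begin{pmatrix} I_k & 0 \\ -\tilde c'/c_k & 1/c_k \end{pmatrix}, \qquad
GG^{-1} = \begin{pmatrix} I_k & 0 \\ \tilde c' - (c_k/c_k)\,\tilde c' & c_k/c_k \end{pmatrix} = I_{k+1}.$$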

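Use this expression for G⁻¹ and part (iii) to conclude that θ̂ and its standard error are obtained as the coefficient on xₜₖ/cₖ in the regression of

$$y_t \ \text{on}\ \left[1 - (c_0/c_k)\,x_{tk}\right],\ \left[x_{t1} - (c_1/c_k)\,x_{tk}\right],\ \ldots,\ \left[x_{t,k-1} - (c_{k-1}/c_k)\,x_{tk}\right],\ x_{tk}/c_k, \qquad t = 1, \ldots, n.$$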
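As a numerical sanity check, here is a sketch in pure Python using simulated data. The sample size, the vector c, the true coefficients and the helper names (`ols`, `check_reparametrization`) are all hypothetical. The error-variance estimator is assumed to be σ̂² = SSR/(n − k − 1). The code regresses y on X to get θ̂ = c′β̂ with standard error √(c′V̂c). It then regresses y on the transformed columns xₜⱼ − (cⱼ/cₖ)xₜₖ for j < k (with xₜ₀ = 1), followed by xₜₖ/cₖ. Finally it compares the last coefficient and its standard error with θ̂ and √(c′V̂c).

```python
import random


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def inverse(a):
    """Invert a square nonsingular matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    # Augment a with the identity matrix: [a | I]
    m = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    # The right half now holds the inverse of a
    return [row[n:] for row in m]


def ols(X, y):
    """OLS coefficients and estimated covariance sigma_hat^2 (X'X)^{-1}, with sigma_hat^2 = SSR / (n - p)."""
    n, p = len(X), len(X[0])
    xtx_inv = inverse(matmul(transpose(X), X))
    xty = matmul(transpose(X), [[v] for v in y])
    beta = [row[0] for row in matmul(xtx_inv, xty)]
    resid = [yi - sum(b * x for b, x in zip(beta, row)) for yi, row in zip(y, X)]
    sigma2 = sum(e * e for e in resid) / (n - p)
    cov = [[sigma2 * v for v in row] for row in xtx_inv]
    return beta, cov


def check_reparametrization(n=50, c=(1.0, 2.0, -0.5), seed=0):
    """Compare theta_hat = c'beta_hat and its standard error with the coefficient on x_k / c_k."""
    rng = random.Random(seed)
    k = len(c) - 1
    assert c[k] != 0, "the construction requires c_k != 0"
    # Simulated design: intercept column plus k standard-normal regressors
    X = [[1.0] + [rng.gauss(0, 1) for _ in range(k)] for _ in range(n)]
    true_beta = [1.0] + [0.5 * (j + 1) for j in range(k)]  # hypothetical parameter values
    y = [sum(b * x for b, x in zip(true_beta, row)) + rng.gauss(0, 1) for row in X]

    # Original regression: theta_hat = c'beta_hat and its variance c' V_hat c
    beta_hat, cov = ols(X, y)
    theta_hat = sum(cj * bj for cj, bj in zip(c, beta_hat))
    var_theta = sum(c[i] * cov[i][j] * c[j] for i in range(k + 1) for j in range(k + 1))

    # Regression on X G^{-1}: columns x_j - (c_j / c_k) x_k for j < k, then x_k / c_k
    ck = c[k]
    Z = [[row[j] - (c[j] / ck) * row[k] for j in range(k)] + [row[k] / ck] for row in X]
    delta_hat, cov_z = ols(Z, y)
    return beta_hat, theta_hat, var_theta ** 0.5, delta_hat, cov_z[k][k] ** 0.5


beta_hat, theta_hat, se_theta, delta_hat, se_last = check_reparametrization()
print(theta_hat, delta_hat[-1])  # should agree up to floating-point rounding
print(se_theta, se_last)         # should agree up to floating-point rounding
```

The first k coefficients of the transformed regression should also reproduce β̂₀, …, β̂ₖ₋₁, because the first k rows of G are rows of the identity. The matrix inverse is a plain Gauss-Jordan elimination only so that the example needs no third-party packages.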

This regression is exactly the one obtained by writing βₖ in terms of θ and β₀, β₁, …, βₖ₋₁, plugging the result into the original model, and rearranging. Therefore, we can formally justify the trick we use throughout the text for obtaining the standard error of a linear combination of parameters.
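The substitution can be written out explicitly. θ = c₀β₀ + c₁β₁ + … + cₖβₖ and cₖ ≠ 0, so βₖ can be solved for and plugged into the model yₜ = β₀ + β₁xₜ₁ + … + βₖxₜₖ + uₜ. Collecting terms gives the equation below. The regressors multiplying β₀, …, βₖ₋₁ and θ are precisely the columns of XG⁻¹:

$$\begin{aligned}
\beta_k &= \frac{\theta - c_0\beta_0 - c_1\beta_1 - \cdots - c_{k-1}\beta_{k-1}}{c_k}, \\
y_t &= \beta_0\left[1 - \frac{c_0}{c_k}x_{tk}\right] + \beta_1\left[x_{t1} - \frac{c_1}{c_k}x_{tk}\right] + \cdots + \beta_{k-1}\left[x_{t,k-1} - \frac{c_{k-1}}{c_k}x_{tk}\right] + \theta\,\frac{x_{tk}}{c_k} + u_t.
\end{aligned}$$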


Source: Jeffrey Wooldridge, Introductory Econometrics: A Modern Approach, 7th Edition (ISBN 9781337558860).