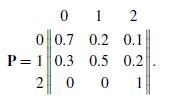

3.2.5 A Markov chain has the transition probability matrix

The Markov chain starts at time zero in state $X_0 = 0$. Let $T = \min\{n \ge 0 : X_n = 2\}$ be the first time that the process reaches state 2. Eventually, the process will reach and be absorbed into state 2. If in some experiment we observed such a process and noted that absorption had not yet taken place, we might be interested in the conditional probability that the process is in state 0 (or 1), given that absorption had not yet taken place. Determine $\Pr\{X_3 = 0 \mid X_0, T > 3\}$.

Hint: The event $\{T > 3\}$ is exactly the same as the event $\{X_3 \ne 2\} = \{X_3 = 0\} \cup \{X_3 = 1\}$.
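A short derivation showing how the hint reduces the question to entries of the third power of the transition matrix. Here $P^{(3)}_{ij}$ denotes the $(i,j)$ entry of $\mathbf{P}^3$ (a notation assumed for this sketch), and the chain starts at $X_0 = 0$. The numerator simplifies because $\{X_3 = 0\} \subseteq \{T > 3\}$, and the denominator uses the hint directly:

$$
\begin{aligned}
\Pr\{X_3 = 0 \mid X_0 = 0,\ T > 3\}
  &= \frac{\Pr\{X_3 = 0,\ T > 3 \mid X_0 = 0\}}{\Pr\{T > 3 \mid X_0 = 0\}} \\
  &= \frac{\Pr\{X_3 = 0 \mid X_0 = 0\}}{\Pr\{X_3 = 0 \mid X_0 = 0\} + \Pr\{X_3 = 1 \mid X_0 = 0\}} \\
  &= \frac{P^{(3)}_{00}}{P^{(3)}_{00} + P^{(3)}_{01}}.
\end{aligned}
$$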

$$
\mathbf{P} = \begin{array}{c|ccc}
  & 0 & 1 & 2 \\ \hline
0 & 0.7 & 0.2 & 0.1 \\
1 & 0.3 & 0.5 & 0.2 \\
2 & 0 & 0 & 1
\end{array}
$$
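A minimal Python sketch for checking the arithmetic, assuming the transition matrix given in the problem (rows and columns indexed by states 0, 1, 2). It computes row 0 of $\mathbf{P}^3$ by plain matrix multiplication, applies the hint ($\{T > 3\} = \{X_3 \ne 2\}$), and cross-checks the result with a Monte Carlo simulation. The helper names `mat_power`, `conditional_prob`, and `simulate`, the trial count, and the seed are hypothetical choices made for this illustration.

```python
import random

# Transition matrix from the problem; state 2 is absorbing.
P = [
    [0.7, 0.2, 0.1],
    [0.3, 0.5, 0.2],
    [0.0, 0.0, 1.0],
]


def mat_mult(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_power(M, k):
    """M raised to the power k (k >= 1) by repeated multiplication."""
    result = M
    for _ in range(k - 1):
        result = mat_mult(result, M)
    return result


def conditional_prob(matrix, start=0, target=0, absorbing=2, steps=3):
    """Pr{X_steps = target | X_0 = start, T > steps}.

    Uses the hint: {T > steps} = {X_steps != absorbing}, because the
    chain never leaves the absorbing state once it arrives there.
    """
    row = mat_power(matrix, steps)[start]
    not_absorbed = sum(p for j, p in enumerate(row) if j != absorbing)
    return row[target] / not_absorbed


def simulate(matrix, trials=100_000, start=0, target=0, absorbing=2,
             steps=3, seed=1):
    """Monte Carlo estimate of the same conditional probability."""
    rng = random.Random(seed)
    states = range(len(matrix))
    hits = survivors = 0
    for _ in range(trials):
        state = start
        for _ in range(steps):
            # Draw the next state from the current row of the matrix.
            state = rng.choices(states, weights=matrix[state])[0]
        if state != absorbing:  # the event {T > steps}
            survivors += 1
            if state == target:
                hits += 1
    return hits / survivors


print("row 0 of P^3:", [round(p, 3) for p in mat_power(P, 3)[0]])
print(f"exact:     {conditional_prob(P):.4f}")
print(f"simulated: {simulate(P):.4f}")
```

With these entries, row 0 of $\mathbf{P}^3$ is $(0.457, 0.230, 0.313)$, so the exact value is $0.457 / 0.687 = 457/687 \approx 0.6652$; the simulated estimate should land close to it.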
