Question:

    #include <stdio.h>
    #include <stdlib.h>
    #include <math.h>
    #include <time.h>

    int main()
    {
        int i;
        int a[10000];
        float sum=0;
        float avg;
        float var=0;
        float sd;
        srand(time(NULL));
        for (i=0;i<10000;i++)
            a[i] = rand()%6+1;
        for (i=0;i<10000;i++)
            sum += a[i];
        avg = sum / i;
        var = pow(sum-avg,2)/i-1;
        sd = sqrt(var);
        printf("The Average of 10000 randomly generated numbers is %.4f ",avg);
        printf("The Standard Deviation is %.4f ", sd);
        return 0;
    }

The standard deviation in the output is wrong; the variance and SD formulas in the code are probably incorrect. Please help me with the correct code and its output. The SD should be less than the average, but it is much higher in my output.
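For reference, these are the usual definitions of the sample mean and sample standard deviation, where $x_1, \dots, x_n$ are the individual rolls (notation introduced here, not in the original post):

$$
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}
$$

The posted code squares the single quantity `sum - avg` instead of accumulating the squared deviation of each `a[i]` from `avg`, which would explain a very large result. For an ideal fair six-sided die, the theoretical values are:

$$
\mu = \frac{1+2+3+4+5+6}{6} = 3.5,
\qquad
\sigma^2 = \frac{35}{12} \approx 2.9167,
\qquad
\sigma \approx 1.7078
$$

So a correct program should report an average near 3.5 and a standard deviation near 1.71.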

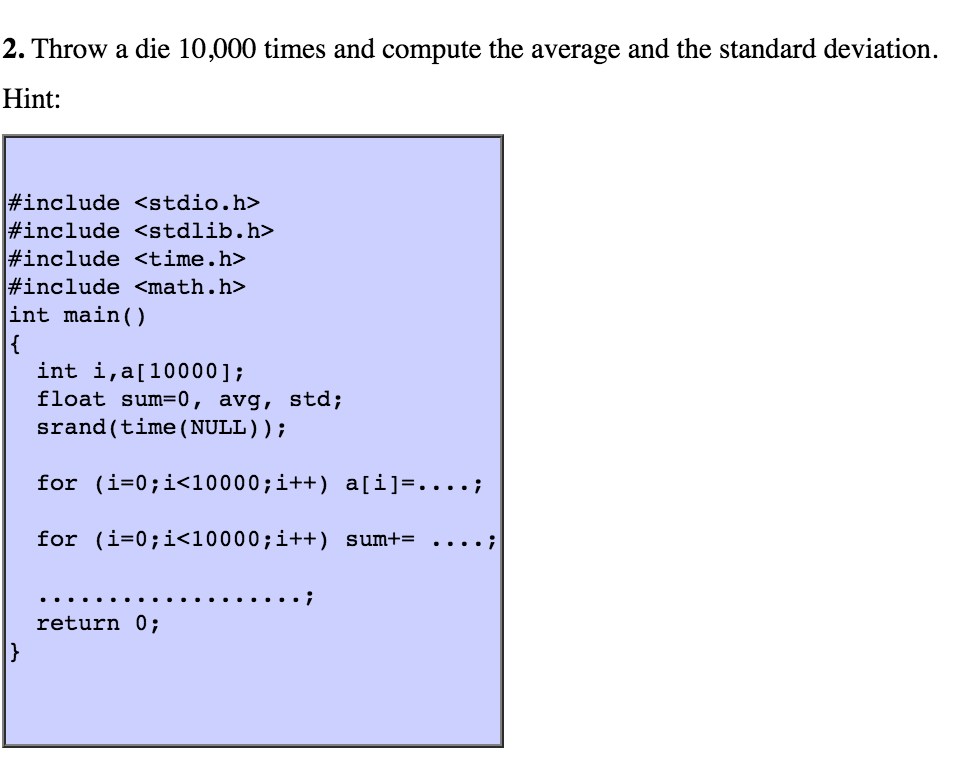

2. Throw a die 10,000 times and compute the average and the standard deviation. Hint: #include

Step by Step Solution
