Question:

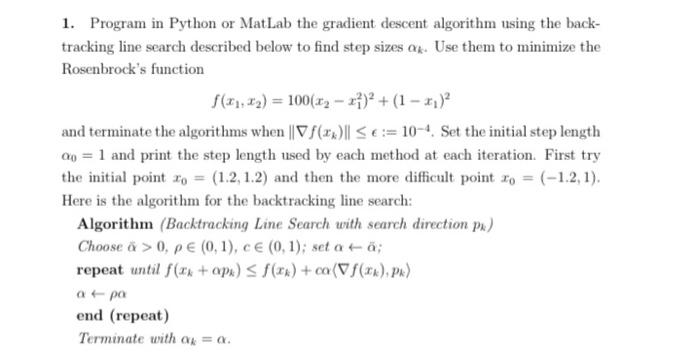

1. Program in Python or MATLAB the gradient descent algorithm, using the backtracking line search described below to find the step sizes $\alpha_k$. Use them to minimize the Rosenbrock function $f(x_1, x_2) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$, and terminate the algorithm when $\|\nabla f(x_k)\| \le \epsilon = 10^{-4}$. Set the initial step length $\bar{\alpha} = 1$ and print the step length used at each iteration. First try the initial point $x_0 = (1.2, 1.2)$ and then the more difficult point $x_0 = (-1.2, 1)$.

Here is the algorithm for the backtracking line search:

Algorithm (Backtracking Line Search with search direction $p_k$)
Choose $\bar{\alpha} > 0$, $\rho \in (0, 1)$, $c \in (0, 1)$; set $\alpha \leftarrow \bar{\alpha}$;
repeat until $f(x_k + \alpha p_k) \le f(x_k) + c\,\alpha\,\nabla f(x_k)^T p_k$
  $\alpha \leftarrow \rho\,\alpha$;
end (repeat)
Terminate with $\alpha_k = \alpha$.
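A minimal Python sketch of the task, assuming the steepest-descent direction $p_k = -\nabla f(x_k)$ and the values $\rho = 0.5$ and $c = 10^{-4}$ (the question only says to choose them, so these are assumptions). The function names `rosenbrock`, `rosenbrock_grad`, `backtracking`, and `gradient_descent`, and the `max_iter` safeguard, are hypothetical additions, not part of the question. The gradient used is $\nabla f(x) = \big(-400x_1(x_2 - x_1^2) - 2(1 - x_1),\; 200(x_2 - x_1^2)\big)$.

```python
import math


def rosenbrock(x):
    """Rosenbrock function f(x1, x2) = 100 (x2 - x1^2)^2 + (1 - x1)^2."""
    x1, x2 = x
    return 100.0 * (x2 - x1 ** 2) ** 2 + (1.0 - x1) ** 2


def rosenbrock_grad(x):
    """Analytic gradient of the Rosenbrock function."""
    x1, x2 = x
    return (-400.0 * x1 * (x2 - x1 ** 2) - 2.0 * (1.0 - x1),
            200.0 * (x2 - x1 ** 2))


def backtracking(f, x, fx, g, p, alpha_bar=1.0, rho=0.5, c=1e-4):
    """Backtracking line search: start at alpha_bar and multiply by rho until
    f(x + alpha p) <= f(x) + c * alpha * grad f(x)^T p (sufficient decrease).
    rho = 0.5 and c = 1e-4 are assumed defaults, not given in the question."""
    slope = g[0] * p[0] + g[1] * p[1]  # directional derivative grad f(x)^T p
    alpha = alpha_bar
    while f((x[0] + alpha * p[0], x[1] + alpha * p[1])) > fx + c * alpha * slope:
        alpha *= rho
    return alpha


def gradient_descent(f, grad, x0, tol=1e-4, max_iter=100_000, verbose=True):
    """Steepest descent p_k = -grad f(x_k) with backtracking step sizes.

    Stops when ||grad f(x_k)|| <= tol; returns (x_k, number of iterations)."""
    x = (float(x0[0]), float(x0[1]))
    for k in range(max_iter):
        g = grad(x)
        if math.hypot(g[0], g[1]) <= tol:  # termination test on the gradient norm
            return x, k
        p = (-g[0], -g[1])  # search direction: negative gradient
        alpha = backtracking(f, x, f(x), g, p, alpha_bar=1.0)
        if verbose:
            print(f"k = {k:6d}   alpha_k = {alpha:.6e}")  # step length per iteration
        x = (x[0] + alpha * p[0], x[1] + alpha * p[1])
    # Hypothetical safeguard (not part of the stated algorithm) against running forever.
    raise RuntimeError(f"no convergence within {max_iter} iterations")
```

For example, `gradient_descent(rosenbrock, rosenbrock_grad, (1.2, 1.2))` prints $\alpha_k$ at every iteration and returns the final iterate, which should lie close to the minimizer $(1, 1)$; repeat with `(-1.2, 1.0)` for the harder start. Steepest descent is known to progress slowly along the curved valley of this function, so the per-iteration printout can be long. Halving the step ($\rho = 0.5$) keeps the number of function evaluations per line search small while still guaranteeing that the sufficient-decrease condition is eventually met for a descent direction.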
