Question:

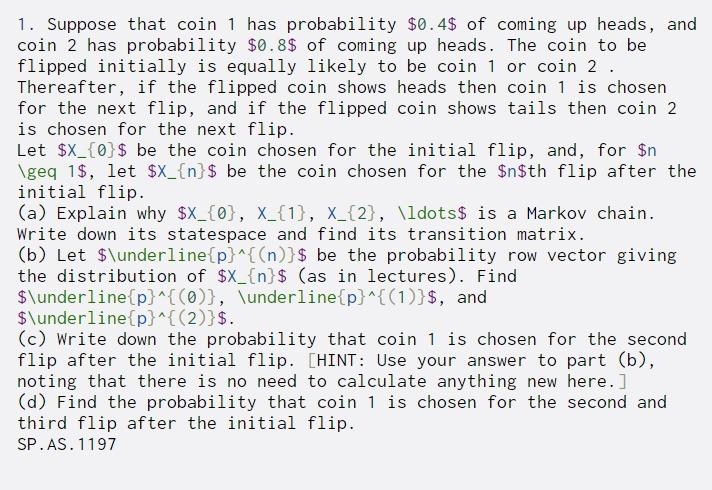

1. Suppose that coin 1 has probability $0.4$ of coming up heads, and coin 2 has probability $0.8$ of coming up heads. The coin to be flipped initially is equally likely to be coin 1 or coin 2. Thereafter, if the flipped coin shows heads then coin 1 is chosen for the next flip, and if the flipped coin shows tails then coin 2 is chosen for the next flip. Let $X_{0}$ be the coin chosen for the initial flip, and, for $n \geq 1$, let $X_{n}$ be the coin chosen for the $n$th flip after the initial flip.

   (a) Explain why $X_{0}, X_{1}, X_{2}, \ldots$ is a Markov chain. Write down its state space and find its transition matrix.

   (b) Let $\underline{p}^{(n)}$ be the probability row vector giving the distribution of $X_{n}$ (as in lectures). Find $\underline{p}^{(0)}$, $\underline{p}^{(1)}$, and $\underline{p}^{(2)}$.

   (c) Write down the probability that coin 1 is chosen for the second flip after the initial flip. [HINT: Use your answer to part (b), noting that there is no need to calculate anything new here.]

   (d) Find the probability that coin 1 is chosen for both the second and the third flips after the initial flip.
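The arithmetic behind this question can be checked numerically. The sketch below is not the posted expert solution; it is a minimal check that assumes the states are ordered (coin 1, coin 2) and reads the transition probabilities straight from the problem statement (heads sends the chain to coin 1, tails to coin 2). The helper names `step` and `distribution` are illustrative only.

```python
from fractions import Fraction

# Transition matrix over states (coin 1, coin 2), as read from the problem:
# heads -> coin 1 is flipped next, tails -> coin 2 is flipped next.
P = [
    [Fraction(2, 5), Fraction(3, 5)],  # from coin 1: heads 0.4, tails 0.6
    [Fraction(4, 5), Fraction(1, 5)],  # from coin 2: heads 0.8, tails 0.2
]

# Initial distribution: the first coin is equally likely to be coin 1 or coin 2.
p0 = [Fraction(1, 2), Fraction(1, 2)]


def step(p, P):
    """Advance a row vector one step: returns p P."""
    return [sum(p[i] * P[i][j] for i in range(len(p))) for j in range(len(P[0]))]


def distribution(n, p0=p0, P=P):
    """Distribution of X_n, obtained by applying P to p0 n times."""
    p = list(p0)
    for _ in range(n):
        p = step(p, P)
    return p


p1 = distribution(1)
p2 = distribution(2)

# Part (c): probability that X_2 is coin 1, i.e. the first entry of p2.
prob_c = p2[0]

# Part (d): P(X_2 = 1, X_3 = 1) = P(X_2 = 1) * P(X_3 = 1 | X_2 = 1),
# using the Markov property for the second factor.
prob_d = p2[0] * P[0][0]

for name, value in [("p1", p1), ("p2", p2)]:
    print(name, [float(x) for x in value])
print("part (c):", float(prob_c))
print("part (d):", float(prob_d))
```

Under these assumptions the one-step and two-step distributions come out as $(0.6, 0.4)$ and $(0.56, 0.44)$, so part (c) is $0.56$ and part (d) is $0.56 \times 0.4 = 0.224$. Exact fractions are used rather than floats so that the values can be compared without rounding error.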

