Question:

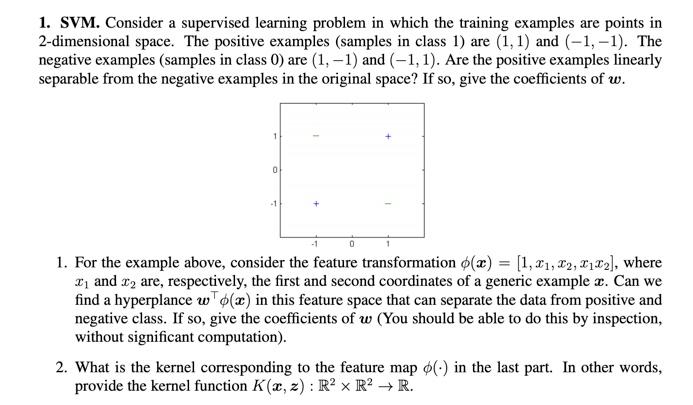

1. SVM. Consider a supervised learning problem in which the training examples are points in 2-dimensional space. The positive examples (samples in class 1) are $(1, 1)$ and $(-1, -1)$. The negative examples (samples in class 0) are $(1, -1)$ and $(-1, 1)$.

(1) Are the positive examples linearly separable from the negative examples in the original space? If so, give the coefficients of $w$.

(2) For the example above, consider the feature transformation $\phi(x) = [1, x_1, x_2, x_1 x_2]$, where $x_1$ and $x_2$ are, respectively, the first and second coordinates of a generic example $x$. Can we find a hyperplane $w^T \phi(x)$ in this feature space that separates the positive class from the negative class? If so, give the coefficients of $w$. (You should be able to do this by inspection, without significant computation.)

(3) What is the kernel corresponding to the feature map $\phi(\cdot)$ in the last part? In other words, provide the kernel function $K(x, z): \mathbb{R}^2 \times \mathbb{R}^2 \to \mathbb{R}$.
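The feature-map and kernel sub-questions above can be checked numerically. The sketch below is a minimal plain-Python illustration, assuming the garbled feature map reads $\phi(x) = [1, x_1, x_2, x_1 x_2]$; the helper names `phi`, `dot`, and `kernel` are hypothetical. It tries $w = [0, 0, 0, 1]$, one choice found by inspection, so that $w^T \phi(x) = x_1 x_2$, and compares the closed form $K(x, z) = (1 + x_1 z_1)(1 + x_2 z_2)$ against the explicit inner product $\phi(x)^T \phi(z) = 1 + x_1 z_1 + x_2 z_2 + x_1 x_2 z_1 z_2$.

```python
# Training points from the question: positives are class 1, negatives are class 0.
positives = [(1, 1), (-1, -1)]
negatives = [(1, -1), (-1, 1)]


def phi(x):
    """Assumed feature map phi(x) = [1, x1, x2, x1*x2]."""
    x1, x2 = x
    return [1, x1, x2, x1 * x2]


def dot(u, v):
    """Plain inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


# Weights chosen by inspection: keep only the x1*x2 feature,
# so w^T phi(x) reduces to x1 * x2.
w = [0, 0, 0, 1]


def kernel(x, z):
    """Closed form of phi(x)^T phi(z) = 1 + x1 z1 + x2 z2 + x1 x2 z1 z2."""
    return (1 + x[0] * z[0]) * (1 + x[1] * z[1])


print("positive scores:", [dot(w, phi(x)) for x in positives])  # prints positive scores: [1, 1]
print("negative scores:", [dot(w, phi(x)) for x in negatives])  # prints negative scores: [-1, -1]

# Confirm the factored kernel matches the explicit inner product on every pair.
points = positives + negatives
assert all(kernel(x, z) == dot(phi(x), phi(z)) for x in points for z in points)
```

Any positive multiple of this $w$ separates the data equally well, because $x_1 x_2$ is $+1$ on both positives and $-1$ on both negatives. The factored form of $K$ lets the kernel be evaluated without building $\phi(x)$ explicitly.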

Step by Step Solution

Answer 1: No single line that is drawn can separate ...
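One way to see why no line works in the original space, offered as a sketch: suppose some $w = (w_0, w_1, w_2)$ gave $w_0 + w_1 x_1 + w_2 x_2 > 0$ on both positive examples and $< 0$ on both negative examples. Adding the two constraints within each class cancels $w_1$ and $w_2$:

```latex
\begin{aligned}
(1,1),\ (-1,-1):&\quad (w_0 + w_1 + w_2) + (w_0 - w_1 - w_2) = 2w_0 > 0,\\
(1,-1),\ (-1,1):&\quad (w_0 + w_1 - w_2) + (w_0 - w_1 + w_2) = 2w_0 < 0.
\end{aligned}
```

Both inequalities cannot hold at once, so the classes (the classic XOR arrangement) are not linearly separable in $\mathbb{R}^2$.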