Question:

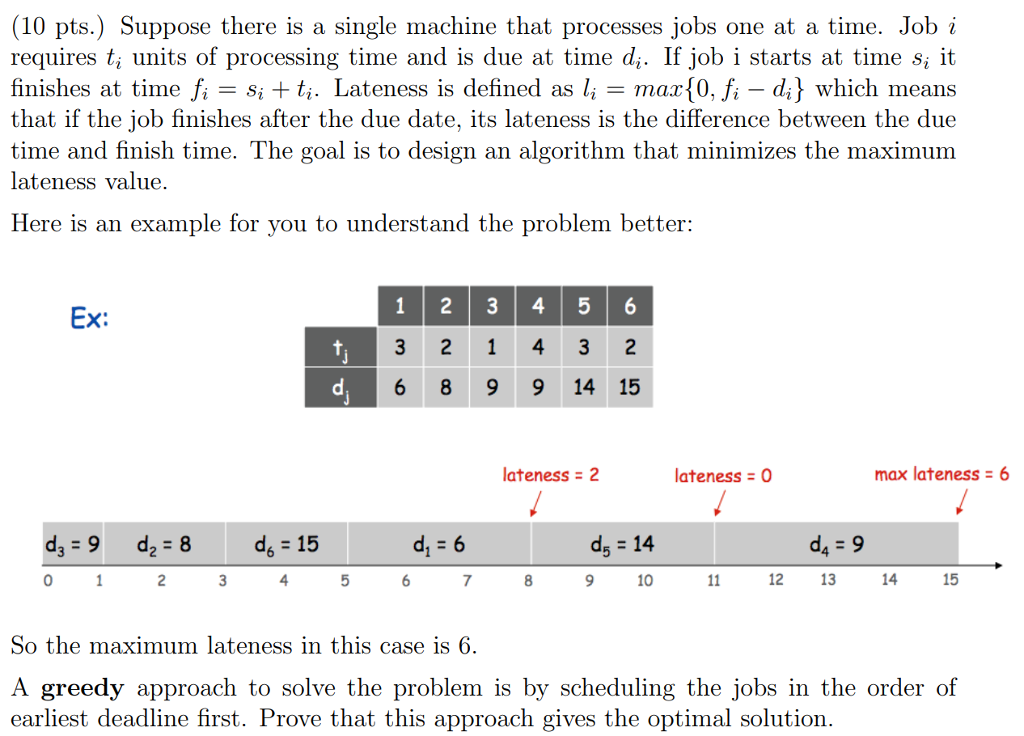

(10 pts.) Suppose there is a single machine that processes jobs one at a time. Job $i$ requires $t_i$ units of processing time and is due at time $d_i$. If job $i$ starts at time $s_i$, it finishes at time $f_i = s_i + t_i$. The lateness of job $i$ is defined as $\ell_i = \max(0, f_i - d_i)$: if the job finishes after its due time, its lateness is the finish time minus the due time; otherwise its lateness is 0. The goal is to design an algorithm that minimizes the maximum lateness $L = \max_i \ell_i$.

Here is an example to help you understand the problem better:

| Job $i$ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Processing time $t_i$ | 3 | 2 | 1 | 4 | 3 | 2 |
| Due time $d_i$ | 6 | 8 | 9 | 9 | 14 | 15 |

In the sample schedule shown in the figure (a timeline up to time 15), one job has lateness 2, another has lateness 0, and the maximum lateness is 6.

A greedy approach to the problem is to schedule the jobs in order of earliest deadline first. Prove that this approach gives an optimal solution.
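A program cannot replace the requested proof, but a small experiment can make the claim concrete. Below is a minimal Python sketch, assuming integer times and a machine that starts at time 0 and never idles. `Job`, `max_lateness`, `edf_schedule`, `brute_force_optimum`, and `random_check` are hypothetical helper names chosen for illustration. The sketch schedules jobs by earliest deadline first, computes the maximum lateness, and compares it with the best value found by trying every order on small inputs.

```python
from __future__ import annotations

import random
from itertools import permutations
from typing import NamedTuple


class Job(NamedTuple):
    name: int  # job index i
    t: int     # processing time t_i
    d: int     # due time d_i


def max_lateness(order: list[Job]) -> int:
    """Run the jobs back to back from time 0; return max_i max(0, f_i - d_i)."""
    finish = 0
    worst = 0  # starting at 0 applies the max(0, ...) clamp
    for job in order:
        finish += job.t  # f_i = s_i + t_i, where s_i is the previous finish time
        worst = max(worst, finish - job.d)
    return worst


def edf_schedule(jobs: list[Job]) -> list[Job]:
    """Earliest deadline first. sorted() is stable, so ties keep input order."""
    return sorted(jobs, key=lambda job: job.d)


def brute_force_optimum(jobs: list[Job]) -> int:
    """Smallest max lateness over all n! orders; only practical for small n."""
    return min(max_lateness(list(p)) for p in permutations(jobs))


def random_check(trials: int = 100, n: int = 6, seed: int = 0) -> None:
    """Assert EDF matches brute force on random small instances (evidence, not proof)."""
    rng = random.Random(seed)
    for _ in range(trials):
        jobs = [Job(i + 1, rng.randint(1, 5), rng.randint(1, 20)) for i in range(n)]
        assert max_lateness(edf_schedule(jobs)) == brute_force_optimum(jobs)


# (t_i, d_i) values taken from the example in the question.
EXAMPLE = [Job(1, 3, 6), Job(2, 2, 8), Job(3, 1, 9),
           Job(4, 4, 9), Job(5, 3, 14), Job(6, 2, 15)]

if __name__ == "__main__":
    edf = edf_schedule(EXAMPLE)
    print("EDF order:", [job.name for job in edf])
    print("EDF max lateness:", max_lateness(edf))
    print("Brute-force optimum:", brute_force_optimum(EXAMPLE))
    random_check()
    print("EDF matched brute force on all random trials")
```

With the example data, EDF runs the jobs in order 1, 2, 3, 4, 5, 6, finishing them at times 3, 5, 6, 10, 13, 15. Only job 4 is late, by $10 - 9 = 1$, so the maximum lateness is 1, compared with 6 in the sample schedule. Brute force agrees that 1 is optimal, and a direct count confirms it: jobs 1 to 4 all have $d_i \le 9$ but need $3 + 2 + 1 + 4 = 10$ units of processing, so one of them must finish at time 10 or later. Agreement on random instances is evidence, not a proof; the proof the question asks for is usually an exchange argument showing that swapping two adjacent jobs that are out of deadline order never increases the maximum lateness.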
