Question: 2. (a) A Hidden Markov Model (HMM) M has four emitting states: S1, S2, S3 and S4, each of which can emit the symbol a,

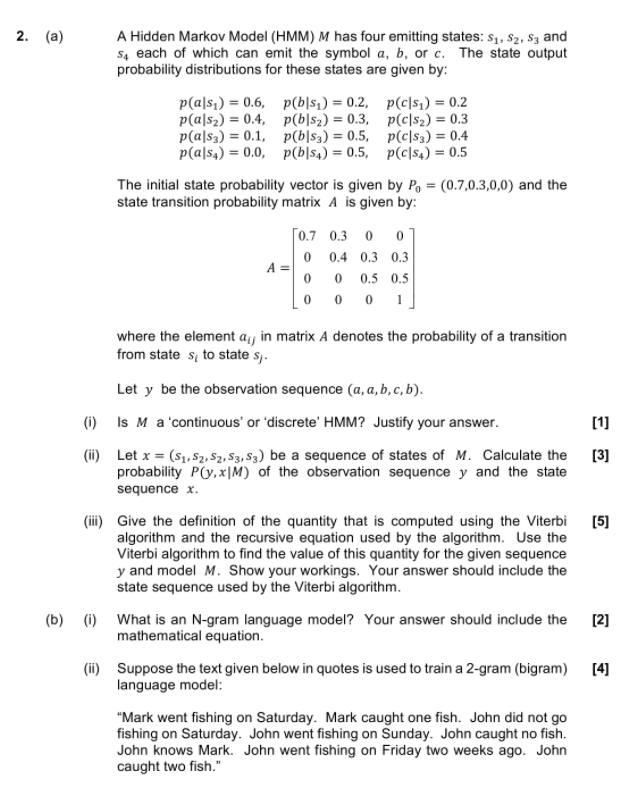

2. (a) A Hidden Markov Model (HMM) $M$ has four emitting states, $s_1$, $s_2$, $s_3$ and $s_4$, each of which can emit the symbol $a$, $b$, or $c$. The state output probability distributions for these states are given by:

$$
\begin{aligned}
p(a|s_1) &= 0.6, & p(b|s_1) &= 0.2, & p(c|s_1) &= 0.2 \\
p(a|s_2) &= 0.4, & p(b|s_2) &= 0.3, & p(c|s_2) &= 0.3 \\
p(a|s_3) &= 0.1, & p(b|s_3) &= 0.5, & p(c|s_3) &= 0.4 \\
p(a|s_4) &= 0.0, & p(b|s_4) &= 0.5, & p(c|s_4) &= 0.5
\end{aligned}
$$

The initial state probability vector is given by $P_0 = (0.7, 0.3, 0, 0)$ and the state transition probability matrix $A$ is given by:

$$
A = \begin{bmatrix}
0.7 & 0.3 & 0 & 0 \\
0 & 0.4 & 0.3 & 0.3 \\
0 & 0 & 0.5 & 0.5 \\
0 & 0 & 0 & 1
\end{bmatrix}
$$

where the element $a_{ij}$ in matrix $A$ denotes the probability of a transition from state $s_i$ to state $s_j$. Let $y$ be the observation sequence $(a, a, b, c, b)$.

(i) Is $M$ a 'continuous' or 'discrete' HMM? Justify your answer. [1]

(ii) Let $x = (s_1, s_2, s_2, s_3, s_3)$ be a sequence of states of $M$. Calculate the probability $P(y, x|M)$ of the observation sequence $y$ and the state sequence $x$. [3]

(iii) Give the definition of the quantity that is computed using the Viterbi algorithm and the recursive equation used by the algorithm. Use the Viterbi algorithm to find the value of this quantity for the given sequence $y$ and model $M$. Show your workings. Your answer should include the state sequence used by the Viterbi algorithm. [5]

(b) (i) What is an N-gram language model? Your answer should include the mathematical equation. [2]

(ii) Suppose the text given below in quotes is used to train a 2-gram (bigram) language model: [4]

"Mark went fishing on Saturday. Mark caught one fish. John did not go fishing on Saturday. John went fishing on Sunday. John caught no fish. John knows Mark. John went fishing on Friday two weeks ago. John caught two fish
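Parts (a)(ii) and (a)(iii) can be checked numerically. The following is a minimal Python sketch, not an official solution. It assumes the usual factorisation $P(y, x|M) = P_0(x_1)\,p(y_1|x_1)\prod_{t \ge 2} a_{x_{t-1} x_t}\,p(y_t|x_t)$ and the standard Viterbi recursion $\delta_t(j) = \max_i \delta_{t-1}(i)\,a_{ij}\,p(y_t|s_j)$ with $\delta_1(j) = P_0(j)\,p(y_1|s_j)$. The names `PI`, `A`, `B`, `Y`, `joint_probability` and `viterbi` are illustrative and do not come from the question. The sketch also assumes the best path may end in any state, since the question does not name a final state.

```python
# Model parameters transcribed from the question.
# States s1..s4 are represented by the indices 0..3.
PI = [0.7, 0.3, 0.0, 0.0]
A = [
    [0.7, 0.3, 0.0, 0.0],
    [0.0, 0.4, 0.3, 0.3],
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]
B = [
    {"a": 0.6, "b": 0.2, "c": 0.2},
    {"a": 0.4, "b": 0.3, "c": 0.3},
    {"a": 0.1, "b": 0.5, "c": 0.4},
    {"a": 0.0, "b": 0.5, "c": 0.5},
]
Y = ["a", "a", "b", "c", "b"]


def joint_probability(y, x):
    """Return P(y, x | M) for a 0-based state index sequence x."""
    p = PI[x[0]] * B[x[0]][y[0]]
    for t in range(1, len(y)):
        p *= A[x[t - 1]][x[t]] * B[x[t]][y[t]]
    return p


def viterbi(y):
    """Return (probability of the best path, best path as 0-based indices)."""
    n = len(PI)
    # Initialisation: delta_1(j) = PI[j] * p(y_1 | s_j)
    delta = [PI[j] * B[j][y[0]] for j in range(n)]
    backptrs = []
    for t in range(1, len(y)):
        prev = delta
        # For each state j, remember the best predecessor i.
        ptr = [max(range(n), key=lambda i: prev[i] * A[i][j]) for j in range(n)]
        delta = [prev[ptr[j]] * A[ptr[j]][j] * B[j][y[t]] for j in range(n)]
        backptrs.append(ptr)
    # Termination: pick the best final state, then trace back.
    last = max(range(n), key=lambda j: delta[j])
    path = [last]
    for ptr in reversed(backptrs):
        path.append(ptr[path[-1]])
    path.reverse()
    return delta[last], path


p_joint = joint_probability(Y, [0, 1, 1, 2, 2])  # x = (s1, s2, s2, s3, s3)
p_best, best_path = viterbi(Y)
print(f"P(y, x | M) = {p_joint:.6g}")
print(f"Viterbi probability = {p_best:.6g}")
print("Viterbi path =", [f"s{i + 1}" for i in best_path])
```

Under these assumptions the sketch gives $P(y, x|M) \approx 1.8144 \times 10^{-4}$ and a Viterbi probability of about $1.89 \times 10^{-3}$ along the path $(s_1, s_2, s_4, s_4, s_4)$. A hand calculation should reproduce the same $\delta_t(j)$ values column by column.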
