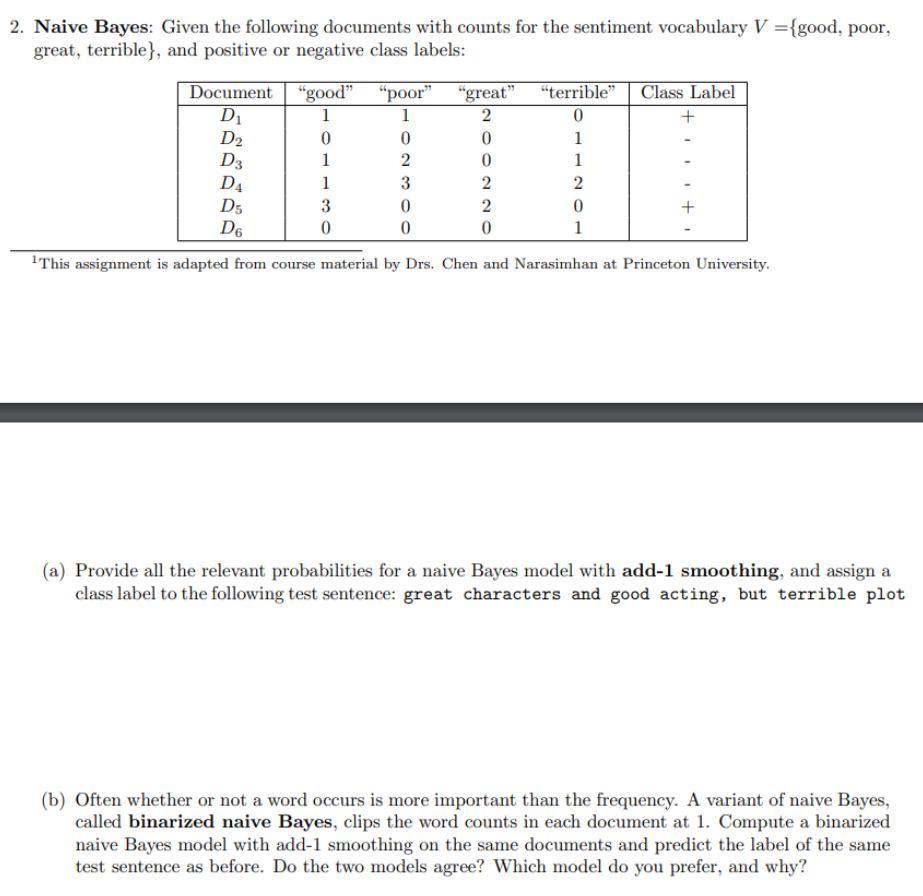

Question: 2. Naive Bayes: Given the following documents with counts for the sentiment vocabulary V = {good, poor, great, terrible}, and positive (+) or negative (-) class labels:

[Table: counts of "good", "poor", "great", and "terrible", and the class label, for documents D1 to D6]

This assignment is adapted from course material by Drs. Chen and Narasimhan at Princeton University.

(a) Provide all the relevant probabilities for a naive Bayes model with add-1 smoothing, and assign a class label to the following test sentence: "great characters and good acting, but terrible plot"

(b) Often whether or not a word occurs is more important than its frequency. A variant of naive Bayes, called binarized naive Bayes, clips the word counts in each document at 1. Compute a binarized naive Bayes model with add-1 smoothing on the same documents and predict the label of the same test sentence as before. Do the two models agree? Which model do you prefer, and why?
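Both parts use the same estimator. The prior is P(c) = N_c / N (the fraction of documents with label c), the add-1 smoothed likelihood is P(w | c) = (count(w, c) + 1) / (sum over w' in V of count(w', c) + |V|), and the predicted class is argmax_c [log P(c) + sum_i log P(w_i | c)] over the test words w_i. Binarized naive Bayes differs only in clipping each document's counts at 1 before summing. The following is a minimal Python sketch under stated assumptions: each document is given as a dict of counts over V, and test words outside V ("characters", "acting", "plot", ...) are ignored. The function names `tokenize`, `train_nb`, and `classify` are hypothetical, and the demo uses made-up toy counts, not the assignment's table; substitute the D1 to D6 rows to answer the question.

```python
import math
import re

# The sentiment vocabulary V given in the question.
VOCAB = ["good", "poor", "great", "terrible"]


def tokenize(sentence):
    """Lowercase, split on non-letters, and keep only words in VOCAB.

    Assumption: out-of-vocabulary test words are simply ignored.
    """
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w in VOCAB]


def train_nb(docs, labels, binarize=False, alpha=1):
    """Fit naive Bayes with add-alpha smoothing (alpha=1 gives add-1).

    docs:   list of dicts mapping word -> count (one dict per document)
    labels: list of class labels, e.g. "+" or "-"
    binarize=True clips every per-document count at 1 (binarized NB).
    """
    classes = sorted(set(labels))
    # Prior P(c): fraction of training documents labeled c.
    priors = {c: labels.count(c) / len(labels) for c in classes}
    likelihoods = {}
    for c in classes:
        totals = {w: 0 for w in VOCAB}
        for doc, y in zip(docs, labels):
            if y != c:
                continue
            for w in VOCAB:
                n = doc.get(w, 0)
                totals[w] += min(n, 1) if binarize else n
        # (count(w, c) + alpha) / (total count in class c + alpha * |V|)
        denom = sum(totals.values()) + alpha * len(VOCAB)
        likelihoods[c] = {w: (totals[w] + alpha) / denom for w in VOCAB}
    return priors, likelihoods


def classify(tokens, priors, likelihoods, binarize=False):
    """Return the highest-scoring class and the per-class log scores."""
    if binarize:
        # Assumption: the test document is binarized too (each word once).
        tokens = list(dict.fromkeys(tokens))
    scores = {
        c: math.log(priors[c]) + sum(math.log(likelihoods[c][w]) for w in tokens)
        for c in priors
    }
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    # HYPOTHETICAL toy counts for illustration only; replace with rows D1 to D6.
    docs = [{"good": 2, "great": 1}, {"poor": 1, "terrible": 1}]
    labels = ["+", "-"]
    test = tokenize("great characters and good acting, but terrible plot")
    for binarize in (False, True):
        priors, lik = train_nb(docs, labels, binarize=binarize)
        label, scores = classify(test, priors, lik, binarize=binarize)
        print("binarized" if binarize else "multinomial", label, lik, scores)
```

Printing `lik` lists every smoothed P(w | c), which covers the "all the relevant probabilities" requirement of part (a). Running the loop with `binarize=False` and then `binarize=True` gives the two predictions that part (b) asks you to compare. Working in log space avoids underflow on longer sentences and does not change the argmax.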
