Question: 2 Reducing Variance in Policy Gradient Methods In class, we explored REINFORCE as a policy gradient method with no bias but high variance. In

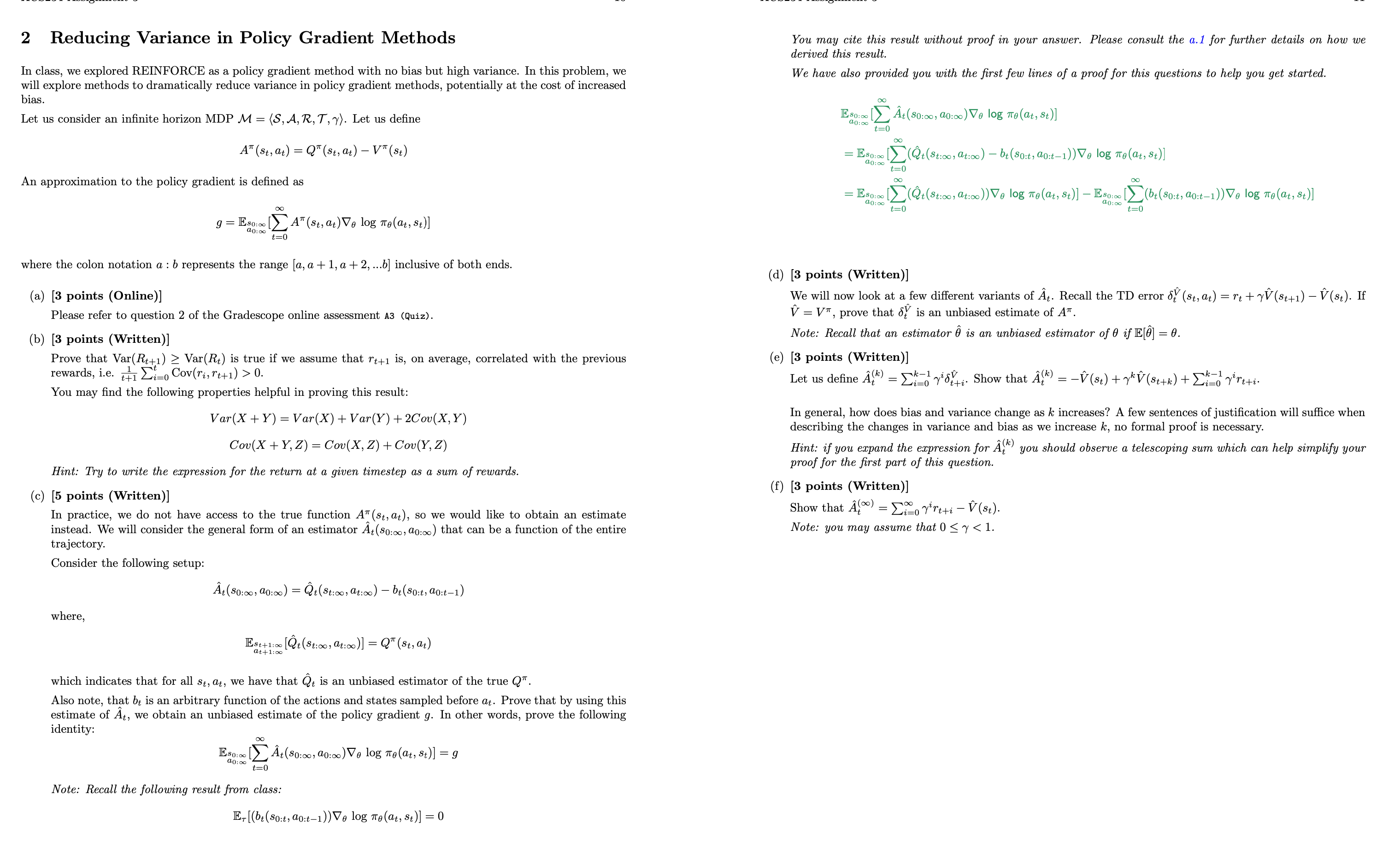

2 Reducing Variance in Policy Gradient Methods In class, we explored REINFORCE as a policy gradient method with no bias but high variance. In this problem, we will explore methods to dramatically reduce variance in policy gradient methods, potentially at the cost of increased bias. Let us consider an infinite horizon MDP M = (S, A, R, T, y). Let us define AT (St, at) = Q (st, at) V (st) An approximation to the policy gradient is defined as 9 = Eso: a0:00 where the colon notation a : b represents the range [a, a +1, a + 2, ...b] inclusive of both ends. (a) [3 points (Online)] Please refer to question 2 of the Gradescope online assessment A3 (Quiz). where, (b) [3 points (Written)] Prove that Var (R++1) Var(Rt) is true if we assume that rt+1 is, on average, correlated with the previous rewards, i.e. i0 Cov(ri, rt+1) > 0. You may find the following properties helpful in proving this result: [A (st, at)Ve log (at, st)] t=0 Var (X +Y) = Var(X) + Var (Y) + 2Cov(X, Y) Cov(X + Y,Z) = Cov(X, Z) + Cov(Y, Z) Hint: Try to write the expression for the return at a given timestep as a sum of rewards. (c) [5 points (Written)] In practice, we do not have access to the true function A (st, at), so we would like to obtain an estimate instead. We will consider the general form of an estimator At($0:, a0:) that can be a function of the entire trajectory. Consider the following setup: t ($0:00, 90:00) Est+1:00 (t (St:, at:)] = Q (St, at) at+1:00 Es0:00 a0:00 which indicates that for all st, at, we have that t is an unbiased estimator of the true Q. Also note, that b, is an arbitrary function of the actions and states sampled before at. Prove that by using this estimate of t, we obtain an unbiased estimate of the policy gradient g. In other words, prove the following identity: t=0 = Qt (St:, at:) - bt (so:t, 0:t-1) t($0:, 0:) V log (at, st)] = g 0 Note: Recall the following result from class: Er [(bt (so:t, ao:t-1))V log (t, St)] = 0 You may cite this result without proof in your answer. Please consult the a.1 for further details on how we derived this result. We have also provided you with the first few lines of a proof for this questions to help you get started. Es0:00 a0:00 [ t($0:, 0:) V log e(at, St)] t=0 = E$0:00 a0:00 = Es0:00 a0:00 [(Qt (St:, at:0) bt (S0:t, 0:t1 t=0 [(t (St:, at:))V log (at, St)] - Eso: [(bt (80:t, a0:t-1)) V log (a, st)] a0:00 t=0 t=0 1)) V log (t, st)] 0 (d) [3 points (Written)] We will now look at a few different variants of . Recall the TD error S (st, at) = rt + Y (St+1) (st). If V = V", prove that d is an unbiased estimate of A. Note: Recall that an estimator is an unbiased estimator of 0 if E[] = 0. (e) [3 points (Written)] Let us define (k) = kly. Show that (k) = (st) + y^(St+k) + k=1' yrtti. i=0 t+i. i=0 In general, how does bias and variance change as k increases? A few sentences of justification will suffice when describing the changes in variance and bias as we increase k, no formal proof is necessary. Hint: if you expand the expression for (k) you should observe a telescoping sum which can help simplify your proof for the first part of this question. (f) [3 points (Written)] Show that A() = o Vrt+i V (st). Note: you may assume that 0 y < 1.

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts