Question:

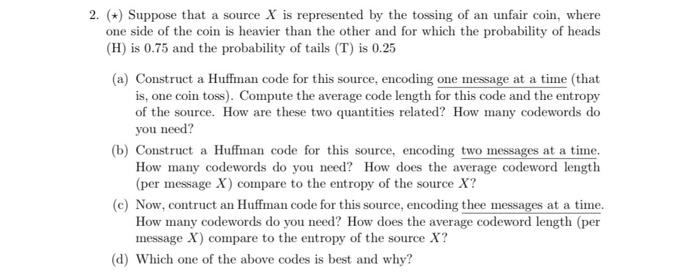

2. (*) Suppose that a source X is represented by the tossing of an unfair coin, where one side of the coin is heavier than the other and for which the probability of heads (H) is 0.75 and the probability of tails (T) is 0.25.

(a) Construct a Huffman code for this source, encoding one message at a time (that is, one coin toss). Compute the average code length for this code and the entropy of the source. How are these two quantities related? How many codewords do you need?

(b) Construct a Huffman code for this source, encoding two messages at a time. How many codewords do you need? How does the average codeword length (per message X) compare to the entropy of the source X?

(c) Now construct a Huffman code for this source, encoding three messages at a time. How many codewords do you need? How does the average codeword length (per message X) compare to the entropy of the source X?

(d) Which one of the above codes is best, and why?
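
The quantities the question asks about can be checked numerically. The following is a minimal Python sketch, not a worked solution from the original page. It assumes the tosses are independent, so the probability of a block is the product of its symbol probabilities. The names `entropy`, `block_probs`, `huffman_code`, and `per_toss_length` are hypothetical helpers introduced here for illustration.

```python
import heapq
import itertools
import math

# Source statistics taken from the question: P(H) = 0.75, P(T) = 0.25.
P = {"H": 0.75, "T": 0.25}


def entropy(probs):
    """Shannon entropy in bits of a distribution given as {symbol: probability}."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def block_probs(n):
    """Probabilities of all blocks of n tosses (assumes independent tosses)."""
    blocks = {}
    for combo in itertools.product(P, repeat=n):
        blocks["".join(combo)] = math.prod(P[s] for s in combo)
    return blocks


def huffman_code(probs):
    """Build a binary Huffman code; returns {symbol: codeword string}."""
    tiebreak = itertools.count()  # unique counter so the dicts are never compared
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least probable subtrees, prefixing their codewords.
        p0, _, c0 = heapq.heappop(heap)
        p1, _, c1 = heapq.heappop(heap)
        merged = {s: "0" + w for s, w in c0.items()}
        merged.update({s: "1" + w for s, w in c1.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]


def per_toss_length(n):
    """Average Huffman codeword length per toss when coding n tosses at a time."""
    probs = block_probs(n)
    code = huffman_code(probs)
    return sum(probs[b] * len(code[b]) for b in probs) / n


for n in (1, 2, 3):
    code = huffman_code(block_probs(n))
    print(n, len(code), per_toss_length(n), code)
print("entropy:", entropy(P))
```

Under these assumptions the sketch gives 2, 4, and 8 codewords for parts (a), (b), and (c), with average lengths of 1, 0.84375, and about 0.8229 bits per toss, against a source entropy of about 0.8113 bits. The individual codewords depend on how ties are broken, but the average length does not. The per-toss length moves toward the entropy as the block size grows, at the cost of a codebook that doubles in size with each extra toss.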
