Question: (20 points) We consider support vector machines (SVMs) for a 2-class classification setting. Let $X = \{(x_1, y_1), \dots, (x_n, y_n)\}$ be a dataset for 2-class classification, where $x_i \in \mathbb{R}^d$ and $y_i \in \{-1, 1\}$.

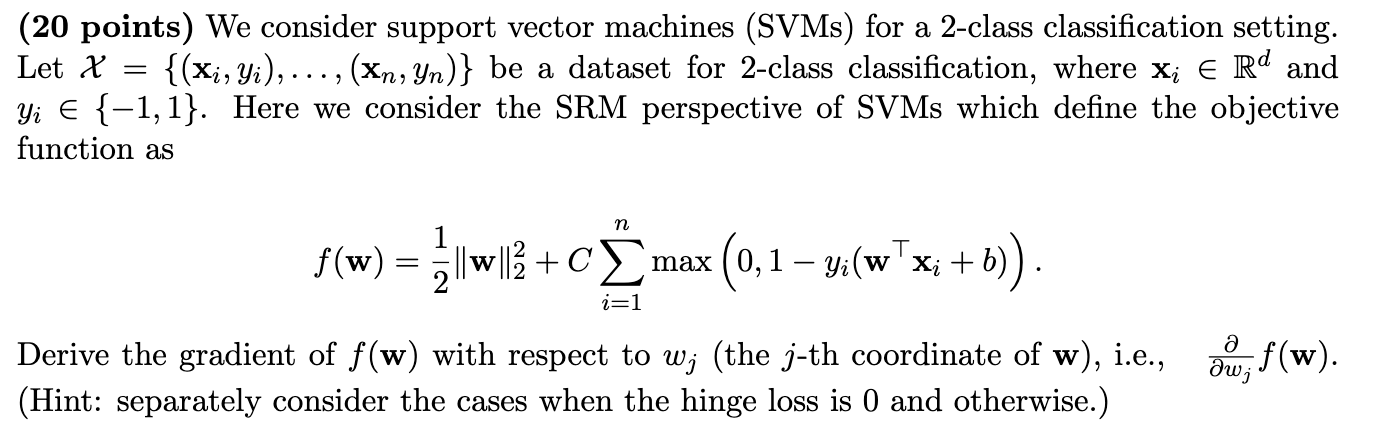

Here we consider the SRM perspective of SVMs, which defines the objective function as $f(w) = \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^{n} \max\bigl(0,\, 1 - y_i(w^\top x_i + b)\bigr)$. Derive the gradient of $f(w)$ with respect to $w_j$ (the $j$-th coordinate of $w$), i.e., $\nabla_{w_j} f(w)$. (Hint: separately consider the cases when the hinge loss is 0 and otherwise.)

Step by Step Solution
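
A sketch of the derivation, following the hint and treating the regularizer and each hinge term separately. The notation $x_{ij}$ for the $j$-th coordinate of $x_i$ is introduced here for convenience and does not appear in the question.

```latex
\begin{align*}
\frac{\partial}{\partial w_j}\,\tfrac{1}{2}\|w\|_2^2 &= w_j, \\
\frac{\partial}{\partial w_j}\max\bigl(0,\, 1 - y_i(w^\top x_i + b)\bigr) &=
\begin{cases}
0, & y_i(w^\top x_i + b) > 1 \quad \text{(hinge loss is 0)}, \\
-\,y_i x_{ij}, & y_i(w^\top x_i + b) < 1 \quad \text{(hinge loss is positive)},
\end{cases} \\
\nabla_{w_j} f(w) &= w_j - C \sum_{i \,:\, y_i(w^\top x_i + b) < 1} y_i x_{ij}.
\end{align*}
```

At a point where $y_i(w^\top x_i + b) = 1$ exactly, the hinge term is not differentiable. Any value between $-y_i x_{ij}$ and $0$ is then a valid subgradient for that term, and taking $0$ (as the sum above does) is a common convention.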

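
The case split suggested by the hint can be checked numerically. The following is a minimal pure-Python sketch, not a reference implementation: the function names, the toy data, and the choice of subgradient 0 at the kink are all assumptions made for illustration.

```python
def margin(w, b, xi, yi):
    """Return y_i * (w . x_i + b) for a single example."""
    return yi * (sum(wj * xij for wj, xij in zip(w, xi)) + b)


def hinge_objective(w, b, X, y, C):
    """f(w) = 0.5 * ||w||_2^2 + C * sum_i max(0, 1 - y_i (w . x_i + b))."""
    reg = 0.5 * sum(wj * wj for wj in w)
    loss = sum(max(0.0, 1.0 - margin(w, b, xi, yi)) for xi, yi in zip(X, y))
    return reg + C * loss


def hinge_gradient(w, b, X, y, C):
    """Gradient of f with respect to w, one entry per coordinate w_j.

    Assumption: at the kink (margin exactly 1) the subgradient 0 is used.
    """
    grad = list(w)  # the regularizer contributes w_j to coordinate j
    for xi, yi in zip(X, y):
        if margin(w, b, xi, yi) < 1.0:  # hinge loss is positive here
            for j, xij in enumerate(xi):
                grad[j] -= C * yi * xij
        # otherwise the hinge loss is 0 and contributes nothing
    return grad


def numerical_gradient(w, b, X, y, C, eps=1e-6):
    """Central finite differences, used only to check the closed form."""
    grad = []
    for j in range(len(w)):
        w_plus = list(w)
        w_minus = list(w)
        w_plus[j] += eps
        w_minus[j] -= eps
        diff = hinge_objective(w_plus, b, X, y, C) - hinge_objective(w_minus, b, X, y, C)
        grad.append(diff / (2.0 * eps))
    return grad


# Hypothetical toy data: two examples satisfy the margin, two violate it.
X_toy = [[2.0, 1.0], [-1.0, -1.5], [0.5, 0.2], [-0.3, 0.4]]
y_toy = [1, -1, 1, -1]
w_toy, b_toy, C_toy = [1.0, 0.5], 0.0, 2.0

analytic = hinge_gradient(w_toy, b_toy, X_toy, y_toy, C_toy)
numeric = numerical_gradient(w_toy, b_toy, X_toy, y_toy, C_toy)
print("analytic:", analytic)
print("numeric: ", numeric)
```

On this toy data only the last two examples have margin below 1, so the analytic gradient works out to approximately `[-0.6, 0.9]`, and the finite-difference estimate should agree to several decimal places. The agreement only holds away from the kink, since finite differences straddle the non-differentiable point when a margin is very close to 1.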