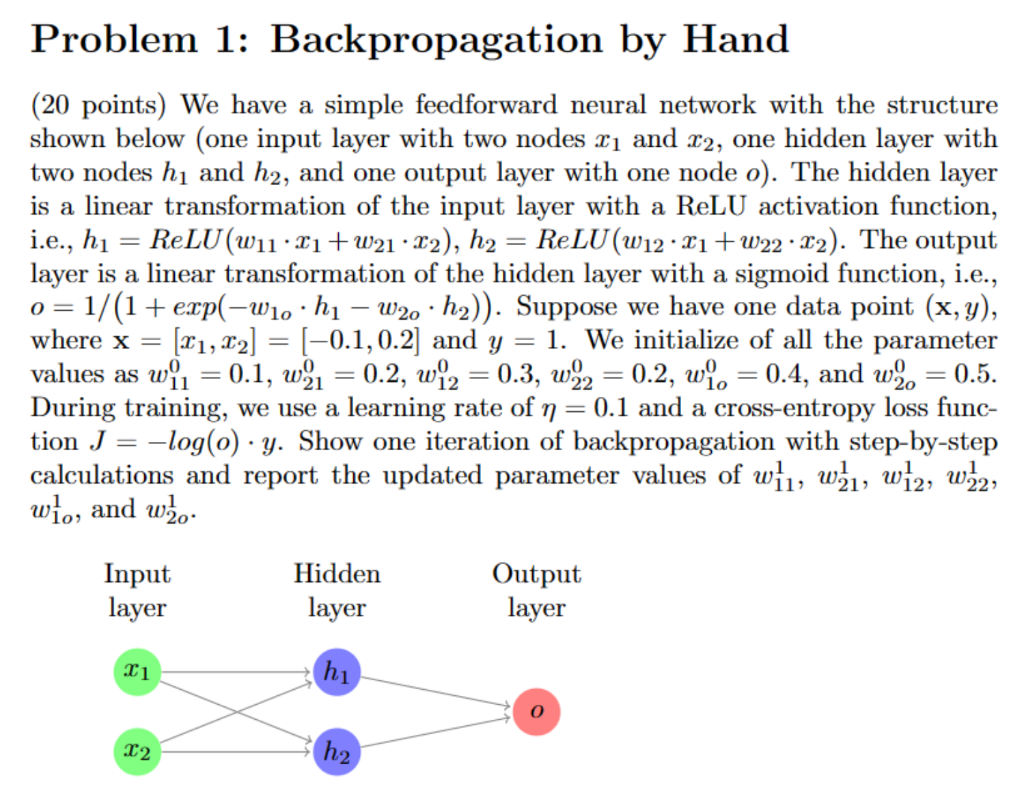

(20 points) We have a simple feedforward neural network with the structure shown in the figure: one input layer with two nodes $x_1$ and $x_2$, one hidden layer with two nodes $h_1$ and $h_2$, and one output layer with one node $o$. The hidden layer is a linear transformation of the input layer followed by a ReLU activation, i.e., $h_1 = \mathrm{ReLU}(w_{11}x_1 + w_{21}x_2)$ and $h_2 = \mathrm{ReLU}(w_{12}x_1 + w_{22}x_2)$. The output layer is a linear transformation of the hidden layer followed by a sigmoid, i.e., $o = 1/\bigl(1 + \exp(-w_{1o}h_1 - w_{2o}h_2)\bigr)$. Suppose we have one data point $(x, y)$, where $x = [x_1, x_2] = [0.1, 0.2]$ and $y = 1$. We initialize all the parameter values as $w_{11}^{(0)} = 0.1$, $w_{21}^{(0)} = 0.2$, $w_{12}^{(0)} = 0.3$, $w_{22}^{(0)} = 0.2$, $w_{1o}^{(0)} = 0.4$, and $w_{2o}^{(0)} = 0.5$. During training, we use a learning rate of $\eta = 0.1$ and the cross-entropy loss $J = -y\log(o)$. Show one iteration of backpropagation with step-by-step calculations and report the updated parameter values $w_{11}^{(1)}$, $w_{21}^{(1)}$, $w_{12}^{(1)}$, $w_{22}^{(1)}$, $w_{1o}^{(1)}$, and $w_{2o}^{(1)}$.
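The calculation the question asks for can be sketched in Python. With $s = w_{1o}h_1 + w_{2o}h_2$ and $J = -y\log(o)$, the chain rule gives $\partial J/\partial s = -y(1 - o)$, $\partial J/\partial w_{jo} = (\partial J/\partial s)\,h_j$, and $\partial J/\partial w_{ij} = (\partial J/\partial s)\,w_{jo}\,\mathrm{ReLU}'(z_j)\,x_i$, where $z_j$ is the pre-activation of $h_j$. This is a minimal sketch, not a reference solution: it assumes plain gradient descent $w \leftarrow w - \eta\,\partial J/\partial w$ applied to all six weights at once, uses the initial values exactly as stated, and takes $\mathrm{ReLU}'(0) = 0$ by convention. The names `backprop_step`, `relu_grad`, and the dictionary keys are illustrative, not part of the question.

```python
import math
from typing import Dict, Tuple


def relu(z: float) -> float:
    return max(0.0, z)


def relu_grad(z: float) -> float:
    # Assumed convention: ReLU'(0) = 0 (any value in [0, 1] is a valid subgradient at 0).
    return 1.0 if z > 0 else 0.0


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def backprop_step(
    params: Dict[str, float], x: Tuple[float, float], y: float, lr: float
) -> Tuple[Dict[str, float], float, Dict[str, float]]:
    """One forward/backward pass and one gradient-descent update for the 2-2-1 network."""
    x1, x2 = x

    # Forward pass: w_ij connects input x_i to hidden node h_j.
    z1 = params["w11"] * x1 + params["w21"] * x2
    z2 = params["w12"] * x1 + params["w22"] * x2
    h1, h2 = relu(z1), relu(z2)
    s = params["w1o"] * h1 + params["w2o"] * h2
    o = sigmoid(s)

    # Backward pass for J = -y * log(o):
    # dJ/do = -y / o and do/ds = o * (1 - o), so dJ/ds = -y * (1 - o).
    ds = -y * (1.0 - o)

    # Hidden-layer errors use the output weights *before* the update.
    dz1 = ds * params["w1o"] * relu_grad(z1)
    dz2 = ds * params["w2o"] * relu_grad(z2)

    grads = {
        "w11": dz1 * x1,
        "w21": dz1 * x2,
        "w12": dz2 * x1,
        "w22": dz2 * x2,
        "w1o": ds * h1,
        "w2o": ds * h2,
    }

    # Gradient-descent update: w <- w - lr * dJ/dw, all weights updated simultaneously.
    new_params = {name: params[name] - lr * grads[name] for name in params}
    return new_params, o, grads


# Initial values w^(0) as stated in the question.
params0 = {"w11": 0.1, "w21": 0.2, "w12": 0.3, "w22": 0.2, "w1o": 0.4, "w2o": 0.5}
params1, o, grads = backprop_step(params0, x=(0.1, 0.2), y=1.0, lr=0.1)

print(f"o = {o:.4f}")  # o = 0.5137
for name, value in params1.items():
    print(f"{name}^(1) = {value:.4f}")
# w11^(1) = 0.1019
# w21^(1) = 0.2039
# w12^(1) = 0.3024
# w22^(1) = 0.2049
# w1o^(1) = 0.4024
# w2o^(1) = 0.5034
```

For these inputs $z_1 = 0.05$ and $z_2 = 0.07$, so both ReLU derivatives are 1, and $o \approx 0.5137$ gives $\partial J/\partial s = o - 1 \approx -0.4863$. Every gradient is therefore negative, and each of the six weights increases slightly after the update.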
