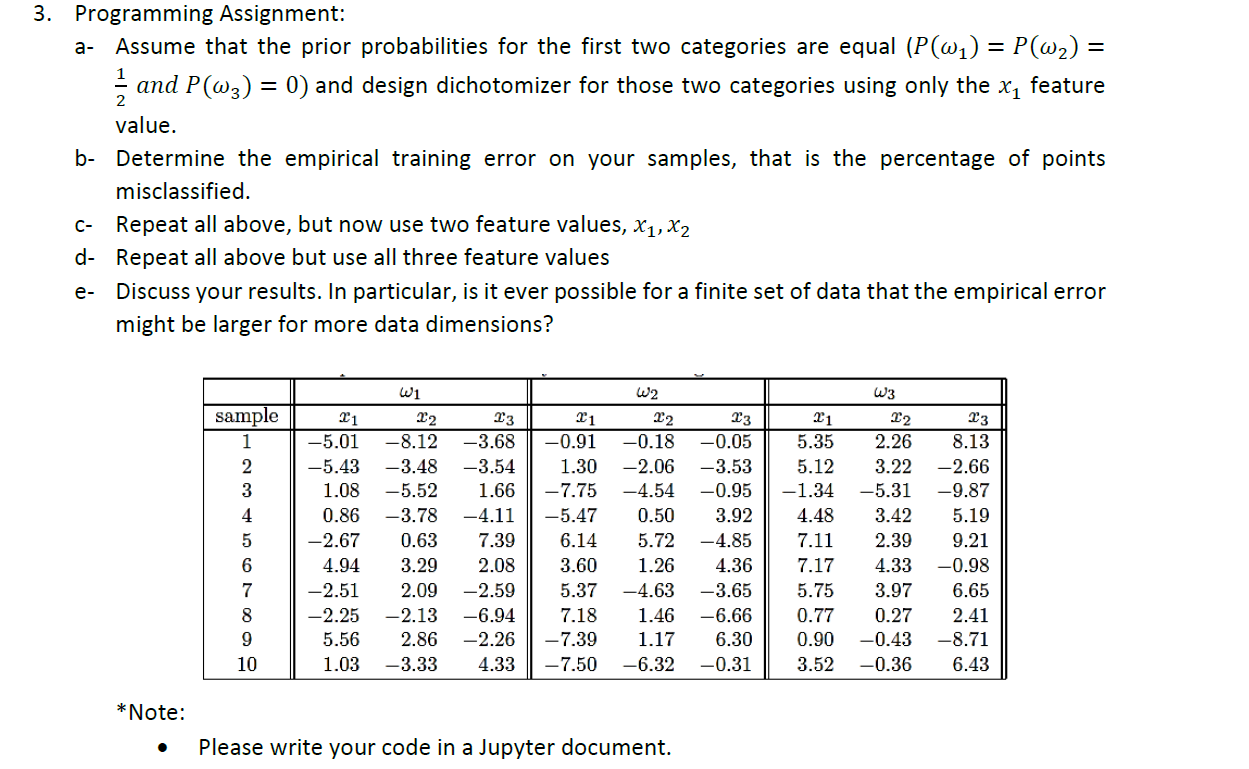

Question:

3\. a- Programming Assignment: Assume that the prior probabilities for the first two categories are equal (P(ω1) = P(ω2) = 1/2 and P(ω3) = 0) and design a dichotomizer for those two categories using only the x1 feature value.

b- Determine the empirical training error on your samples, that is, the percentage of points misclassified.

c- Repeat all of the above, but now use two feature values, x1 and x2.

d- Repeat all of the above, but use all three feature values.

Discuss your results. In particular, is it ever possible for a finite set of data that the empirical error might be larger for more data dimensions?

| Sample | ω1 x1 | ω1 x2 | ω1 x3 | ω2 x1 | ω2 x2 | ω2 x3 | ω3 x1 | ω3 x2 | ω3 x3 |
|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| 1 | -5.01 | -8.12 | -3.68 | -0.91 | -0.18 | -0.05 | 5.35 | 2.26 | 8.13 |
| 2 | -5.43 | -3.48 | -3.54 | 1.30 | -2.06 | -3.53 | 5.12 | 3.22 | -2.66 |
| 3 | 1.08 | -5.52 | 1.66 | -7.75 | -4.54 | -0.95 | -1.34 | -5.31 | -9.87 |
| 4 | 0.86 | -3.78 | -4.11 | -5.47 | 0.50 | 3.92 | 4.48 | 3.42 | 5.19 |
| 5 | -2.67 | 0.63 | 7.39 | 6.14 | 5.72 | -4.85 | 7.11 | 2.39 | 9.21 |
| 6 | 4.94 | 3.29 | 2.08 | 3.60 | 1.26 | 4.36 | 7.17 | 4.33 | -0.98 |
| 7 | -2.51 | 2.09 | -2.59 | 5.37 | -4.63 | -3.65 | 5.75 | 3.97 | 6.65 |
| 8 | -2.25 | -2.13 | -6.94 | 7.18 | 1.46 | -6.66 | 0.77 | 0.27 | 2.41 |
| 9 | 5.56 | 2.86 | -2.26 | -7.39 | 1.17 | 6.30 | 0.90 | -0.43 | -8.71 |
| 10 | 1.03 | -3.33 | 4.33 | -7.50 | -6.32 | -0.31 | 3.52 | -0.36 | 6.43 |

*Note: Please write your code in a Jupyter document.
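For reference, a dichotomizer like the one in part a is commonly built from Gaussian discriminant functions. This is an assumption: the question does not fix the density model. Here $\hat{\boldsymbol\mu}_i$ and $\hat{\Sigma}_i$ are the mean and covariance estimated from the ten samples of class $\omega_i$, restricted to the $d$ features in use ($d = 1, 2, 3$ for parts a, c, d):

$$
g_i(\mathbf{x}) = -\tfrac{1}{2}(\mathbf{x}-\hat{\boldsymbol\mu}_i)^{\mathsf T}\hat{\Sigma}_i^{-1}(\mathbf{x}-\hat{\boldsymbol\mu}_i) - \tfrac{d}{2}\ln 2\pi - \tfrac{1}{2}\ln\lvert\hat{\Sigma}_i\rvert + \ln P(\omega_i), \qquad i = 1, 2
$$

$$
\text{decide } \omega_1 \text{ if } g_1(\mathbf{x}) > g_2(\mathbf{x}), \text{ otherwise } \omega_2; \qquad
\text{empirical error} = \frac{\#\{\text{misclassified training points}\}}{20}
$$

With $P(\omega_1) = P(\omega_2) = 1/2$, the $\ln P(\omega_i)$ terms are equal and cancel in the comparison. The $\tfrac{d}{2}\ln 2\pi$ terms also cancel.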
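Below is a minimal Jupyter-cell sketch of parts a through d. It **assumes** Gaussian class-conditional densities whose mean and covariance are fitted by maximum likelihood (dividing by n) from each class's ten samples. The helper names `fit_gaussian`, `discriminant` and `training_error` are hypothetical and were chosen only for this illustration. Only ω1 and ω2 are used, since P(ω3) = 0.

```python
import numpy as np

# Training samples from the table: rows are samples 1..10, columns are x1, x2, x3.
W1 = np.array([
    [-5.01, -8.12, -3.68], [-5.43, -3.48, -3.54], [ 1.08, -5.52,  1.66],
    [ 0.86, -3.78, -4.11], [-2.67,  0.63,  7.39], [ 4.94,  3.29,  2.08],
    [-2.51,  2.09, -2.59], [-2.25, -2.13, -6.94], [ 5.56,  2.86, -2.26],
    [ 1.03, -3.33,  4.33],
])
W2 = np.array([
    [-0.91, -0.18, -0.05], [ 1.30, -2.06, -3.53], [-7.75, -4.54, -0.95],
    [-5.47,  0.50,  3.92], [ 6.14,  5.72, -4.85], [ 3.60,  1.26,  4.36],
    [ 5.37, -4.63, -3.65], [ 7.18,  1.46, -6.66], [-7.39,  1.17,  6.30],
    [-7.50, -6.32, -0.31],
])


def fit_gaussian(X):
    """ASSUMPTION: Gaussian model; ML mean and covariance (divide by n, not n - 1)."""
    mu = X.mean(axis=0)
    diff = X - mu
    cov = diff.T @ diff / X.shape[0]
    return mu, cov


def discriminant(X, mu, cov, prior):
    """g(x) = -1/2 (x-mu)^T cov^-1 (x-mu) - d/2 ln 2pi - 1/2 ln|cov| + ln P, row-wise."""
    d = X.shape[1]
    diff = X - mu
    # Squared Mahalanobis distance of every row of X from mu.
    maha = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * maha - 0.5 * d * np.log(2 * np.pi) - 0.5 * logdet + np.log(prior)


def training_error(features, p1=0.5, p2=0.5):
    """Fit the two-class rule on the chosen feature columns and return the
    fraction of the 20 training points it misclassifies."""
    X1, X2 = W1[:, features], W2[:, features]
    params1, params2 = fit_gaussian(X1), fit_gaussian(X2)
    X = np.vstack([X1, X2])
    y = np.array([1] * len(X1) + [2] * len(X2))
    g1 = discriminant(X, *params1, p1)
    g2 = discriminant(X, *params2, p2)
    pred = np.where(g1 > g2, 1, 2)  # decide omega_1 when g1 > g2
    return np.mean(pred != y)


for name, cols in [("x1", [0]), ("x1, x2", [0, 1]), ("x1, x2, x3", [0, 1, 2])]:
    print(f"features {name}: empirical training error = {training_error(cols):.2f}")
```

On a sample this small, the choice of covariance estimator matters. Dividing by n - 1 instead of n widens the fitted variances, which shifts the decision thresholds and can change which borderline points count as misclassified.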