Question:

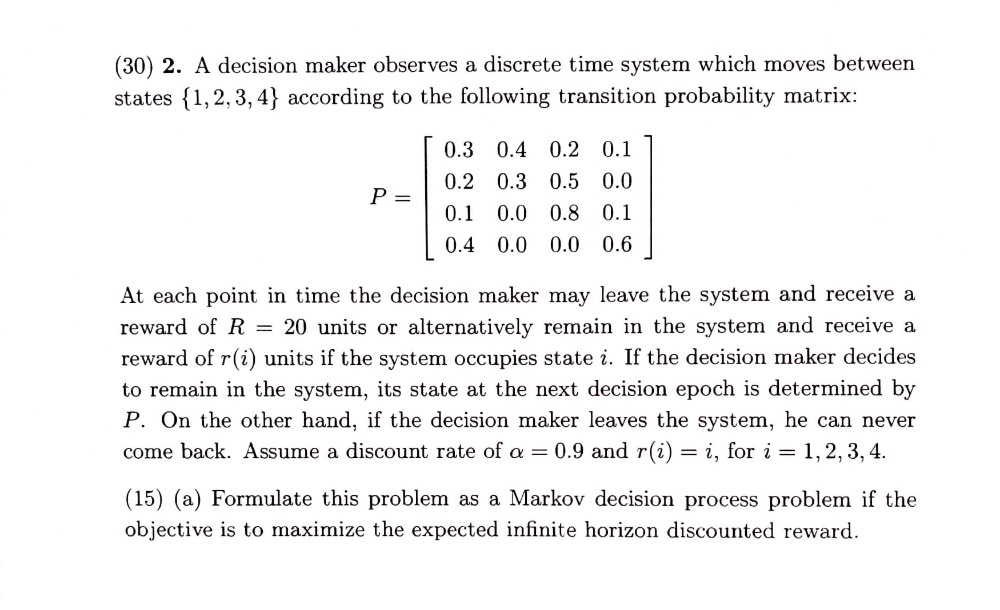

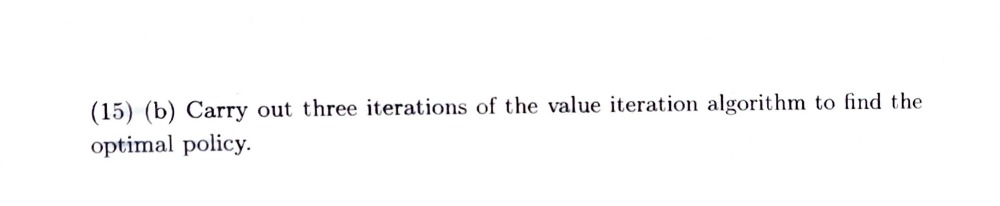

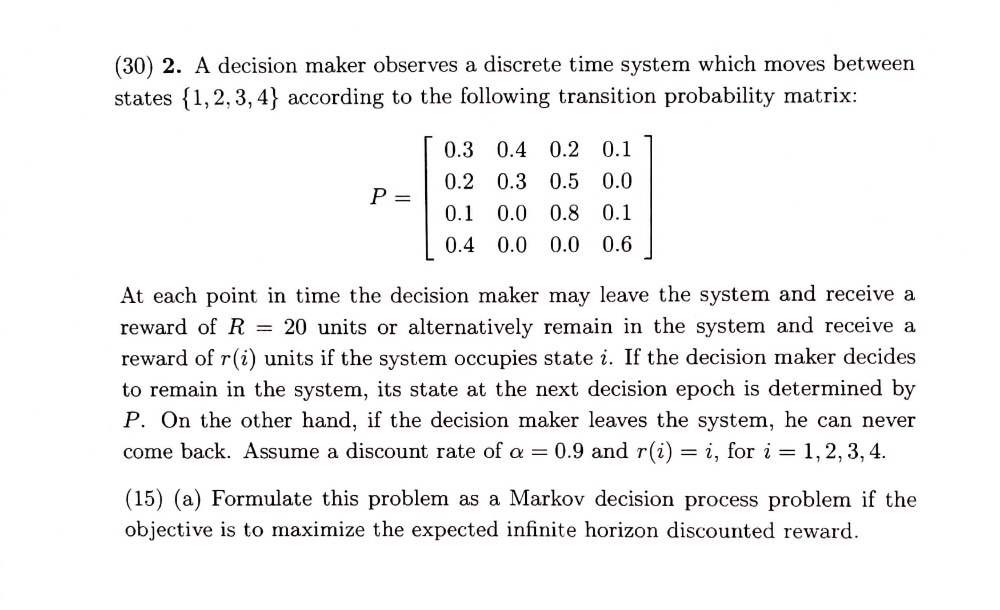

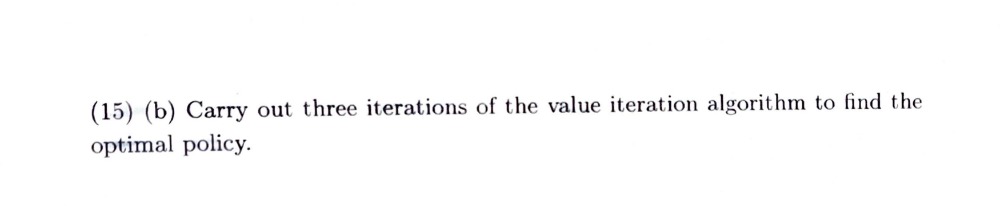

(30) 2. A decision maker observes a discrete-time system which moves between states {1, 2, 3, 4} according to the following transition probability matrix:

$$
P = \begin{pmatrix}
0.3 & 0.4 & 0.2 & 0.1 \\
0.2 & 0.3 & 0.5 & 0.0 \\
0.1 & 0.0 & 0.8 & 0.1 \\
0.4 & 0.0 & 0.0 & 0.6
\end{pmatrix}
$$

At each point in time the decision maker may leave the system and receive a reward of R = 20 units, or alternatively remain in the system and receive a reward of r(i) units if the system occupies state i. If the decision maker decides to remain in the system, its state at the next decision epoch is determined by P. On the other hand, if the decision maker leaves the system, he can never come back. Assume a discount rate of α = 0.9 and r(i) = i, for i = 1, 2, 3, 4.

(15) (a) Formulate this problem as a Markov decision process problem if the objective is to maximize the expected infinite horizon discounted reward.

(15) (b) Carry out three iterations of the value iteration algorithm to find the optimal policy.
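One possible way to set this up, offered as a sketch rather than the official solution: add an absorbing state Δ (a label introduced here, not in the question) that the system enters once the decision maker leaves and that earns nothing afterwards. In each state i in {1, 2, 3, 4} the actions are "stay" (reward r(i) = i, next state drawn from row i of P) and "leave" (reward R = 20, next state Δ). Assuming the stated discount rate 0.9 acts as a multiplicative discount factor, written α here, the optimality equation would be

$$
v(i) = \max\Big\{\, 20,\; i + \alpha \sum_{j=1}^{4} P_{ij}\, v(j) \Big\}, \qquad i = 1, 2, 3, 4 .
$$

The Python sketch below applies this update three times. The starting vector v0 = 0, the tie-breaking rule (ties go to "leave"), and all function and constant names are assumptions made for illustration.

```python
# Sketch of value iteration for the stay-or-leave problem.
# Assumptions (not stated in the question): 0.9 is a multiplicative discount
# factor, leaving leads to an absorbing state worth 0, and v0 = 0.

P = [
    [0.3, 0.4, 0.2, 0.1],
    [0.2, 0.3, 0.5, 0.0],
    [0.1, 0.0, 0.8, 0.1],
    [0.4, 0.0, 0.0, 0.6],
]
LEAVE_REWARD = 20.0   # R in the question
DISCOUNT = 0.9        # discount rate from the question
STATES = [1, 2, 3, 4]


def stay_reward(state):
    """Per-period reward r(i) = i for remaining in the system."""
    return float(state)


def value_iteration_step(v):
    """Apply one Bellman update; return the new values and greedy actions."""
    new_v, policy = [], []
    for row, state in enumerate(STATES):
        expected_next = sum(p * vj for p, vj in zip(P[row], v))
        stay_value = stay_reward(state) + DISCOUNT * expected_next
        # Ties are broken in favour of leaving (an arbitrary choice).
        if stay_value > LEAVE_REWARD:
            new_v.append(stay_value)
            policy.append("stay")
        else:
            new_v.append(LEAVE_REWARD)
            policy.append("leave")
    return new_v, policy


def run(n_iterations=3):
    """Run value iteration from v0 = 0 and keep every iterate."""
    v = [0.0] * len(STATES)
    history = []
    for _ in range(n_iterations):
        v, policy = value_iteration_step(v)
        history.append((v, policy))
    return history


if __name__ == "__main__":
    for n, (v, policy) in enumerate(run(3), start=1):
        print(n, [round(x, 4) for x in v], policy)
```

Under these assumptions the iterates are v1 = (20, 20, 20, 20), v2 = (20, 20, 21, 22) and v3 = (20, 20.45, 21.9, 23.08), and the greedy policy after the third iteration is to leave in state 1 and stay in states 2, 3 and 4. Three iterations do not by themselves prove that this policy is optimal; they only give the policy that is greedy with respect to the third iterate.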
