Question: 4 . 2 If we keep the hidden layer parameters above fixed but add and train additional hidden layers ( applied after this layer )

If we keep the hidden layer parameters above fixed but add and train additional hidden layers applied after

this layer to further transform the data, could the resulting neural network solve this classification problem?

yes

no

Suppose we stick to the layer architecture but add many more ReLU hidden units, all of them without offset

parameters. Would it be possible to train such a model to perfectly separate these points?

Note : Assume that no data points lie on the same line through the origin.

yes

no Which of the following statements is correct?

The gradient calculated in the backpropagation algorithm consists of the partial derivatives of the loss

function with respect to each network weight.

True

False

Initialization of the parameters is often important when training large feedforward neural networks.

If weights in a neural network with sigmoid units are initialized to close to zero values, then during early

stochastic gradient descent steps, the network represents a nearly linear function of the inputs.

True

False

On the other hand, if we randomly set all the weights to very large values, or don't scale them properly

with the number of units in the layer below, then the sigmoid units would behave like sign units. Here,

"behave like sign units" allows for shifting or rescaling of the sign function.

Note that a sign unit is a unit with activation function sign if and sign if

For the purpose of this question, it does not matter what sign is

True

False

If we use only sign units in a feedforward neural network, then the stochastic gradient descent update

will

almost never change any of the weights

change the weights by large amounts at random

Stochastic gradient descent differs from true gradient descent by updating only one network weight

during each gradient descent step.

True

False There are many good reasons to use convolutional layers in CNNs as opposed to replacing them with fully

connected layers. Please check T or F for each statement.

Since we apply the same convolutional filter throughout the image, we can learn to recognize the same feature

wherever it appears.

True

False

A fully connected layer for an image has more parameters than the size of image.

True

False

A fully connected layer can learn to recognize features anywhere in the image even if the features appeared

preferentially in one location during training

True

Falsedefined in the figure below. Note that hidden units have no offset parameters in this problem.

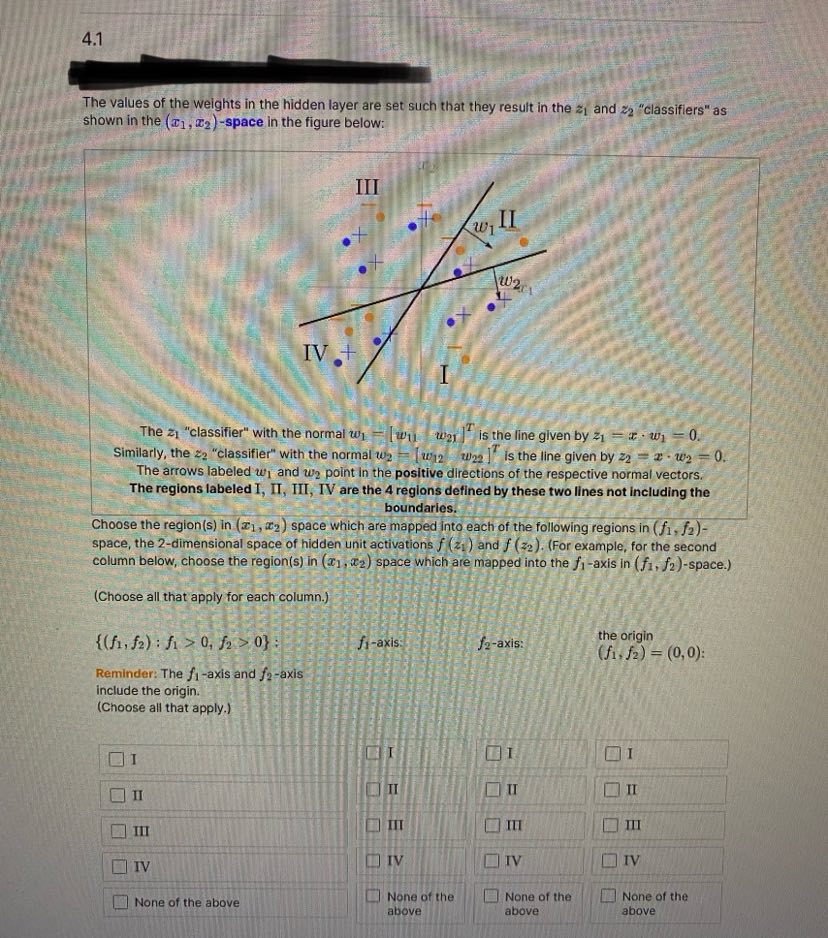

maxThe values of the weights in the hidden layer are set such that they result in the and "classifiers" as

shown in the space in the figure below:

The "classifier" with the normal is the line given by

Similarly, the "classifier" with the normal is the line given by

The arrows labeled and point in the positive directions of the respective normal vectors.

The regions labeled I, II III, IV are the regions defined by these two lines not including the

boundaries.

Choose the regions in space which are mapped into each of the following regions in

space, the dimensional space of hidden unit activations and For example, for the second

column below, choose the regions in space which are mapped into the axis in space.

Choose all that apply for each column.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock